Chapter six

Gems in the junkyard

The idea that holography might be a useful metaphor for understanding memory in the human brain was first proposed by the Dutch optical physicist Pieter Jacobus van Heerden in April 1963, in two back-to-back papers in the journal Applied Optics. At the time he was with Polaroid in Cambridge, Massachusetts.

In the second paper, van Heerden presents a technology for the optical mass storage of holographic images in a solid block of photosensitive material. In both papers he describes a technique for retrieving holographic images from the solid almost instantaneously. There is no sorting and no indexing: it is a “content-addressable” memory. Presented with a single sample image, the van Heerden system can instantly identify any similar image in storage and project it.

The image to be matched does not have to call up an exact copy, only a similar image. Degrees of similarity can be detected and measured. A simple threshold detector can be set to retrieve only the few images of the very highest similarity, or it can be set unselectively, to retrieve many sort-of-similar images.
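
Retrieval by resemblance, with an adjustable threshold, can be sketched in a few lines of Python. This is a software analogy only, not van Heerden's optical method: the stored "images" are hypothetical 8-pixel lists, cosine similarity stands in (as an assumption) for the brightness of an optical match, and the `threshold` argument plays the part of the threshold detector.

```python
import math

def cosine_similarity(a, b):
    """Normalized correlation of two equal-length pixel lists (1.0 = same pattern)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norm if norm else 0.0

def recall(store, probe, threshold):
    """Return (name, score) for every stored image whose similarity to the probe
    reaches the threshold, best match first. The probe itself is the address:
    no index or key is consulted."""
    hits = [(name, cosine_similarity(image, probe)) for name, image in store.items()]
    hits = [(name, score) for name, score in hits if score >= threshold]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)

# Hypothetical 8-pixel "images".
store = {
    "cup":   [0, 1, 1, 1, 0, 0, 1, 0],
    "book":  [1, 1, 1, 1, 1, 1, 0, 0],
    "stamp": [1, 0, 1, 0, 1, 0, 1, 0],
}
probe = [0, 1, 1, 1, 0, 0, 1, 1]   # a slightly corrupted view of the cup

selective = recall(store, probe, threshold=0.85)   # only the closest match
unselective = recall(store, probe, threshold=0.4)  # sort-of-similar images too
```

Raising `threshold` narrows recall to the single closest image; lowering it returns a ranked list of looser resemblances, much as the unselective setting would.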

The system will also work for a larger image containing a similar image – a single face in the class picture. Having invented hardware for an optical memory, van Heerden recognized and explained that the machinery he had conceived was able to do pretty much what the brain does. Here is a review of a modern optical storage and recognition system of a very similar type, designed to recognize fingerprints.
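
The face-in-the-class-picture search can be sketched with the correlation theorem, the same mathematics an optical correlator carries out with lenses. In this hedged Python sketch, short 1-D lists stand in for images, a hand-written O(n²) discrete Fourier transform stands in for the lens, and the names `dft` and `locate` are hypothetical; it illustrates the principle and does not describe van Heerden's apparatus or any particular fingerprint system.

```python
import cmath

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def locate(scene, template):
    """Find where template best matches scene using the correlation theorem:
    corr[m] = sum_t template[t] * scene[t + m] = IDFT(DFT(scene) * conj(DFT(template)))[m].
    Returns the offset of the strongest peak and the full correlation."""
    n = len(scene)
    padded = template + [0.0] * (n - len(template))
    S, T = dft(scene), dft(padded)
    corr = [v.real / n for v in dft([s * t.conjugate() for s, t in zip(S, T)], sign=1)]
    best = max(range(n), key=lambda m: corr[m])
    return best, corr

# A hypothetical 5-sample "face" placed at offset 11 in a 32-sample "crowd".
face = [1.0, -1.0, 2.0, -2.0, 1.0]
crowd = [0.0] * 32
crowd[11:16] = face
crowd[3], crowd[25] = 1.5, -1.0   # unrelated clutter

offset, corr = locate(crowd, face)
```

The correlation peaks where the stored pattern lines up with its copy in the scene, whatever else the scene contains; an optical correlator evaluates every offset at once.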

In as little as 400 milliseconds, a human being can recognize a face in a picture of a crowd and push a button to signal this recognition. Van Heerden was quite interested in the face-in-a-crowd feature of his invention. Seven years later, in 1970, van Heerden got into a little public skirmish about it with a connectionist in an exchange of letters published in Nature. The connectionist had written an article urging that neural nets could accomplish everything then claimed for holographic memories.

Van Heerden responded that a holographic memory could do something the neural nets could not do – recognize and pick faces out of a crowd. The connectionist replied that these were early times (1970) – too early to say what one system might do and what another might not do.

A holographic memory can indeed, in the hardware version, recognize a face like lightning. Of course, at the level of the physical hardware, optical holograms and the electronic circuits that run neural net software both move their signals at or near the speed of light.

In his original papers van Heerden expressed some reservations about projecting his optical memory too literally onto the brain – and they are, in retrospect, familiar objections. “The quantitative theory points out the necessity of maintaining exact phase relationships in the waves over long distances. Any change in the speed of propagation in one part of the neuron network compared to the speed in another part would scramble the information in a hopeless way. It seems therefore necessary to assume a calibrating system of pulses in the brain, which at all times checks this and compensates, if necessary, the speed of propagation…”

“Maintaining exact phase relationships” is the core principle of holography. The difficulty of accomplishing this in the brain dogged the biological holographic memory theory from the beginning, and in the end it was probably this problem – inexact phase relationships in the brain – that pegged it as an implausible idea.
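
The fragility van Heerden describes can be shown numerically. In this sketch, which rests on simplifying assumptions rather than on any model of neural tissue, the Fourier spectrum of a random test signal stands in for the stored interference pattern. Every coefficient's phase is then shifted by a random error, as uneven conduction speeds might shift it, and the signal is decoded again. The names and the uniform error model are illustrative.

```python
import cmath
import math
import random

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def correlation(a, b):
    """Pearson correlation of two real sequences (1.0 = perfect match)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da, db = [v - ma for v in a], [v - mb for v in b]
    num = sum(x * y for x, y in zip(da, db))
    return num / math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))

def decode_with_phase_errors(signal, max_error, rng):
    """Encode the signal as its Fourier spectrum, shift every coefficient's phase
    by a random error in [-max_error, +max_error] radians, then decode (real part)."""
    n = len(signal)
    spectrum = dft(signal)
    jittered = [c * cmath.exp(1j * rng.uniform(-max_error, max_error)) for c in spectrum]
    return [v.real / n for v in dft(jittered, sign=1)]

rng = random.Random(1)
memory = [rng.gauss(0, 1) for _ in range(128)]   # an arbitrary stored "image"
fidelity = {err: correlation(memory, decode_with_phase_errors(memory, err, rng))
            for err in (0.1, 1.5, math.pi)}
```

Small phase errors (within ±0.1 radian) leave the decoded signal almost perfectly correlated with the original; errors spread around the whole circle leave essentially no correlation, the hopeless scrambling of the quotation above.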

Van Heerden and Stephen Benton were well acquainted, and there is a little bit of biographical material about van Heerden in an essay of Benton’s on the history of holography. For a thorough summary of van Heerden’s thinking about memory in the brain, and for the best overall history of the idea that a hologram might be analogous to human memory, see Chapter 7 of the wonderful book, Metaphors of Memory by Douwe Draaisma.

Karl Pribram, Karl Lashley, and Donald Hebb

The idea that memory is holographic was independently conceived and advanced a second time, in 1969, in an article in Scientific American by Karl Pribram, a researcher and neurosurgeon who was then at Stanford. The article emphasized the holographic mode of storage, rather than retrieval. Pribram focused on the fact that holographic storage is distributed: any tiny part of the film on which a hologram is recorded can be used to reproduce the whole image.

Karl Lashley had suggested in his famous essay, In Search of the Engram, that the brain must store a memory image everywhere, throughout its substance, rather than in localized areas or specific circuits. A brain can be wounded or physically damaged in various ways, sometimes massively damaged – and yet retain its power to remember images. Lashley concluded that “It is not possible to demonstrate the isolated localization of a memory trace anywhere within the nervous system. Limited regions may be essential for learning or retention of a particular activity, but within such regions the parts are functionally equivalent. The engram is represented throughout the region.” [Italics added].

It was Karl Lashley who, in 1942, first suggested the idea that a memory might be stored in distributed fashion in the form of an interference pattern. He died in 1958.

Karl Pribram (1919-2015), who had worked with Lashley, recognized in the late 1960s that the holographic technique provided a physical model that could explain the apparent distribution of memory throughout the medium of brain tissue. In his long, intriguing retrospective essay, Brain and Mathematics, Pribram recalled the origin of the idea that memory might be stored as an interference pattern – and also, perhaps, the origin of the deep-seated conflict between holographic memory theory and the Hebb hypothesis.

Hebb’s idea, which was the source of the dominant view of memory today, was that the nervous system rewires itself (through facilitation at the synapses) in response to new experience, and that the “grooved in,” or facilitated, new pathway constitutes the memory. According to Pribram’s very politely phrased account, Lashley could not buy it:

“Lashley (1942) had proposed that interference patterns among wave fronts in brain electrical activity could serve as the substrate of perception and memory as well. This suited my earlier intuitions, but Lashley and I had discussed this alternative repeatedly, without coming up with any idea what wave fronts would look like in the brain. Nor could we figure out how, if they were there, how they could account for anything at the behavioral level. These discussions taking place between 1946 and 1948 became somewhat uncomfortable in regard to Don Hebb’s book (1948) that he was writing at the time we were all together in the Yerkes Laboratory for Primate Biology in Florida. Lashley didn’t like Hebb’s formulation but could not express his reasons for this opinion: ‘Hebb is correct in all his details but he’s just oh so wrong.’”
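
Hebb's proposal is often summarized as "cells that fire together wire together": a synapse strengthens when the cells on both sides of it are active at the same time. A minimal sketch, assuming the common textbook form of the rule, Δw = η · pre · post, with a hypothetical learning rate `eta` (Hebb stated the idea in words, not as this equation):

```python
def hebbian_update(weights, pre, post, eta=0.1):
    """Return new weights with w[i][j] += eta * pre[j] * post[i]:
    a synapse grows only when input cell j and output cell i are active together."""
    return [[w_ij + eta * pre_j * post_i for w_ij, pre_j in zip(row, pre)]
            for row, post_i in zip(weights, post)]

# Two input cells feeding two output cells; every synapse starts at zero.
weights = [[0.0, 0.0],
           [0.0, 0.0]]
for _ in range(5):   # the same experience, repeated five times
    weights = hebbian_update(weights, pre=[1, 0], post=[0, 1])
```

Five repetitions of the same experience "groove in" a single pathway, from input cell 0 to output cell 1, while every other synapse stays at zero.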

In subsequent decades Karl Pribram worked so long and faithfully to develop the idea of a holographic memory, and to make it visible and plausible, that most people associate the theory first and foremost with Pribram. Until recently, when I read Metaphors of Memory, I did not know the theory had had an earlier protagonist, van Heerden.

Holographic memory: the hardware version

Holographic images on film are memories of a sort. One could say this of any type of photographic image on film, but holograms are special. They are 3-dimensional, but there is much more to the metaphor.

Let’s suppose you record on film a holographic image of an ordinary object, perhaps a book on a tabletop.

The details of the setup are important.

Between your eyes and the book, you would place a film holder.

To illuminate the book for this hologram, you could scatter the light of a laser beam off another object in the scene – so let’s place a coffee cup next to the book. The laser can be mounted on the tabletop at your right hand, so you can aim it into the scene obliquely. Note that the laser should be aimed at the coffee cup, not directly at the film. Turn on the laser to expose the film, then turn it off.

Now remove both the book and the coffee cup. Leave the laser in place. Develop the film; re-mount it in the same film holder. And then, finally, turn on the laser once again. The developed film is a hologram, but nothing appears when you project it.

Now pick up the book and return it to its original position in the scene, so that it reflects light back through the film. Immediately an image of the coffee cup appears. And it will appear “lifelike,” in three dimensions, quite indistinguishable from the real coffee cup. If the coffee was steaming when you took the picture, the steam will be recreated too.

Through the physical mechanism of the hologram, one object (the book) has somehow conjured up the recorded image of another object (the coffee cup) that has been associated with it in the past.

When the real book is removed from the scene, the image of the cup will vanish. If we now carefully replace the holographic image of the cup with the real cup, an image of the book will appear.

This is a magic show. But the idea of bringing back, from storage on film, the fully realized image of one object — simply by introducing into the scene some other object that has been associated with it in the past — this seems an intuitively exact model of how our memory works. Think of an old stamp and your memory conjures up an album. Think of a collar and your memory fills in the dog.

So holography can be used to give a splendid demonstration of a content-addressable associative memory.

The book-and-cup demonstration depends on a coherent light beam and upon precise positioning and re-positioning of the objects.

In this system, however, coherent light scattered from each object acts as a phase reference beam for light scattered from the other object. In this sense it is a little more like the real world than most holographic setups. More typically, a pure reference beam – never scattered – is used as a phase yardstick. In the book-and-cup demonstration, the reference beams are impure, worldly. Each object “references” the other.
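
The mutual referencing of book and cup has a software cousin in Tony Plate's holographic reduced representations, a connectionist-era technique that borrows the holographic mathematics rather than anything described in this chapter. In the sketch below, random vectors stand in for the book, the cup, and an unrelated stamp; circular convolution stores the pair in a single trace, and circular correlation with either member recalls a noisy copy of the other. The vector length, seed, and function names are arbitrary choices.

```python
import math
import random

def bind(a, b):
    """Circular convolution: trace[j] = sum_k a[k] * b[(j - k) mod n]."""
    n = len(a)
    return [sum(a[k] * b[(j - k) % n] for k in range(n)) for j in range(n)]

def unbind(cue, trace):
    """Circular correlation: out[j] = sum_k cue[k] * trace[(k + j) mod n].
    Returns a noisy copy of whatever was bound to the cue."""
    n = len(cue)
    return [sum(cue[k] * trace[(k + j) % n] for k in range(n)) for j in range(n)]

def cosine(a, b):
    """Similarity of two vectors, 1.0 meaning identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))

n = 512
rng = random.Random(7)

def random_pattern():
    """Random vector with elements ~ N(0, 1/n), so its length is close to 1."""
    return [rng.gauss(0, 1 / math.sqrt(n)) for _ in range(n)]

book, cup, stamp = random_pattern(), random_pattern(), random_pattern()
trace = bind(book, cup)                 # one stored "hologram" of the pair
cup_recalled = unbind(book, trace)      # present the book ...
book_recalled = unbind(cup, trace)      # ... or present the cup
```

Because convolution is symmetric, one trace answers both questions: the book calls up the cup, and the cup calls up the book. The recalled vectors are noisy (their similarity to the originals is typically around 0.7), but they resemble their partners far more than they resemble the stamp.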

Distributed Memory understood as a lens

Suppose you take a holographic picture of a coffee cup, but this time use a pair of scissors to snip the film into a hundred little squares.

Any tiny square of the film, set up and illuminated with coherent laser light, will reproduce the image of the whole coffee cup, though with less resolution and from a narrower range of viewpoints than the full plate provides. Somehow, the image of the cup has been stored on the film in distributed fashion, so that the information needed to recreate the whole cup is present in each tiny square of the film.

It’s less mystifying than it seems. Recall the insight of the physicist Gordon L. Rogers. A hologram can be thought of as a lens created within the substance of the film. This lens focuses the illuminating light of the laser to form the image we see as a coffee cup. In other words, a hologram is a form of lens that specifies a particular image.

Consider for a moment an ordinary glass lens that has been broken into several pieces: a pair of thin spectacles dropped on a sidewalk, for example. Any little piece of the lens you might pick up and peer through still acts as a perfectly good lens. In the same way, any little piece of a hologram will suitably focus a laser to recreate the image stored on the film.
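
The difference between snipping an ordinary photograph and snipping a Fourier record can be checked numerically. In this 1-D sketch a list of numbers stands in for the picture and its discrete Fourier spectrum stands in for the hologram, a simplification, since a real hologram also records a reference wave. Keeping a quarter of the photograph keeps only a quarter of the scene. Keeping the lowest-frequency quarter of the spectrum, the part nearest the axis in a lens's Fourier plane, keeps the whole scene at lower resolution, much as a small piece of lens forms a complete but less sharp image. The scene and cutoff are arbitrary.

```python
import cmath
import math

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def correlation(a, b):
    """Pearson correlation of two real sequences (1.0 = perfect match)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da, db = [v - ma for v in a], [v - mb for v in b]
    num = sum(x * y for x, y in zip(da, db))
    return num / math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))

n = 64
# A toy 1-D scene: a bright "cup" (samples 8-19) and a dimmer, wider "book" (36-55).
scene = [1.0 if 8 <= t < 20 else 0.6 if 36 <= t < 56 else 0.0 for t in range(n)]

# Snip the photograph: keep the first quarter of the picture, discard the rest.
photo_piece = [v if t < n // 4 else 0.0 for t, v in enumerate(scene)]

# Snip the Fourier record: keep only the 17 lowest spatial frequencies
# (index k and its mirror n - k), roughly a quarter of the coefficients.
spectrum = dft(scene)
holo_piece = [c if min(k, n - k) <= 8 else 0.0 for k, c in enumerate(spectrum)]
from_holo_piece = [v.real / n for v in dft(holo_piece, sign=1)]
```

The book, which lies outside the surviving quarter of the photograph, is simply gone from `photo_piece`, but it is still present, with softened edges, in `from_holo_piece`.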

The brain can be broken or cut to pieces by injuries or disease or surgical procedures. The memory survives intact. Whole segments of the cerebral hemispheres can be cut out and discarded. Memory still clicks.

Karl Pribram remarked in interviews that in some experiments, as much as 98% of an animal’s neuronal wiring could be severed in the visual pathway – yet the animal persisted in recognizing, from memory, an image.

How can this be? The holographic concept provides a model which explains such effects. If memory works like a lens, or hologram – if it is stored as an interference pattern – then the memory is distributed. Each and every “part” of the brain contains the whole of the memory. Thus a memory cannot be extinguished until almost 100% of the tissue is cut away. In the theory as presented, incidentally, the “parts” of the brain are evidently understood to be neurons – not just cells in general.

Distributed memory storage is quite familiar as a biological concept. We know that the genetic memory can be divided and redivided by mitosis and yet remain intact. Nearly every cell of a multicellular organism contains, in its DNA, all the information necessary to reproduce the entire organism.

A telling point for connectionism

Distributed memory was the strong suit of the holographic memory theory. The same feature, however, can be accounted for more readily in neural nets, in which a specific memory can be said to be stored across multiple synapses. Moreover, it is possible to visualize and demonstrate how the neural net mechanism works.

With the holographic model, the distributed memory store is contained in an interference pattern. This idea works fine for light-sensitive film – but it is hard to see how it could work in the nervous system. Since no wave interference could occur on the digital part of the nerve, the axon, it was urged that the dendrites provided places where electrical wave interference might occur. The idea of wave interference in the nervous system is attributed to Karl Lashley, but it was elaborated by R.L. Beurle, who published it in 1956 in the Philosophical Transactions of the Royal Society of London. But it must have seemed a nebulous argument or, at best, one impossible to prove.

In contrast, the principle of distributed storage in neural nets is easy to illustrate with a breadboard circuit, and to see and admire in action on the screen of your computer.
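
Here is a minimal version of such a demonstration in Python, assuming a textbook linear associator rather than any particular model from the history above. Three input/output pairs are superimposed in one weight matrix by the Hebbian outer-product rule, so every synapse carries a share of every memory; then half the synapses are severed at random. Pattern sizes, the 50% lesion, and the seed are arbitrary.

```python
import random

rng = random.Random(3)
N_IN, N_OUT, N_PAIRS = 200, 20, 3

def random_pattern(size):
    """A random pattern of +1/-1 'firing' values."""
    return [rng.choice((-1, 1)) for _ in range(size)]

def store(pairs):
    """Superimpose every pair in one weight matrix with the Hebbian outer-product
    rule, W[i][j] += output[i] * input[j]: each synapse holds a share of every memory."""
    W = [[0.0] * N_IN for _ in range(N_OUT)]
    for x, y in pairs:
        for i in range(N_OUT):
            for j in range(N_IN):
                W[i][j] += y[i] * x[j]
    return W

def recall(W, x):
    """Each output cell fires +1 or -1 according to the sign of its summed input."""
    return [1 if sum(w * v for w, v in zip(row, x)) >= 0 else -1 for row in W]

def lesion(W, fraction):
    """Sever a random fraction of all synapses by zeroing them."""
    return [[0.0 if rng.random() < fraction else w for w in row] for row in W]

def bits_recalled(W, pairs):
    """How many output bits, over all stored pairs, come back correctly."""
    return sum(a == b for x, y in pairs for a, b in zip(recall(W, x), y))

pairs = [(random_pattern(N_IN), random_pattern(N_OUT)) for _ in range(N_PAIRS)]
intact = store(pairs)
damaged = lesion(intact, 0.5)   # cut half the synapses at random
```

With 200 synapses per output cell and only three stored pairs, recall typically survives the lesion with few or no errors. Storing many more pairs, or cutting deeper, makes errors creep in gradually rather than wiping out particular memories.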

Historically, it is clear that the evident superiority of neural nets in explaining the distributed storage of memory kicked one of the strongest props out from under the classical version of holographic memory theory. From reading Douwe Draaisma’s Metaphors of Memory I have formed the impression that this was an important turning point, or fork in the road, in this science. Neuroscientists he interviewed who lived through that era recalled that they were strongly impressed by the principle of distributed storage urged by the holographic memory theorists. When neural nets turned out to offer a distributed storage system based on a different, somewhat more comprehensible principle, they recognized the concept and crossed over to connectionism.

Let me emphasize that neural nets do not offer a superior solution to the problem of distributed memory. They do not. What connectionism provided, in the 1970s, was a more plausible explanation than the holographic model, given the textbook neuron. I think the holographic idea stalled out, around 1973, because a believable and biologically realistic model for a holographic memory could not be constructed using 1-channel, all-or-none neurons. The all-or-none nervous system was “not analog enough” and not synchronous enough and not fast enough to support holographic processing.

If we posit a multichannel neuron, however, the classical holographic memory concept, or at least the underlying principle of storing interference patterns as distributed memory, becomes plausible and accessible once again. We cannot reset our thinking to 1973, but we can see these shelved ideas about distributed memory storage in a new light, and see what we might cherry-pick from this once very influential collection of concepts.

Memory storage and retrieval

In addition to distributed storage, the holographic memory theory had several other intriguing features we should revisit. These advantages followed from the application, in the theory, of the Fourier transform.

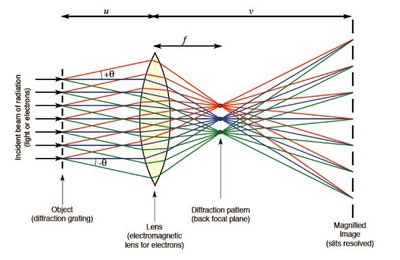

We can be certain that the animal ability to perform a Fourier transformation evolved as soon as nature created the first lens. The transform is accomplished at light speed between the diffraction plane and the image plane of a lens. The lens of course does not calculate like we do. The Fourier transform is just a mathematical description of what the lens does to light waves.

One speculates that the power to perform both the Fourier transform and its inverse, which reverses its effect – by other analog means, inside the brain – is probably ancient as well, and may in fact antedate the lens.

In any event the Fourier transform, understood as a biological process and a recurring theme in natural history, is vastly more ancient than Fourier himself, the revered French mathematician who worked through the technique by hand for the first time in the 19th century.

The Fourier transform was the read-in/read-out machine for the theory of holographic memory. The transform converts a literal image into an interference pattern. Run it again, inversely, and it converts an interference pattern (a memory, per the theory) back into a literal image. Here is a delightful example, using a literal image of a duck:

And here is its Fourier transform, an interference pattern that contains the same information as the literal image, but encodes the duck in a different way:

The Fourier duck, and the method of portraying the transform in color, is to be found at Kevin Cowtan’s Book of Fourier, along with several other playful Fourier creatures and transforms.

The transform can be accomplished by calculation, but it can also be accomplished with hardware – by a lens.
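
By calculation, the read-in/read-out cycle looks like this. The sketch below writes out a direct (slow, O(n²)) two-dimensional discrete Fourier transform so that nothing is hidden inside a library; the 4×4 "image" is an arbitrary example. Transforming and then inverse-transforming returns the original pixels, which is the sense in which the transform pattern contains the same information as the picture.

```python
import cmath

def dft2(image, inverse=False):
    """Direct 2-D discrete Fourier transform of a list-of-lists image.
    Forward: F[u][v] = sum over x, y of image[x][y] * exp(-2*pi*i*(u*x/M + v*y/N)).
    The inverse flips the sign of the exponent and divides by M*N."""
    M, N = len(image), len(image[0])
    sign = 1 if inverse else -1
    scale = 1 / (M * N) if inverse else 1
    return [[scale * sum(image[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / M + v * y / N))
                         for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]

image = [[0, 0, 0, 0],
         [0, 9, 9, 0],
         [0, 9, 0, 0],
         [0, 0, 0, 0]]

pattern = dft2(image)                    # complex-valued "interference pattern"
restored = dft2(pattern, inverse=True)   # read back out
pixels = [[round(v.real, 9) for v in row] for row in restored]
```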

In addition to transforming images for storage, and reverse transforming memories into images, the Fourier transform can be used to accomplish two other useful things: image cleanup, and feature recognition.

The problem of image cleanup

Light must pass through a tangled nest of the eye’s own wiring on its way to the photoreceptors of the vertebrate retina. These structures are fairly transparent and do not completely block the view (we can see, after all), but because they are inhomogeneous they scatter incoming light.

For example, in the 200-micron-thick turtle retina, scatter increases the diameter of an incoming parallel beam from 8 microns as it enters the retina to 50 microns at the level of the outer segments. In anatomical terms this means a neat incoming beam the diameter of a single photoreceptor will cone out, in its passage through the retina, to illuminate 5 photoreceptors. In the process the intensity at the center of the beam falls tenfold.

The result is a degraded, blurry image. Turtles have an especially thick retina, but all vertebrate eyes must solve, or live with, the problem of image degradation from light scattered by the tissues positioned ahead of the photoreceptors.
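
The turtle figures can be checked with simple arithmetic. This sketch uses only the numbers quoted above and assumes, purely for comparison, that the scattered light might spread evenly across the widened beam; real scatter is not uniform, so the even-spread figure is a rough bound rather than a model of the retina.

```python
entry_diameter_um = 8.0       # beam diameter entering the retina (from the text)
exit_diameter_um = 50.0       # beam diameter at the outer segments (from the text)
reported_center_drop = 10.0   # reported fall in intensity at the beam's center

linear_spread = exit_diameter_um / entry_diameter_um   # 6.25 entry-beam widths across
area_spread = linear_spread ** 2                       # 39.0625 entry-beam areas

# If the light spread evenly over the wider disk, the center would dim by the full
# area ratio, roughly 39-fold. The reported drop is only tenfold, which would fit
# light that stays peaked toward the middle of the widened beam.
even_spread_drop_exceeds_report = area_spread > reported_center_drop
```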

A really excellent SEM of the retina can be found on the University of Delaware’s online histology site. It shows clearly the positioning of the photoreceptors near the bottom strata of the retina, the thick layer of jello salad overhead, and the photoreceptors’ apparent orientation – pointed at the back wall of the eye. It is commonly said, for this reason, that the vertebrate retina is “wired from the front.”

The Shower Glass Effect

The neatest way to clarify a view obstructed by intervening nerves, vasculature and glia would involve an application of the Fourier transform. The idea relies on a principle called the Shower Glass Effect. Imagine a three-dimensional hologram of a bather taking a shower behind a frosted, nearly opaque glass door. Because the hologram is three-dimensional, the observer, or voyeur, can change points of view. By moving far enough to one side, the observer can simply look behind the glass panel. The clear view of the bather is obtained even though the holographic recording system was staring directly at an opaque, distortion-inducing glass shower door. What this tells us is that the bather is included in the information captured and stored in the hologram. The hologram can be processed – filtered – in such a way that the intervening visual obstruction is removed.

Software solutions that can deblur an image are useful in forensics and in improving the optical clarity of military intelligence photographs shot from space, through the distorting screen formed by miles of turbulent air between the camera and its target. Mathematical image clean-up is similarly useful in astronomy.
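
The heart of mathematical deblurring can be sketched as division in the Fourier domain. This minimal example assumes the blur is known exactly and applied as a circular convolution to a 1-D signal, and it uses a lightly regularized inverse filter (a simplified Wiener-style filter; `eps` is an assumed regularization constant). Real forensic or astronomical restoration must also estimate the blur and cope with noise, which this sketch ignores.

```python
import cmath

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def blur(signal, kernel):
    """Circular convolution: each output sample is a weighted mix of its neighbors."""
    n = len(signal)
    return [sum(kernel[k] * signal[(t - k) % n] for k in range(n)) for t in range(n)]

def deblur(blurred, kernel, eps=1e-9):
    """Divide out the blur's transfer function H, guarding against tiny |H|."""
    n = len(blurred)
    B, H = dft(blurred), dft(kernel)
    restored = [b * h.conjugate() / (abs(h) ** 2 + eps) for b, h in zip(B, H)]
    return [v.real / n for v in dft(restored, sign=1)]

n = 32
sharp = [1.0 if 10 <= t < 14 else 0.0 for t in range(n)]
kernel = [0.6, 0.2] + [0.0] * (n - 3) + [0.2]   # symmetric 3-tap smear
blurred = blur(sharp, kernel)
restored = deblur(blurred, kernel)
```

With no noise and a blur whose transfer function never reaches zero, the division undoes the smear almost exactly; noise would force a larger `eps` and a less complete recovery.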

One argument for some sort of Fourier processing in the mind is that we all stare at the world through a shower glass all the time. The shower glass is the messy intervening layer of nerves and tissue that obscures all but a tiny spot on the retina from the rest of the world, and vice versa. Somehow, the brain sees around all this goo, and successfully “subtracts it out” of every scene.

Fourier filtering, feature detection

Photographic images can be converted into interference patterns, and then converted back into an image, using the Fourier transform. A transformed photo, which is an interference pattern, can be filtered in certain ways and then Fourier transformed back into a snapshot. The effects are fascinating. These techniques can be used to improve clarity, enhance fine detail, and to detect, in aerial surveillance photos, recurring patterns of high spatial frequency (e.g., straight lines not of natural origin, like gun barrels or fuel barrels).

One of the most interesting capabilities is edge detection. Using the transform, one can in effect turn a person into a cartoon – distinguishing a person from the photographic background with a clear outline.

Richard Gregory, in his classic book Eye and Brain, writes extensively about the brain’s hunger for objects. He shows with optical illusions that the brain will insist on discovering objects, even in scenes where none exist. The Fourier transform is a handy technique for sorting objects away from background.

FOURIER OPTICS LINKS AND RESOURCES

We are interested here in Fourier filtering because we suspect the brain does Fourier filtering routinely – and that it has been developing this skill for many millions of years. Mixing, matching, filtering and transforming images are, one speculates, the core brain processes that underlie discerning, thinking, remembering, and imagining. Conscious thought may have evolved from the most basic Fourier filtering task – cleaning up an image.

Where to start

As John Brayer remarks on his helpful site, the best way to get a feel for this technology is by looking at lots of images in tandem with their Fourier transforms. Scroll down to the photos of the lady in the hat.

Learning Fourier Optics

A detailed journal kept by a gifted student over a summer of studying Fourier optics and image formation. She identifies the most accessible book on Fourier Optics (Steward) and lists links to the most useful net resources on the subject.

If you decide to use Steward, I would suggest you start on pages 106-116, which should probably have been the introduction, and then back up to the beginning.

Fourier filtering sites

Most of the rest of these links show examples of Fourier Filtering. Some of the presentations are rather mathematical, but if you prefer you can scroll past the math — concentrate on the photos.

To apply a Fourier filter, you start with a snapshot. This literal image is then Fourier transformed into an unintelligible but ordered pattern “in the frequency domain.” The Fourier transform can be accomplished optically or by a computer. Fourier filtering consists of carefully modifying this (still unintelligible) pattern – and then Fourier transforming it back into a literal image of the original snapshot. When the second transform is completed, the resulting image will look a little different from the snapshot – sometimes much better. The edges of the object or person in the photo may be strongly enhanced; the whole picture may appear much crisper and better resolved.

The Hypermedia Image Processing Resource is one of the best links for showing what can be accomplished with image filtering, etc. Be sure to click on the small pics.

Here is their discussion of frequency filtering. “Frequency filters process an image in the frequency domain. The image is Fourier transformed, multiplied with the filter function and then re-transformed into the spatial domain. Attenuating high frequencies results in a smoother image in the spatial domain, attenuating low frequencies enhances the edges.”

The photos show what this means in practice. When they say “frequency” they are talking about spatial frequency, for example, the coarseness or fineness of a periodic object like a pocket comb or a diffraction grating. Again, scroll down. Be sure to click on the postage-stamp-sized photos to enlarge the images.
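
The HIPR recipe quoted above (transform, multiply by a filter function, transform back) fits in a short sketch. This 1-D Python version uses an ideal on/off cutoff, an assumption made for simplicity (practical filters usually roll off smoothly to limit ringing), and the "image" is a single bright bar. Keeping only the low spatial frequencies smooths the bar; removing them leaves a response concentrated at the bar's two edges.

```python
import cmath

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def frequency_filter(signal, passes):
    """Transform, multiply each coefficient by passes(f) where f is its spatial
    frequency (cycles per signal length), then transform back."""
    n = len(signal)
    spectrum = dft(signal)
    filtered = [c * passes(min(k, n - k)) for k, c in enumerate(spectrum)]
    return [v.real / n for v in dft(filtered, sign=1)]

n = 64
bar = [1.0 if 20 <= t < 44 else 0.0 for t in range(n)]   # one bright bar
cutoff = 6                                                # cycles per 64 samples
smooth = frequency_filter(bar, lambda f: 1.0 if f <= cutoff else 0.0)  # low-pass
edges = frequency_filter(bar, lambda f: 0.0 if f <= cutoff else 1.0)   # high-pass
```

Because the two filters split the spectrum between them, `smooth` and `edges` add back up to the original bar.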

Finally here is an online short course on Image processing fundamentals.

Scroll past the mathematics to the images and transforms of the girl in the hat (a different girl, another hat). Notice the effect on these images of selectively isolating phase information and magnitude information.
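
The phase-versus-magnitude experiment can be reproduced in miniature. In this 1-D sketch (the test bar and names are arbitrary), one reconstruction keeps each Fourier coefficient's phase but sets every magnitude to 1; the other keeps the magnitudes but sets every phase to zero. The phase-only version puts most of its energy back around the bar's true position, while the magnitude-only version always peaks at the origin, wherever the bar was: a small instance of the classic observation that phase carries much of the positional information in an image.

```python
import cmath

def dft(x, sign=-1):
    """Direct discrete Fourier transform, O(n^2); sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

n = 64
bar = [1.0 if 30 <= t < 37 else 0.0 for t in range(n)]   # one bar, samples 30-36
spectrum = dft(bar)   # no coefficient of this bar's spectrum is zero

# Keep phase, discard magnitude (every coefficient scaled to length 1).
phase_only = [v.real / n for v in dft([c / abs(c) for c in spectrum], sign=1)]
# Keep magnitude, discard phase (every coefficient rotated to angle 0).
magnitude_only = [v.real / n for v in dft([abs(c) for c in spectrum], sign=1)]

def energy_share(signal, lo, hi):
    """Fraction of the signal's energy that falls in samples lo..hi-1."""
    return sum(v * v for v in signal[lo:hi]) / sum(v * v for v in signal)

near_bar_phase = energy_share(phase_only, 24, 44)   # window around the bar
peak_magnitude = max(range(n), key=lambda t: abs(magnitude_only[t]))
```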

Summary

What ideas or features can be salvaged from the old Holographic Theory of Memory?

- Distributed storage of a memory as an interference pattern;

- Memory storage and retrieval by Fourier transformation back and forth between a stored image and its interference pattern;

- Fourier filtering and image processing for object recognition, feature extraction and image cleanup.

And what have we lost?

It appears we have lost holography.

Because we have assumed the retina conserves spatial phase, we can dispense with the notion of a reference beam. We are now free to relax the requirements for a fixed wavelength and perfect coherence – laser properties. Let’s see if we can now construct a memory that works, as vision does, for moving objects illuminated by white light of only partial coherence — the objects and light of the everyday world.