Chapter four

Eye Memory

John von Neumann was a genius like no other. In 1938, in middle life, von Neumann transformed himself into a weapon designer, arguably the best one of his century. In the late 1940s and early 1950s, he was working fast to perfect the digital computer. He was fascinated by it, but for him it was a necessary intermediate step, a tool or fixture. He needed the computer to run the monumental Monte Carlo calculations for the hydrogen bomb.

By coincidence, this was a heroic period for neurophysiology. Beginning in 1949 at Woods Hole and Plymouth, the newly invented voltage clamp, applied to the squid's giant axon, was revealing for the first time, in splendid detail, the workings of the axon membrane as a system that governs and gates the flow of ions. It seemed the problem of how nerves conduct impulses was about to be solved.

Von Neumann hoped to discover any and all useful analogies between the human brain and nervous system and the electronic brain he was trying to perfect. He actively sought the opinions and expertise of neurophysiologists. He evidently believed most of what they told him – though it is interesting to notice what piqued his skepticism.

It was his strange sojourn in the land of neurophysiology – another country – that he later reported in his book, The Computer and the Brain. He wrote it in 1956-57, in the last year of his life. It was conceived as a series of lectures but published posthumously as a long essay.

What he did not say

Perhaps the most famous and often quoted line in this book appears at the beginning of Part II, where von Neumann declares that “The most immediate observation regarding the nervous system is that its functioning is prima facie digital.”

The “prima facie” modifier is commonly taken to mean von Neumann saw the brain as “obviously digital,” or “patently digital,” and that the brain therefore must resemble a digital computer. You tend to hear this quote from people who believe, or want to believe, exactly that.

But as you read The Computer and the Brain you will quickly discover that this is not what John von Neumann intended. Von Neumann used words precisely, and to him “prima facie” meant “on its face.”

In 1956, the brain appeared, on its face, to be a digital machine. But von Neumann thought this impression might be misleading. He thought that deeper biological investigation might well demonstrate that the nervous system is not, in fact, digital, or not completely digital.

He suggests that perhaps some intermediate signaling mechanism, a hybrid between analog and digital, might be at work in the brain. For this and other reasons he actively resisted labeling the brain as a digital computer.

The nerve as a hybrid device

Hybrid computers, part analog and part digital, were transitional between the analog computers of the 1940s and the fully digital computers of the 1960s. When von Neumann wrote his essay on the brain in 1956 and 1957, analog/digital hybrid computers were part of his technical repertoire. They were still available as commercial products in the 1970s.

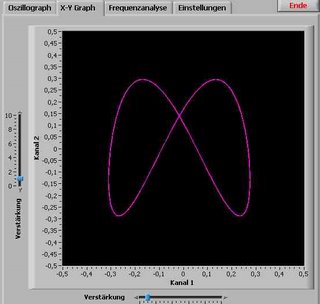

The analog component was fast and easy to use, but imprecise. The response of an analog electronic circuit is readily described mathematically; hence, analog electronic circuits can be used to model mathematical functions.

Analog computation is easy to visualize with an oscilloscope. An analog circuit can be made to draw a curve on the gridded screen, typically depicting voltage versus time. By dialing numeric values into variable components in the circuit under observation (variable resistors, capacitors, inductors) one can draw curves to represent mathematical functions. Straight lines, parabolas, hyperbolas, sine waves, circles, ellipses, Lissajous curves, 3-space objects, whatever you need. You can then pick numerical points off the desired curve — graphic solutions.

To “hybridize” this analog computer, add a digital memory and processor, linked to the analog side by what we would now call an analog-to-digital (A-to-D) converter. Sample the analog voltage or voltages at a point in time and store the result in a digital register. You can then perform digital calculations, or go back to the analog system for another quick read of a point on a curve.
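For readers who think in code, here is a minimal Python sketch of that hybrid loop, under simplifying assumptions of my own: the “analog circuit” is just a sine function standing in for a voltage, the converter is idealized (8 bits, spanning -5 to +5 volts), and the names `analog_voltage`, `a_to_d` and `d_to_volts` are hypothetical, not taken from any real instrument.

```python
import math

V_MIN, V_MAX = -5.0, 5.0  # assumed input range of the converter, in volts
BITS = 8                  # assumed converter resolution
LEVELS = 2 ** BITS - 1    # highest code: 255 for 8 bits

def analog_voltage(t: float) -> float:
    """Stand-in for an analog circuit's output: a 5-volt sine wave at 1 Hz."""
    return 5.0 * math.sin(2 * math.pi * t)

def a_to_d(voltage: float) -> int:
    """Idealized A-to-D conversion: clamp to the input range, then quantize."""
    clamped = min(max(voltage, V_MIN), V_MAX)
    return round((clamped - V_MIN) / (V_MAX - V_MIN) * LEVELS)

def d_to_volts(code: int) -> float:
    """Interpret a stored code as the voltage it represents."""
    return V_MIN + code / LEVELS * (V_MAX - V_MIN)

# Sample the curve at four instants and keep the results in a digital "register".
register = [a_to_d(analog_voltage(t)) for t in (0.0, 0.25, 0.5, 0.75)]

# A purely digital calculation on the stored samples: peak-to-peak swing in volts.
swing = d_to_volts(max(register)) - d_to_volts(min(register))
```

Real hybrid machines did this with dedicated hardware, of course; the point is only that sampling turns a point on a continuous curve into a number that can sit in a register and be worked on digitally.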

Not surprisingly, the idea of the hybrid analog/digital system was projected onto the nervous system.

The nerve is functionally and anatomically split. The nerve cell appears to be an analog device on its input side, that is, in the dendrites. It is widely assumed that in the dendrites incoming voltages may be buffered, summed and subtracted, perhaps even made to depict a curve.

Beyond the axon hillock, the nerve must be viewed as a digital device. The axon either fires or does not fire. It conveys a purely digital signal.

Not digital in the sense of a binary code — not a one or a zero. Digital in the sense of a finger sticking up — the famous “all-or-none” voltage spike.

The nerve preserves analog input information by encoding it as the interval between the digital output spikes. Or so it seemed in 1956.

Possibly this is what von Neumann meant by “a hybrid of analog and digital.” The hybrid neuron idea identified the dendrites as an analog signal processor and the axon as a digital transmission line.

A variable voltage level could be sampled by dendrites, somehow converted into a launching schedule for spikes on the axon, and then finally transmitted along the axon as a traveling time interval between two strong digital output signals. (We no longer believe much of this stuff, and haven’t since about 1995 — but in von Neumann’s time it was bedrock.)
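The 1956-era picture can be made concrete with a toy encoder and decoder. This is a sketch under stated assumptions, not a model of any real neuron: the input is a number between 0 and 1, stronger input is assumed to mean a shorter interspike interval, the mapping is assumed to be linear between a hypothetical 2 ms and 100 ms, and every spike is identical, so only its timing carries information.

```python
MIN_INTERVAL_MS = 2.0    # hypothetical: strongest input, fastest firing
MAX_INTERVAL_MS = 100.0  # hypothetical: weakest input, slowest firing

def interval_for(stimulus: float) -> float:
    """Map a stimulus in [0, 1] to an interspike interval; stronger means shorter."""
    s = min(max(stimulus, 0.0), 1.0)
    return MAX_INTERVAL_MS - s * (MAX_INTERVAL_MS - MIN_INTERVAL_MS)

def spike_times(stimulus: float, n_spikes: int = 3, start_ms: float = 0.0) -> list:
    """Launch identical all-or-none spikes; only their timing carries the value."""
    gap = interval_for(stimulus)
    return [start_ms + k * gap for k in range(n_spikes)]

def decode(spikes: list) -> float:
    """Recover the stimulus from the interval between the first two spikes."""
    gap = spikes[1] - spikes[0]
    return (MAX_INTERVAL_MS - gap) / (MAX_INTERVAL_MS - MIN_INTERVAL_MS)

train = spike_times(0.3)
recovered = decode(train)  # approximately 0.3, up to floating-point rounding
```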

Incidentally, this idea that the dendrites could be an analog signal processor appealed very strongly, in later decades, to theorists who thought the brain’s memory might work like a hologram, mainly because they so badly needed to locate an analog signal processor of some kind, somewhere.

A different kind of hybrid

There exist other types of hybrids of analog and digital signal characteristics. One such hybrid is presented in Chapter two: the multichannel neuron.

A single nerve impulse traveling along a multichannel axon is digital on its face (it is a spike), but it communicates to the brain analog gradations denoted by channel numbers 1, 2, 3, … n.

We don’t know what n might be, but we have been using 300 as an anatomically plausible number of channels. It isn’t a purely analog system, because the steps between channels are discrete; in fact they are represented by integers. It is an “incremental analog” system perhaps, with the specific increment denoted by the channel number. Because each pulse is all-or-nothing (always a digital “1,” in effect), it is a solidly reliable signal, highly resistant to noise.
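Here is a minimal sketch of the “incremental analog” idea, assuming (hypothetically) that a value between 0 and 1 is divided evenly among n = 300 channels. The function names and the 0.5 detection threshold are illustrative assumptions, not part of the Corduroy Neuron model as described.

```python
from typing import Optional

N_CHANNELS = 300  # the chapter's working guess for n

def channel_for(value: float, n: int = N_CHANNELS) -> int:
    """Quantize a value in [0, 1] to one of the channel numbers 1..n."""
    v = min(max(value, 0.0), 1.0)
    return min(int(v * n) + 1, n)

def value_for(channel: int, n: int = N_CHANNELS) -> float:
    """Read a channel number back as the midpoint of the band it stands for."""
    return (channel - 0.5) / n

def receive(pulse_amplitude: float, channel: int, threshold: float = 0.5) -> Optional[int]:
    """All-or-none detection: any pulse above threshold counts as a '1' on its channel.
    The pulse's exact size (its noise) does not change the channel number."""
    return channel if pulse_amplitude >= threshold else None

sent = channel_for(0.5)
# A weak pulse and a strong pulse on the same channel deliver the same message.
assert receive(0.8, sent) == receive(1.3, sent) == sent
```

The reading is only as fine as the channel spacing (an error of at most half a step), but a weak or strong pulse on channel 42 is still simply channel 42.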

Notice the surprising computational possibilities for this type of hybrid system. It could use numbers almost like we do, to measure, compare, count and tally. It could be set up to add, subtract, multiply, divide — perhaps even to convolve. It could also use sliding scale, rubber scale, and scale-combining operations to produce logarithmic and vernier results.
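One way such a system might produce logarithmic results is the slide-rule principle: if channels were laid out on a logarithmic scale, adding channel numbers would multiply the values they stand for. The sketch below assumes, purely hypothetically, that adjacent channels differ by a constant ratio of 1.05; nothing in the text fixes that ratio, and the function names are illustrative.

```python
import math

STEP_RATIO = 1.05  # hypothetical ratio between adjacent channels on a log scale

def log_channel(value: float) -> int:
    """Channel k whose value STEP_RATIO**k lies nearest, on a log scale, to value (> 0)."""
    return round(math.log(value, STEP_RATIO))

def channel_value(k: int) -> float:
    """The value that channel k stands for."""
    return STEP_RATIO ** k

def multiply_by_adding(a: float, b: float) -> float:
    """Slide-rule principle: adding log-scale positions multiplies the values."""
    return channel_value(log_channel(a) + log_channel(b))

product = multiply_by_adding(2.0, 3.0)  # close to 6, within the rounding of the scale
```

The answer is approximate, as a slide rule's is; with this step ratio, rounding each input to a whole channel costs at most about 5 percent in the product.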

In the Corduroy Neuron model the dendrites, though superficially analog devices, have become rather mysterious – one must guess there is something very important going on back there.

What is the best “memory organ”?

Von Neumann was eloquent on the problem of selecting a “memory organ” for the brain. For his own electronic brain, he chose the eye as the model for a memory organ.

He thought the worst possible choice for a memory organ in the human brain would be a neuron.

As for the synapses, it is my impression that his hope for them, never fully expressed, was that they might turn out to work like AND and OR and NAND and NOR logic gates.

Could it be true in general, as von Neumann concluded in particular, that the eye might provide a superior model for a memory organ? It seems plausible. If we could understand the eye, we could probably understand the rest of the brain. It has been suggested from time to time that one might re-imagine the cerebral hemispheres as huge everted eyes, with each hemisphere a glorified, multiplied, stratified stack of retinas.

The von Neumann machine

When von Neumann began to work with the engineers who developed the digital computer, one of his first contributions was the suggestion that a memory store be allocated for programming.

This seems so astoundingly obvious now: that a computer should be able to remember what we want it to do. But before von Neumann suggested the concept of the stored program, each computer operation was laboriously set up in advance by the machine’s attendants.

The first stored programs were often read in from punched paper tape, one of the simplest forms of memory, and then held in the machine’s internal store. It was at this point that the digital computer became the modern instrument we now call, generically, a von Neumann Machine.

A fast, compact working memory, such as the common desktop computer’s reserve of DRAM chips, did not yet exist in 1945. The electrical working memory for ones and zeros consisted of devices that could assume and hold two states: these included manual toggle switches, electromechanical relays, and vacuum tube flip-flop circuits.

The early computerists knew they needed to invent a better memory, a better short-term storage medium, and they tried many different approaches before they arrived at the historically important (though now deeply obsolete) solution of ferrite cores.

Eye memory

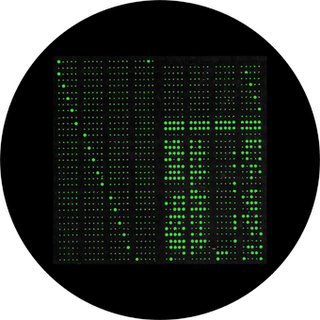

Some early computer memories resembled eyes. The designers borrowed the basic concept of the TV camera. A binary “bit” representing a 1 or 0 was stored as a small spot of electric charge, visible as a glowing dot of phosphor, at a certain geometrically defined point on the screen of a cathode ray tube. A bit could be set, scanned out or refreshed with a fast-moving electron beam. The glowing bit’s “address” was its x,y coordinate position on the face of the tube.
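The addressing scheme can be sketched as a toy model in Python. It is a cartoon, not a physical simulation: charge is assumed to leak away by a fixed fraction per time step, a spot reads as 1 above a hypothetical threshold, and `refresh` simply rewrites every bit at full strength. The class name `ToyEyeMemory` is made up for illustration, and the model ignores the physics of how the beam actually sensed the charge.

```python
class ToyEyeMemory:
    """Cartoon of a CRT "eye memory": each bit is a charge spot at an (x, y) address."""

    def __init__(self, width: int, height: int, leak: float = 0.2, threshold: float = 0.5):
        self.width = width
        self.height = height
        self.leak = leak            # fraction of charge lost per tick (assumed)
        self.threshold = threshold  # charge needed to read as a 1 (assumed)
        self.charge = [[0.0] * width for _ in range(height)]

    def _check(self, x: int, y: int) -> None:
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError(f"address ({x}, {y}) is off the face of the tube")

    def write(self, x: int, y: int, bit: int) -> None:
        self._check(x, y)
        self.charge[y][x] = 1.0 if bit else 0.0

    def read(self, x: int, y: int) -> int:
        self._check(x, y)
        return 1 if self.charge[y][x] >= self.threshold else 0

    def tick(self) -> None:
        """Let every spot leak a little charge."""
        for row in self.charge:
            for x in range(self.width):
                row[x] *= 1.0 - self.leak

    def refresh(self) -> None:
        """Scan every address and rewrite what is read there at full strength."""
        for y in range(self.height):
            for x in range(self.width):
                self.write(x, y, self.read(x, y))

mem = ToyEyeMemory(32, 32)
mem.write(5, 7, 1)
for _ in range(10):   # without refresh, this bit would fade to 0 after a few ticks
    mem.tick()
    mem.refresh()
```

The design point the toy makes is the one the engineers lived with: an eye-like memory forgets unless it is constantly re-scanned.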

At von Neumann’s urging, RCA’s labs tried to create a reliable computer memory from this Cartesian visual concept, but while they were still experimenting in Princeton an Englishman, F. C. Williams of the University of Manchester, perfected the scheme. The Williams memory was quickly adopted by American computer makers, including IBM. It worked, but it was never fully reliable.

A war story has it that when IBM introduced its first mainframe with a Williams eye-memory, at a formal news conference in New York, an eager photographer with a news camera walked up to the machine and popped a flashbulb. The flash blew the machine’s memory to kingdom come.

Core memory, invented in Boston by An Wang, then 26, and independently invented by two other men, quickly replaced the Williams eye-memory. Core memory stored ones and zeros as tiny magnets, and it could be layered in three dimensions, rather than two. It should be noted that these early room-sized computers were each about as powerful as a modern pocket calculator.

It interests me that when the electronic computer was first developed, the designers very quickly arrived at the concept of an eye memory. When Nature got started on the same problem, brain building, it may well have started at the same place.

A primitive eye was perhaps the vertebrate brain’s earliest prototype. Such an eye might freeze an incoming image for a few milliseconds in order to extract useful information from it. A memory, however short-lived, is a memory.

It is easy to see that a photoreceptor might serve wonderfully as a recording machine, because light induces decisive biochemical changes in its cache of rhodopsin. These changes linger; it takes time for rhodopsin to regenerate, or re-cock. So within a photoreceptor there are indeed persistent biochemical changes in response to a change in the outside world. This is a familiar textbook definition of memory: a biochemical change in response to experience. We are trained to ascribe such changes (in the very next breath) to the synapse. But let’s stay clear of the synapse for a bit and posit the idea that the earliest visual memory was written as a chemical and conformational change in a visual pigment such as rhodopsin. The idea will be developed in Chapter 12.

The bizarre anatomy of the human eye

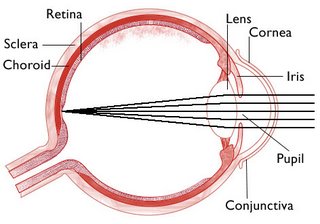

The eye is the only part of the brain we can look at without surgery. It is the best-examined component of the brain, and it is the last place in the brain where anything can happen at the speed of light.

Von Neumann, who essentially used a television camera as a “memory organ” in the digital computer, took a particular interest in eye anatomy.

In the course of his biological studies von Neumann noticed and remarked to his brother on something curious about the eye. He was puzzled by an anatomical fact that most biologists, then and now, brush aside as a mere happenstance or oddity of evolution.

Like all vertebrate eyes, the human eye looks backward, toward the eye socket. The photoreceptive cells of the retina are wired from the front, so that the business end of each retinal photoreceptor seems to point at the back wall of the eye.

Incoming light from the pupil must pass through a web of blood vessels, a fine network of nerve fibers, three layers of cell bodies, and a host of supporting and glial cells before it reaches the light-sensitive tips of the eye’s photoreceptors.

At the tiny fovea the overlying nerve layers are pushed aside to form a kind of peephole, but what about the rest of the retina? What about the many vertebrates that have no fovea? The brain must somehow subtract all the intervening goo out of the clear picture we see of the world. How could the brain accomplish this wonderfully clarifying step? With a calculation? Von Neumann wondered about it. We should too.

Holographic memory

John von Neumann’s interest in eye-memories and eye anatomy has a modern reprise in computer technology. After decades of trying and a checkered history, the possibility persists that an optical mass storage system could be commercialized one day. A holographic memory system can store colossal amounts of data in three-dimensional blocks or films of photosensitive material.

The use of holographic memory in computers or game consoles, if it were to become a cost effective option, might help rescue from contempt a physiological concept that peaked around 1973 and then, for various reasons, faded away: the idea that memory in the human brain works like an optical hologram.

It probably doesn’t work quite that way, but the holographic brain guys flew very close to the truth once or twice. My opinion, of course.

The idea of holographic memory actually gains some evolutionary credence from the curious, backward-looking structure of the human eye, as we shall see.

Had he lived, von Neumann might have been quite interested in holographic memory, for the hologram itself was conceived by a classmate of his, Dennis Gabor: another Budapester, another genius.