Chapter one

One spike is enough.

There are two huge, persistent mysteries about the nerve impulse:

- How can it move so slowly and yet sustain quick thinking?

- How does it convey information to the brain?

The first question dates from 1849, the second from 1995. If we could guess the answers to these two interlocked questions, then we might be on our way, at long last, to understanding what the brain does.

When the Prussian genius Hermann von Helmholtz in 1849 measured for the first time the speed of a nerve impulse, he recorded in his lab notes and ultimately published, in 1850, a figure of 27 meters per second, or about 60 mph.

Because a solitary nerve impulse cannot, in theory, convey any information, the von Helmholtz result rendered absurd, in advance, every theoretical model of the nervous system and brain that has been proposed since.

We are now 175 years deep in this absurdity. It seems our nerves are simply too slow to enable us to think as fast as we do. No theory that attempts to explain how the nervous system works has ever broken past the problem of slow conduction. Von Helmholtz happened to measure a slow nerve – faster myelinated nerves can conduct an impulse at 265 mph. But this is not, obviously, the speed of light.
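To put rough numbers on the speed problem, here is a minimal sketch of the arithmetic. The two speeds are the ones quoted above. The one-meter path length is an assumption chosen only for illustration; it is not a measurement of any particular nerve.

```python
# Unit conversion and conduction delay for the two speeds quoted in the text.
MPH_PER_MPS = 3600 / 1609.344  # miles per hour in one meter per second


def mps_to_mph(speed_mps):
    """Convert meters per second to miles per hour."""
    return speed_mps * MPH_PER_MPS


def mph_to_mps(speed_mph):
    """Convert miles per hour to meters per second."""
    return speed_mph / MPH_PER_MPS


def conduction_delay_ms(path_m, speed_mps):
    """Time in milliseconds for an impulse to travel path_m meters."""
    return 1000.0 * path_m / speed_mps


PATH_M = 1.0  # assumed path length, for illustration only

slow_mps = 27.0               # von Helmholtz's published figure
fast_mps = mph_to_mps(265.0)  # the fast myelinated figure quoted above

print(f"27 m/s is {mps_to_mph(slow_mps):.1f} mph")
print(f"265 mph is {fast_mps:.1f} m/s")
print(f"slow nerve, {PATH_M} m: {conduction_delay_ms(PATH_M, slow_mps):.1f} ms")
print(f"fast nerve, {PATH_M} m: {conduction_delay_ms(PATH_M, fast_mps):.1f} ms")
```

Over the assumed meter of nerve, the slow figure costs roughly 37 milliseconds and the fast one roughly 8, before any waiting or processing is counted.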

The speed problem is not mentioned much. Granted, it is a weirdly subjective problem (What is a thought? How fast can it go?) but the brain is so big and its wiring is so very slow that it doesn’t make sense to ignore the issue.

The second major mystery — how nerves convey information — was thought to have been solved in 1926. It was concluded then that nerve impulse trains are frequency modulated. But as a result of studies on fast flying bats, the solution came unraveled in 1995, when it became evident that just one single nerve impulse, or spike, could somehow inform the brain. One spike is enough — and clearly there is no way to frequency modulate a single spike.

The bat and insect studies were accumulating in the literature from the early 1990s. Around 1995 a widening awareness of their impact on neuroscience finally crystallized into a sharp realization: We no longer understand how nerves communicate, and in fact we never did.

In the years since 1995-6 we have seen a flowering of fresh hypotheses about how a nerve might communicate, based on various rival and overlaid neural coding schemes — but there is no decisively proved explanation. It is a wide open question.

The question is explored in this chapter. One answer will be suggested in the next chapter, The Corduroy Neuron.

The All-or-None Principle

Suppose we start at the beginning. Here is a link to a superb animation of the vigorous electro-mechano-chemical process that underlies the nerve impulse; and to another site that shows how this process looks when it is monitored electrically, using an electrode positioned at a single point. This is the typical image we are presented of an action potential on a nerve fiber — frozen into a graph of voltage measured at a point versus time.

In addition, here is an explanation of the nerve impulse I found on the net, possibly a lecture from a high school biology course.

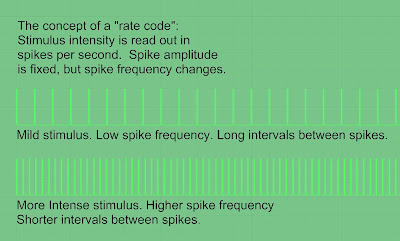

“All action potentials look the same in every neuron. The spike is always +120 mv and it always occurs in an all-or-none fashion, meaning that either a full spike is triggered, or nothing. There is no such thing as half a spike! This is called the all-or-none principle. An axon is like a gun: it either fires or it doesn’t. Thus, because action potentials are always the same size, a single action potential by itself cannot convey any unique information. Rather, information is carried not by individual action potentials, but by the firing rate of the neuron – the number of action potentials per unit time.”

When the author says “because action potentials are always the same size, a single action potential by itself cannot convey any unique information” he is essentially saying that the impulse is not – and cannot be — amplitude modulated.

To students who encounter the all-or-none impulse for the first time, it is counter-intuitive. We expect to see a signal pass on the nerve fiber that is in some way analogous to the signal applied to the nerve ending as a stimulus. The first thing you might think of is that the gradations of stimulus applied at one end of the neuron could produce a graded range of pulse heights. Tiny stimulus, tiny nerve impulse. Big stimulus (hot stove) big impulse.

But in the 1840s and 1850s, the first physiologists who were able to study the impulse semi-quantitatively, with refined galvanometers, could already see that the nerve impulse’s height is fixed and locked. Amplitude doesn’t vary. (These earliest observers included von Helmholtz and his close friend and colleague Emil du Bois-Reymond, the discoverer of the nerve impulse. Du Bois-Reymond was a Prussian with a French name, of Swiss and French descent.)

It seems to follow that since the nerve impulse’s amplitude is fixed, the gradations of stimulus intensity must be coded in some other way.

Firing rate?

Firing rate, it says here in italics, and this was the accepted view for about seven decades, up until about 1995. It is what I was taught, and what most biological scientists and physicians have been taught since Edgar Adrian launched the idea in the 1920s. It is worth reviewing because the concept of a firing rate code is still very much a part of the culture.

After WWI, at Cambridge, Adrian applied the then-new technology of the vacuum tube amplifier to the problem of measuring the electrical activity of the nerve. A one-tube triode amplifier could not provide enough gain, but by using a second tube to amplify the output of the first — and then a third tube to amplify the output of the second — Adrian achieved a total signal gain of 1850-fold. Thus equipped, he went hunting for the tiny impulses produced by a single sensory nerve ending.

In November, 1925, Adrian and his Swedish colleague, Yngve Zotterman, finally succeeded in isolating signals transmitted on a single afferent neuron from a frog muscle stretch receptor. To experimentally vary the intensity of stimulus, they used a series of progressively heavier weights to tension the muscle.

They did not have an oscilloscope. To display the amplified nerve signals, they used instead a capillary electrometer, a device in which a column of mercury elevators up and down as a function of applied voltage. They recorded the rise and fall of the mercury’s meniscus with a movie camera.

A firing rate code is what Adrian and Zotterman recorded on film and subsequently published in the April, 1926 Journal of Physiology.

Here is the basic principle. A mild stimulus produces pulses that are spaced well apart. An intense stimulus produces closely spaced pulses. To get an intuitive feel for rate coding, one can hook the output of the amplifier to headphones. A mild stimulus produces a pop-pop-pop. An intensifying stimulus accelerates the distinct pops to popity-pops and then, ultimately, machine gun fire. In Adrian’s 1926 papers he reported pulse frequencies ranging from resting activity of 5 impulses per second up to stimulated neurons firing 200 spikes per second.
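The principle can be sketched in a few lines. The 5 and 200 impulses per second are the figures quoted above from Adrian's 1926 papers. Everything else is an assumption made for illustration: the normalized intensity scale from 0 to 1, the straight-line mapping between intensity and rate, and the perfectly even spacing of the pulses. Adrian's data do not dictate any of these choices.

```python
# Toy rate coder: a constant stimulus becomes an evenly spaced pulse train.

def firing_rate_hz(intensity, rest_hz=5.0, max_hz=200.0):
    """Map a normalized intensity in [0, 1] to a firing rate.

    The linear map is an assumption, not a claim about real receptors.
    """
    clamped = min(max(intensity, 0.0), 1.0)
    return rest_hz + clamped * (max_hz - rest_hz)


def spike_times_s(intensity, duration_s=1.0):
    """Spike times, in seconds, within [0, duration_s) for a steady stimulus."""
    interval = 1.0 / firing_rate_hz(intensity)
    # The small epsilon guards against floating point rounding in the division.
    count = int(duration_s / interval + 1e-9)
    return [k * interval for k in range(count)]


mild = spike_times_s(0.0)     # widely spaced pops
intense = spike_times_s(1.0)  # machine gun fire

print(len(mild), "spikes in one second for the mild stimulus")
print(len(intense), "spikes in one second for the intense stimulus")
```

Note that every spike in either train is identical. Only the spacing differs.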

The rate code is not the headline topic in Adrian & Zotterman’s paper. Adrian seems unsurprised by it. He explains it in just a few paragraphs, beginning on page 157, as a function of the neuron’s refractory period.

As an undergraduate he had done experiments to reconfirm the all-or-none principle, so he knew well that amplitude modulation was out of the question. He remarks on page 165 that given the fixed amplitude of the all-or-none nerve impulses, “it has often been pointed out” that frequency modulation was one of just two possibilities for communicating graded (analog) information to the central nervous system. The other recognized option in 1926 was the successive or additive recruitment of other nerve fibers. (A modern variation on this long lost alternative scheme, in which multiple channels were used to communicate analog information, is discussed in Chapter 2.)

Apparently in Adrian’s view, he did not discover or invent the notion of a frequency modulated neuron. He thought he had confirmed it. Adaptation appears to have interested Adrian more than the firing rate code. The rate-coded pulse streams were, in essence, early reports. Over time, given a constant (boring) stimulus, the nerve adapted and fired less frequently. Some nerves adapt very rapidly. In his 1928 book, The Nature of Sensation, he remarks on how important it was, in detecting the rate code, that they chose to study a specialized receptor in the frog muscle. This receptor is slow to adapt, so they had a 20 second window of fairly abundant firing.

In some single nerves, he remarks, he might have seen only 1 to 3 impulses go by before the nerve adapted. This would have made it difficult to reach or support with sufficient data his conclusion that firing rate is a function of stimulus intensity. The worst experimental choice would have been a skin receptor, which he says might fire 10 impulses in a tenth of a second, and then adapt. In general, adaptation made it difficult for Adrian to demonstrate that the neuron is frequency modulated, but he viewed it as an interesting phenomenon in its own right.

At right is an image of a 1925 Marconi DE 6 vacuum tube, standing on its four pins. Edgar Adrian used a trio of DE 5b triodes in his 3-stage amplifier in 1925 and 1926. Photo courtesy of the John D. Jenkins Collection.

From a historical point of view, the rate code Adrian imputed to the amplified nerve impulses he and Zotterman saw became his great legacy. Adrian first reported in 1926 that the neuron is a frequency-modulated device, and this was taught to subsequent generations for seven decades. A low intensity stimulus was encoded as a low frequency pulse train. A higher stimulus intensity was encoded as a higher frequency pulse train. In all those years, it doesn’t seem to have occurred to anyone that Adrian’s results might be, or should be, interpreted in any other way.

Implications

According to Adrian’s hypothesis, the frequency of the pulse train is analogous to the intensity of the stimulus. This means at least two spikes must be launched up the nerve cell to convey a nugget of information to the brain. The interval of time elapsed between the firing of the first spike and firing of the second spike is critical. A short interval reports a high intensity stimulus. A long interval reports a low intensity stimulus.
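Here is a hedged sketch of what reading such a code would involve. The function name, the normalized 0-to-1 intensity scale and the linear mapping between the 5 and 200 impulses per second mentioned earlier are all assumptions for illustration. The point is only structural: the reader needs two spike times before it can say anything.

```python
# Toy decoder for an interval code: two spike times in, one intensity out.

def interval_to_intensity(t_first_s, t_second_s, rest_hz=5.0, max_hz=200.0):
    """Estimate a normalized intensity from one inter-spike interval.

    Assumes a linear relation between firing rate and intensity, which is
    an illustrative choice and not something the source establishes.
    """
    interval = t_second_s - t_first_s
    if interval <= 0:
        raise ValueError("the second spike must follow the first")
    rate_hz = 1.0 / interval
    intensity = (rate_hz - rest_hz) / (max_hz - rest_hz)
    return min(max(intensity, 0.0), 1.0)


# A long interval (200 ms) reads as a low intensity stimulus.
print(interval_to_intensity(0.0, 0.200))
# A short interval (5 ms) reads as a high intensity stimulus.
print(interval_to_intensity(0.0, 0.005))
```

There is no sensible way to call this function with a single spike time, which is the difficulty the rest of this chapter turns on.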

How is the code decoded? At the end of the axon there is simply another nerve. In the dendrites of this next nerve in line, the incoming pulses will be accumulated — summed to a certain voltage level. If the incoming impulses arrive frequently, the voltage will rise. If incoming impulses arrive only occasionally, the voltage will remain flat or slide downward. The input end of the next nerve is essentially a frequency to voltage converter.
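A frequency to voltage converter of this kind can be sketched as a leaky sum. This is a cartoon, not a model of a real dendrite: the 1 mV contribution per impulse and the 50 millisecond decay constant are hypothetical values picked only to make the effect visible.

```python
import math

# Toy frequency-to-voltage converter: each incoming impulse adds a fixed
# step that then decays exponentially. step_mv and tau_s are assumed values.

def summed_voltage(spike_times_s, t_end_s, step_mv=1.0, tau_s=0.05):
    """Accumulated voltage at t_end_s from all impulses that arrived by then."""
    return sum(
        step_mv * math.exp(-(t_end_s - t) / tau_s)
        for t in spike_times_s
        if t <= t_end_s
    )


frequent = [k * 0.005 for k in range(200)]  # 200 impulses per second
occasional = [k * 0.200 for k in range(5)]  # 5 impulses per second

print(f"frequent input:   {summed_voltage(frequent, 1.0):.2f} mV")
print(f"occasional input: {summed_voltage(occasional, 1.0):.2f} mV")
```

With frequent arrivals the steps pile up faster than they decay and the voltage rises. With occasional arrivals each step has mostly leaked away before the next one lands.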

However, this next neuron will receive impulses from many different nerves. As the incoming impulses are pooled, melded, specific information about their anatomical points of origin is lost. So some processed information about a collection of stimuli has progressed toward the brain — but there is no way for the brain to map where each of the original stimuli came from, nor to guess which stimulus produced the strongest (most urgent) signal.

In other words you must add, to the dead slow transmission speed of the impulses, the waiting time between impulses, and the processing time it takes to extract the analog information from successive time intervals. Plus some sort of feedback process, perhaps, to help the brain reconstruct a sense of the anatomical source of the signal. If the sensory stimulus is changing, one interval will not be enough. You will have to measure a succession of them to describe the change to the brain, using a buffer to create a moving average. Then, for a sense of the system’s (hopeless) overall efficiency, multiply the transmission time and computational overhead by the number of nerves we happen to have. About 100 billion.
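The buffering step might look something like the sketch below. The window of four intervals is an arbitrary assumption, and nothing here is meant as a claim about how any real neuron does it. It only shows the bookkeeping that a moving average over intervals implies.

```python
from collections import deque

# Toy moving-average rate estimator over the most recent inter-spike intervals.

def moving_average_rate(spike_times_s, window=4):
    """Return (time, estimated rate in Hz) pairs, one per completed interval.

    `window` is the assumed buffer length, in intervals.
    """
    recent = deque(maxlen=window)  # oldest interval drops out automatically
    estimates = []
    for previous, current in zip(spike_times_s, spike_times_s[1:]):
        recent.append(current - previous)
        estimates.append((current, len(recent) / sum(recent)))
    return estimates


# A stimulus that intensifies partway through: intervals shrink from
# 100 ms to 50 ms, and the estimate follows only gradually.
train = [0.0, 0.1, 0.2, 0.25, 0.3]
for when, rate in moving_average_rate(train):
    print(f"t = {when:.2f} s  estimated rate = {rate:.1f} Hz")

# A single spike completes no interval, so there is nothing to report.
print(moving_average_rate([0.5]))
```

The estimate lags the stimulus by design, which is one more delay to add to the list.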

How do you like it so far?

Maybe not the way you or I would design the nervous system, but this was for many years, and to some degree still is, the textbook basis of the science of neurophysiology. All-or-none spikes. Intervals as information.

Edgar Adrian won the Nobel for his work. He was made a Baron. He was an elegant man with a powerful intellect who liked to ride a bicycle to the lab. His work was so solid, and so widely accepted, that it was not until the 1990s that anyone went back to take a second look. And, as dramaturgy might require, on closer examination it turned out Adrian was wrong. His facts were in good order, but his hypothesis, the idea that stimulus intensity is encoded in the neuron’s firing rate, isn’t true.

How fast can a bat make up its mind?

With its refined sonar system, a speeding bat can detect an obstruction and swerve to avoid it in a split second. Experiments show the bat has time to process only one spike, or nerve impulse, in the available time window. In this type of experiment the sonar ping and the bat’s sudden evasion establish an allowable time window for the transmission of nerve impulses along any and all transmission lines. The experiment does not imply that a single spike on a single neuron is responsible for the bat’s quick decision. It simply concludes that there is only enough time available to transmit, along whichever and however many neurons, a single spike.

This is remarkable, but it defies explanation in terms of Adrian’s long established (circa 1926) idea that the nervous system encodes sensory information as a function of time intervals between spikes. It takes two spikes to open and close a time interval. For the hurrying bat, using just one spike (on a given neuron) to make its decision, there exists no apparent interval to measure.

One spike is enough

Somehow, a single spike is conveying information to the brain. This surprising news was revealed not only in bat studies, but also in insect and other animal studies reported beginning in the early 1990s. The results are summarized in the now classic book “Spikes,” by Fred Rieke et al., and it is here that you can find a wonderful, almost archaeological re-examination of the original Adrian apparatus and results.

Note that the results of the bat experiments were not just an oddment of zoology.

An existential chasm was opening at the feet of neuroscience. At a very late date, the mid-1990s, the fundamentals were being called into question. The nerve impulse is not amplitude modulated, an ancient given, okay. But now we learn streams of impulses are not frequency modulated, either. What’s left? Phase modulation? How does the neuron actually work? What does it do?

Existential chasms don’t last very long. Nature abhors them. Science abhors them. Band-Aids appear, are passed round. Solutions are wrestled into place, continuity restored.

So in the mid-90s, the old rate code lost credibility. The book “Spikes” is an effort to answer the question: if the firing rate code has failed us, then what is the real code? The question of course takes a huge step toward its own answer, in that it assumes there must be an alternative code.

The assumption is logically flawed because it excludes the possibility that no code exists. Because from inspection, it would indeed seem there might be no code at all. A single spike conveys information. The right question to ask is just, “How?”

The workaround

To reiterate, it takes two spikes to open and close a time interval. For the hurrying bat just one spike is available to inform its decision. This shows that Adrian’s concept of a rate code, in which information is conveyed by a frequency modulated stream of impulses, cannot explain the bat’s superquick maneuver. One spike does not constitute a stream.

If you take the experimental result at face value, then that one spike must be conveying information to the brain. But in 1995, almost no one wanted to take the experimental result at face value, or to even confront this as a possibility.

There was an easy workaround. The trick was to remark that for the hurrying bat, with just one spike in passage, there exists no apparent interval to measure. Implying the existence, offstage left, of a second spike or reference point.

Here is the origin of a big, comforting, and perfectly circular assumption. It was and is reasoned that one spike is not enough, since this (actual, but surely only apparent) result would be impossible to fit into the logical context of contemporary neuroscience. Therefore we are supposed to assume there exists a second spike somewhere, or some sort of reference peg.

Given this assumption one can, in many and various ways, try to salvage the idea of a time interval as information.

For example, a measurable (by the brain) interval might exist between the detected spike and some other spike coursing down some other neuron. An interval or relationship might exist between the detected spike and some pre-set condition – a cock and fire model.

The injection of a synch pulse

A similar, by now quite popular alternative is the idea, familiar from electronic pulse circuit engineering, that there is a reference pulse being injected into the system. An injected reference pulse is sometimes called a clocking or synch pulse. The synch pulse is generated periodically (that is, with perfect periodicity) by an oscillator. In electronic pulse circuits the oscillator is stabilized with a quartz crystal, so that it keeps perfect time.

In this scheme of things, the bat bases its decision to swerve on a spike that only seems solitary, to the experimenter — but really isn’t alone after all — because it appears, at some measurable time interval, before or after the clockwork-regular appearance of a synch pulse emitted by the bat’s system clock.

Whew.

So there are really two pulses after all, the spike and the synch. And an interval therefore exists between them.
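In code, the synch-pulse idea reduces to measuring where a lone spike falls within a clock cycle. The sketch below is only an illustration of that bookkeeping. The 25 millisecond clock period, the function name and the example spike time are hypothetical, and no claim is made that any published model works exactly this way.

```python
# Toy synch-pulse scheme: a solitary spike is read against an assumed clock.

def latency_after_synch_s(spike_time_s, clock_period_s, clock_start_s=0.0):
    """Time elapsed between the most recent synch pulse and the spike.

    The clock is assumed to tick with perfect periodicity starting at
    clock_start_s, which is exactly the assumption the scheme depends on.
    """
    elapsed = spike_time_s - clock_start_s
    if elapsed < 0:
        raise ValueError("the spike precedes the first synch pulse")
    return elapsed % clock_period_s


CLOCK_PERIOD_S = 0.025  # hypothetical period, chosen for illustration

spike = 0.112  # one solitary spike, 112 ms after the clock started
latency = latency_after_synch_s(spike, CLOCK_PERIOD_S)
print(f"spike arrives {latency * 1000:.1f} ms after the last synch pulse")
```

The interval is recovered, but only because the clock was written into the function's arguments.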

This is a nice idea. It was perhaps most eloquently expressed by John Hopfield, in a 1995 paper modeling the olfactory system.

But it is hard not to notice that what’s actually happened here is: Ill fortune has snatched away from us the good old Adrian firing-rate code, in which the interval between successive nerve impulses was everything. And yet here it is back again, in slightly different form – an interval to be measured between two pulses. One pulse is produced by a nerve. The other pulse is produced by a hypothesis.

In this way, the laboratory results of 70-odd years of neuroscience — momentarily at risk to that speeding bat — have been preserved almost intact.

The application of the principles of pulse circuit timing to the nervous system is now quite fashionable, and experiments have confirmed the basic idea. One hears the confident cliché: “it’s all in the timing.”

I find I don’t believe this stuff. I don’t doubt the experimental observations are accurate. I just think they can probably be interpreted in some more interesting way.

The idea of building clocks into the nervous system has always been attractive. In this most current iteration of the idea, the clocked system has a nice economy of motion. Each interval between pulses has acquired more importance. In the old days, someone (the investigator or the brain) could extract the meaning of a firing rate code by averaging the firing frequency over time.

The time-averaging process burned up a lot of time in an already slow transmission system. In the new, post 1995 paradigm, the information communicated by just a single time interval, between two impulses, can be noted and acted upon. Averaging is over.

So the new neural codes appear to involve less signal processing than the old Adrian firing rate code, and in this sense they seem to have made us, theoretically, smarter morons. Any two successive impulses can convey meaning to the brain. Even a single impulse, provided it is paired with a clock pulse, can convey meaning to the brain. Good.

The trouble is, and has always been, that the single impulse is only corking along at 265 mph, max.

How to think slowly

Neurons are not wires. In a silver wire, an electrical signal moves at close to the speed of light. In a fast nerve axon, a nerve impulse moves at a top speed near 265 mph, but the average conduction speed is half of that. In the body and brain, billions of these impulses are creeping through complicated networks of billions of cells. So the rhetorical question is and remains: How can we possibly think as fast as we do?

One stock answer is complication. The nervous system and brain are very complicated, voila. Somehow complication and cellular diversity are supposed to make up for the dead-slow conduction of the nerve impulse. One could argue that complication would only aggravate the problem, but never mind, there are other solutions.

One is parallel architecture. This is probably the most promising type of explanation. Yes, a bit-parallel computer could be very slow yet very smart. But note that parallel encoding requires synchronization and that, in turn, requires supremely accurate clocking. A temperature stabilized quartz crystal.

In fact most answers to The Large Questions about how the nervous system might actually work seem to converge, lately, on solutions that require the physiological equivalent of a quartz crystal, um, a device we don’t often come across in biochemistry. There exist nevertheless lots of biological clocks and cycles in nature, so maybe there is something to it.

Or maybe not. Timing theories are admirable constructs, intellectually, but they carry a considerable burden of clocking and processing overhead.

To be fair…

I am inclined to doubt that a neural code exists, and so the advocacy of some one neural coding hypothesis as supreme among the many different coding hypotheses strikes me as a futile exercise.

However, I am as willing to be wrong as the next person, and one should try to sample the whole range of these ideas and controversies. Withal, it is quite an industry. Here are some access points to the literature.

Try Gerstner and Kistler, here, and in their 2002 book, here. Codes are imputed to multiple, as well as single neurons, and here is an interesting review that talks about these approaches. Here is a slide presentation that identifies various points of view. And here is a site with some good comparative graphic images of various coding schemes.

In addition, here are two samplings of opinion about neural codes, taken about a decade apart. The first was written circa 1995, when the failure of Adrian’s firing rate code was still fresh news. The second appeared nine years later in the popular magazine Discover in late 2004.

There still doesn’t seem to be any clear progress. A definitive alternative to Adrian’s rate code is still unfound — a vacuum persists, a hole that suddenly popped open in our understanding of what nerves do.

According to Christof Koch of Caltech, “it’s difficult to rule out any coding scheme at this time.”

The possibility that no code exists is not mentioned.

There is no very satisfying answer.

Sooner or later it will strike you that neuroscience, formerly neurophysiology, has failed to explain the neuron.

It is a field that was founded in the 18th and 19th centuries, and much elaborated in the 20th, upon the careful measurement of the electrical properties of nerves and on the application of biophysics and electrochemical techniques to studies of ion movements through the membrane.

Perhaps for this reason this science still has a cultural bias, a standing intuition that nerves are to be understood as physical devices outfitted with interesting biochemical accessories. In fact nerves are profoundly, completely, utterly biochemical. What else could they be?

It would be my guess that a better understanding of the biochemical structure of ion channels will point to a more biologically realistic solution — a new and better picture of how the neuron works. From this would surely follow a more realistic picture of how the brain works.

Frances M. Ashcroft’s book, Ion Channels and Disease, is a current and broad survey of ion channels. The key to the neuron problem is probably in her book somewhere, or is referenced in it, and is waiting to be discovered.

I would pay particular attention to any type of evidence for linkage, structure or signaling between “individual” ion channels. Linkage between discrete trans-membrane ion channels could create a longitudinal channel running the length of the nerve, probably many of them. A multi-channel axon – a cable rather than a wire — would be one possible solution to the persistent mystery of how a single all-or-none impulse can be freighted with graded (analog) information.

How important was that speeding bat?

The radical notion that a single nerve impulse can convey information to the brain arises directly from the bat and insect experiments begun in the early 1990s. It would not be surprising if those experiments turn out to be the Michelson-Morley of neuroscience.

If you explain the bat’s solitary, yet meaningful impulse by injecting a synch pulse, or by invoking one of the more elaborate hypotheses based on clocks and timing, then you can, no doubt, explain away the problem.

If you simply reserve judgment, however, and refuse to exclude the possibility that the experimental result might be taken at face value — then you can keep in clear view what is probably one of the pivotal discoveries in human history:

One spike somehow conveys, all the way to the brain, information that is analogous to the intensity of the stimulus.

This changes everything. It opens up, for the first time since 1849 really, the possibility that the brain is a machine we can understand.