Photo courtesy of Guy Meagher

Let’s suppose the brain devolved from and still works rather like an eye. What structure in the brain could upload and store the information contained in a single photoreceptor? What is the meaning of arborization?

Chapter thirteen

The mind as an eye

The natural history of visual memory

It has been at least 65 years since anyone seriously thought about human visual memory as a store of images, rather than as a repository for extracted features. So in asking how images might be stored and remembered, we are moving sharply away from the standard story. The issue was argued in Chapter 12.

But there were eyes long before there were images, and probably there was a visual memory as well.

The eye matured before the brain, and let’s guess that in the early going, the eye alone was functioning as a brain sufficient unto itself. The eye had some built-in capacity to sample, shift and hold an input, maybe by storing just one single frame snatch, in order to notice, through comparison or overlay, what had changed in the state of the world in the interval before the next frame snatch arrived. We are using the word “input” rather than “image”, since for a directional eye, the input would simply be a ray of light.

There was not enough hardware in the retina to store a long succession of past impressions, but memory proved useful, and so over time the brain evolved as a storage warehouse — a separate place to stack the incoming streams of 3-dimensional snapshots from the eyes. This does not necessarily mean 3-D images, although it could. In every case it means data captured in depth along the z-axis of each photoreceptor’s outer segment.

In this version of history, the brain evolved as a child of the eye, rather than the other way around. This did not have to happen – this offloading of visual memory and visual logic to a brain. An alternative path would be an extreme local elaboration of the eye.

Photo by Jean-Michel Peers

Birds, with their remarkable intelligence, may have taken this other path, for in birds it is the eyes, rather than the cerebral hemispheres, that have become exaggerated structures that fill the skull.

What is novel here? The brain and the eye are the same thing, and the tradeoff between these two distinct yet perfectly unified components has long been remarked. The eye is typically presented as "part of the brain," but one can just as easily say the brain is part of the eye. The intelligent eye, notably in the frog, was the subject of one of the most cited papers in history, "What the Frog's Eye Tells the Frog's Brain," by Jerome Lettvin et al. There is a well-worn classroom wisecrack about the retina vs. the brain, to wit: the dumber the animal, the smarter its retina. The idea that the eye has an intelligence, the equivalent of logic circuits, has long been accepted.

What’s new here is the idea that the eye has or once had a memory — and that the photoreceptors were the first memory organs.

Granted this supposition, one can try to guess the rest: A retinal photoreceptor memory starts out as a feature of an evolving photoreceptor, but it quickly runs out of storage space. When this memory is made remote from the photoreceptor, off-loaded into the brain, the photoreceptor mechanism still specifies in detail the structure and mechanics of visual memory. This is because the visual memory in the brain must mimic the retina of the eye in order to surface a remembered image into our conscious thoughts.

In other words, the visual memory organ of a modern brain is functionally analogous to a photoreceptor. Moreover, the integration of a memory is analogous to the integration of an image formed by light on the retina.

Early memory

How is visual memory recorded? It is usually assumed that the eye transmits to the brain streams of nerve impulses that report samplings of the present moment. It is the brain’s job to make a memory from these real-time signals.

But let’s suppose the eye has its own separate and distinct memory. If the eye is a recording machine, then it is actually a memory – an instant replay rather than a live broadcast — that is being delivered via the optic nerve to the brain.

This type of memory is called sensory memory. It is basically a buffer memory associated with a sensory organ or, to narrow it down for this hypothesis, a memory stored within a sensory cell. Its purpose in a photoreceptor would be to compare two moments in time – the Now vs. the Just Now. Suppose the image presented for Now is a shark. If you could compare it with a remembered image snapped Just Now of the same shark, you would know whether the shark were coming toward you or going away. For a primitive animal, the ability to compare two visual impressions slightly separated in time would confer a huge survival benefit.
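A toy sketch of the Now vs. Just Now comparison. It assumes that each frame is a small grid of light intensities and that "apparent size" is simply the number of cells brighter than an arbitrary threshold. Both are assumptions made for illustration and are not part of the model. A target that grows between the two frames is read as approaching.

```python
# Toy frames: 2-D lists of light intensities (hypothetical values in 0..1).
Frame = list[list[float]]

def apparent_size(frame: Frame, threshold: float = 0.5) -> int:
    """Count cells brighter than an arbitrary threshold: a crude 'size' of the target."""
    return sum(1 for row in frame for value in row if value > threshold)

def compare_now_just_now(just_now: Frame, now: Frame) -> str:
    """Growing apparent size suggests approach; shrinking suggests retreat."""
    before, after = apparent_size(just_now), apparent_size(now)
    if after > before:
        return "approaching"
    if after < before:
        return "receding"
    return "holding distance"

just_now = [[0, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 0, 0, 0]]
now = [[0, 1, 1, 0],
       [1, 1, 1, 1],
       [0, 1, 1, 0]]
print(compare_now_just_now(just_now, now))  # approaching
```

A real sensory memory would need to hold `just_now` somewhere. In this chapter, that somewhere is the photoreceptor itself.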

It is easy to see that a photoreceptor cell might serve wonderfully as a recording machine, because light induces decisive biochemical changes in a disk’s cache of rhodopsin. These changes linger – it takes time for rhodopsin to regenerate, or re-cock.

So inside a rod or cone there are indeed persistent biochemical changes in response to a change in the world. This is a familiar textbook definition of memory – a biochemical change in response to experience. We are trained to ascribe such changes (in the very next breath) to the synapse. But let’s stay clear of the synapse for once and experiment with the idea that the earliest visual memory was written as a chemical and conformational change in the rhodopsin arrayed in a photoreceptor’s disks. Note that a rod might comprise 1500 disks, so there is plenty of excess disk capacity that might be used for visual memory storage.

A photoreceptor memory would be “early memory” in two senses. In a modern animal it could be the first record of an image from the world. In a very primitive but sighted animal it could constitute the whole memory – evolved long before the rest of the brain augmented the eye.

The role of standing waves in photoreceptor memory

The “thing to be remembered” is not just a flat pixel — not just the presence or absence of light at the outer segment of a photoreceptor. It is the presence or absence of light at specific points along the z-axis of the photoreceptor, captured at two different times, that could make these samplings truly meaningful.

Beads of light are stacked inside the outer segment. They stretch out and contract in response to changes in the world. It is the pattern of beads, or the information that can be extracted from this pattern, that constitutes a useful memory.

Because of the way information is detected and recorded from a standing wave, standing wave theorists have proposed that the photoreceptor might capture color as a peak-to-peak or null-to-null or peak-to-null distance along the z-axis of the photoreceptor. To review the concept of photoreceptors as wave detectors, see Chapter 8, Rods and Cones as Wave Detectors. The chapter includes references to the unexpected discoveries of action potentials in human rods and, subsequently, in human cones.
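The arithmetic behind "color as a peak-to-peak distance" can be sketched directly. In an idealized standing wave formed in a medium of refractive index n, intensity peaks recur every λ/(2n). The sketch below marks the disk nearest each peak and then inverts the pattern to recover the wavelength. Several values are assumptions, not figures from the text: the refractive index (1.41), the 30 nm disk spacing, and an antinode at z = 0. The 1500-disk count is the figure used in this chapter.

```python
# Sketch: which disks would sit on the intensity peaks of an idealized standing wave?
# Assumptions (not from the source): refractive index, disk spacing, antinode at z = 0.
N_INDEX = 1.41          # assumed refractive index inside the outer segment
DISK_NM = 30.0          # assumed center-to-center disk spacing, in nm
NUM_DISKS = 1500        # disk count used in the text

def peak_spacing_nm(wavelength_nm: float) -> float:
    """Intensity maxima of a standing wave recur every lambda / (2n)."""
    return wavelength_nm / (2 * N_INDEX)

def bleached_disks(wavelength_nm: float) -> list[int]:
    """Indices of the disks nearest each intensity peak along the z-axis."""
    spacing = peak_spacing_nm(wavelength_nm)
    length = (NUM_DISKS - 1) * DISK_NM
    n_peaks = int(length // spacing) + 1
    return sorted({round(k * spacing / DISK_NM) for k in range(n_peaks)})

def wavelength_from_disks(disks: list[int]) -> float:
    """Invert the pattern: mean peak-to-peak distance (in disks) back to a wavelength."""
    mean_gap = (disks[-1] - disks[0]) / (len(disks) - 1)
    return mean_gap * DISK_NM * 2 * N_INDEX

green = bleached_disks(500)
print(green[:5])                            # [0, 6, 12, 18, 24]
print(round(wavelength_from_disks(green)))  # 500
```

Averaging over many peaks is what makes the recovered wavelength accurate. A single peak-to-peak gap is quantized to whole disks.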

The structure of a photoreceptor memory


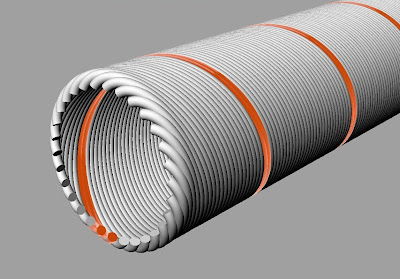

This photoreceptor is an example of the multichannel neuron first presented in Chapter 2 on The Corduroy Neuron.

Below is a schematic representation of a multichannel photoreceptor's outer segment. It could be a rod or a cone. A single helical output channel is marked in orange on the cell membrane – one of hundreds. These 300-odd channels wrapped around the outer segment are roughly analogous to the dendrites of a conventional neuron.

Illustrated below is the same outer segment with the membrane subtracted. The single channel is left in view to indicate how the disks relate to an output channel.


How to connect the helix to the disk or disks? One could imagine the assignment of a single channel to a single disk. One might also visualize an array of disks, positioned periodically to capture a particular wavelength — reporting that wavelength via a single output channel. An initial exposure to a standing wave will seriously photobleach only a few disks marking the intensity peaks of the standing wave. In this figure, photobleached disks located at intensity peaks are indicated in yellow.

The next, novel exposure to light may bring a signal of a different wavelength, and thus make an impression on a different set of unbleached disks.


Therefore a linear peak-to-peak measurement could also be made along the z-axis between a newly impressed wavelength pattern (in green, below) and a persistently bleached set of disks that were excited by light received 1 second ago – leftover hot points.

In effect, inside a rod or cone, one can make a linear, z-axis measurement between the light of the present and the light of the past. In the simplest case, this measured distance would tell the creature about a change in wavelength (color). Recall the radar eye suggested in Chapter 9 on Eye Evolution. A continuous change in color tracks the passage of a target object across the animal's field of view. The delta wavelength, frame to frame, tells the animal which way the target is moving. If the change is clocked (if indeed the primitive eye had a built-in clock), the animal could sense how fast the target is moving.
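A sketch of the frame-to-frame comparison, under the same assumed standing-wave constants (n = 1.41, 30 nm disk spacing; both hypothetical). The persisting "leftover hot points" and the newly impressed pattern each yield a mean peak spacing. The sign of the wavelength difference says whether the light is getting redder or bluer, and a clocked interval turns the difference into a rate. The step from color change to target direction belongs to the radar-eye hypothesis and is not modeled here.

```python
# Sketch: compare a persisting (Just Now) bleach pattern with a new (Now) one.
# Assumed constants, as in a standing-wave model with peak spacing lambda / (2n).
N_INDEX = 1.41   # assumed refractive index of the outer segment
DISK_NM = 30.0   # assumed disk spacing in nm

def wavelength_from_disks(disks):
    """Mean peak-to-peak distance along z, converted back to a wavelength in nm."""
    disks = sorted(disks)
    mean_gap = (disks[-1] - disks[0]) / (len(disks) - 1)
    return mean_gap * DISK_NM * 2 * N_INDEX

def compare_frames(just_now, now, interval_s=None):
    """Return (trend, delta_nm, rate_nm_per_s); the rate needs a clocked interval."""
    delta = wavelength_from_disks(now) - wavelength_from_disks(just_now)
    if delta > 0:
        trend = "getting redder"
    elif delta < 0:
        trend = "getting bluer"
    else:
        trend = "unchanged"
    rate = delta / interval_s if interval_s else None
    return trend, delta, rate

leftover = [0, 6, 12, 18, 24]   # hot points bleached about 1 s ago
fresh = [0, 7, 14, 21, 28]      # newly impressed pattern
trend, delta, rate = compare_frames(leftover, fresh, interval_s=1.0)
print(trend, round(delta, 1), round(rate, 1))  # getting redder 84.6 84.6
```

Without a clock, `compare_frames` still reports the direction of the change but returns no rate. This mirrors the text's point that speed, unlike direction, requires clocking.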


A z-axis measurement could also be made between present and past intensity peaks out at the proximal end of the outer segment. The primitive animal observes the change in a standing wave pattern and mutters to itself: getting redder, getting brighter, and getting closer.

It would certainly take a clock to make this little memory machine facile. Interestingly, retinal ganglion cells have been shown to produce circadian signals, so a clock is a perfectly plausible component inside the retina. Note too that the pineal gland, which is a biological clock, essentially uses benighted photoreceptor cells as its escapement or, if you like, its ticker.

In a truly primitive system, however, the clocking of a frame shift could simply be accomplished by a change in the incoming light.

This is a most rudimentary sort of memory machine, in which rhodopsin is the recording medium. There are more elaborate ways to imagine such a device. For example, one could segment the rod into compartments, longitudinally, and assign to each compartment in rotation a captured moment in time — creating, in effect, a film strip.
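The film-strip variant behaves like a ring buffer. A fixed number of compartments is written in rotation, so the oldest frame is overwritten first and the strip always holds the most recent moments. The compartment count and the frame contents below are hypothetical.

```python
class FilmStrip:
    """Hypothetical outer segment divided into compartments, written in rotation."""

    def __init__(self, n_compartments: int):
        self.frames = [None] * n_compartments
        self.next = 0      # compartment that will receive the next frame
        self.count = 0     # total frames ever recorded

    def record(self, frame) -> None:
        self.frames[self.next] = frame
        self.next = (self.next + 1) % len(self.frames)
        self.count += 1

    def recall(self, frames_ago: int):
        """frames_ago = 0 is the most recent frame (Just Now)."""
        n = len(self.frames)
        if frames_ago >= min(self.count, n):
            raise IndexError("frame was never recorded or has been overwritten")
        return self.frames[(self.next - 1 - frames_ago) % n]

strip = FilmStrip(3)
for frame in ["a", "b", "c", "d"]:
    strip.record(frame)
print(strip.recall(0))  # d
print(strip.recall(2))  # b
```

With three compartments, frame "a" is lost once "d" arrives. This is the hard capacity limit a rhodopsin-based memory would face.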

But whatever sort of mechanism might be spun from the idea, the basic principle is constant. Disks are bleached by light of a particular wavelength at z-axis positions that are defined by that wavelength.

A change in these z-axis positions induced by a change in the incoming light (e.g., in its position or wavelength) can be detected. This is because the initial wavelength is preserved — marked and bracketed in effect — by persisting biochemical changes in the rhodopsin of the originally affected disks.

Transducin reads rhodopsin

There is a nice biochemical piste or trail of clues to follow here, since transducin, a G protein, is next in line after rhodopsin in the cone, and in the nocturnal biochemistry of the rod. If indeed the rod operates in broad daylight as a wave detector, it seems likely transducin or a related G protein is next to tumble after rhodopsin. In other words, transducin "reads" the light-induced change in rhodopsin, day or night. One must ask where that biochemical trail might lead in a photoreceptor (rod or cone) that exhibits nerve impulses as conceived in Chapter 3.

Rhodopsin is embedded in disk membrane. Transducin is shown anchored below it. From a Wikipedia illustration created by Devon Ryan.

The alpha subunit of rod transducin (in red) is only 79% homologous to that of transducin found in cones, which are certainly daylight receptors. But the rod and cone alpha subunits work the same way and are in fact interchangeable. The alpha subunit is the business end of transducin and the place to start. Downstream components in this cascade could mark a distinction between day and night chemistry, and between the lumped analog response described in texts and covert or measurable nerve impulses reporting to the brain on specific standing wave peak positions.

Transducin is intriguing for another reason. There is no obvious complement or antipodes to rhodopsin in the brain, in the visual cortex for example. But one might hope to discover, as a hint at the memory process, an analogy between the G protein step in the photoreceptor and a G protein coupled step far downstream, in a destination neuron deep in the visual memory of the brain.

G proteins are implicated in certain memory hypotheses I find impossible to credit — but it certainly makes sense that G proteins could be involved in remembering. Memory is hidden. G proteins are ubiquitous. From the movies, we have learned the best place to hide is in a huge crowd.

Why a brain?

Doubtless evolution could do a lot with the simple standing wave principle proposed for photoreceptor memory, and perhaps our own eyes can remember more than just one or two frames. But there are clear physical limits to the storage capacity of a photoreceptor memory based on rhodopsin. Hence, perhaps, the evolutionary pressure to create a brain memory as an annex to the eye.

In any event, the trick to modeling an eye memory, the essential quality that makes it possible to even conceive of such a thing — is multiple output channels that can report on light intensity, or pick a peak, at each photoreceptor disk. These multiple channels constitute the numbered increments of a z-axis yardstick.

At this point we can imagine the form of a visual memory to be remembered in the brain. It is a representation in the brain of a pattern of bleached disks — transmitted via the multichannel neurons of the optic nerve from not just one photoreceptor, but from 125 million parallel photoreceptors comprising a whole retina. This is a reprise of Jerome K. Jerome’s cherished 1889 notion of the retina of memory.

Before we venture into a guess at how this might work, suppose we jump from the photoreceptor all the way to the other end of the memory process, and ask what constitutes a memory stored in the brain in a multichannel nervous system.

In his book Mechanisms of Memory (Academic Press, 1967), the late E. Roy John neatly defined two different ways to model the spatial distribution of memory. Memory might be understood as:

1) “a thing in a place” or

2) “a process in a population”

In 1967, many neuroscientists viewed memory as "a thing in a place," that is, a single neuron or pathway – a so-called labeled-line memory. Hebb's 1949 model of memory as a grooved-in pathway was still taken literally. Hubel and Wiesel were in their heyday. One could single out with a fine probe a neuron in the visual cortex and attribute to it the power to distinguish a specific angle of orientation for an object in the animal's field of view.

If it were actually true, as it then appeared, that a neuron could be somehow pre-configured to watch for a specific edge angle, such as 41 degrees, then labeled-line memory must have seemed to make excellent sense. The concept of memory as a “reverberating circuit,” roughly analogous to the recirculating memory loops of early digital computers, was still an accepted idea. Another model represented memory as a specific line selected by a telephone switchboard. Connectionism was not yet a movement. It would be 15 years before Hopfield’s paper describing a neural network as a content addressable memory appeared in PNAS.

In a conversation in the late 1980s, E. Roy John referred to the believers in memory as a thing in a place as “the labeled-line guys.” He thought they were utterly wrong. He emphatically preferred to model the memory as a “process in a population.” By “process” he meant a statistical process.

E. Roy John was a brilliant iconoclast, and at the time he wrote that book he was way out in front — and largely alone. But today, 57 years along, cognitive science absolutely agrees with his notion of memory as “a process in a population” as exemplified by neural nets. The idea that vertebrate memory might be modeled as “a thing in a place” seems to have receded with the ascent of neural nets, but the conflict between “place” and “process” still has echoes today in models of sensory systems. Here’s one example pertaining to taste, and another on itching.

The prevalent view of vertebrate memory is as a process in a population, but it is believed that changes at the synapses (which are places) influence the pattern of that process.

The atom of memory

Alors. Maybe it’s time for another swing of the pendulum, another total reversal.

In a nervous system constructed with multichannel neurons, memory turns out to be “a thing in a place.” It is not remotely “a process in a population.”

The irreducible minimum kernel of memory is a single channel number, 17 for example. This is a comfort. The atom of memory is a channel number. It is embodied as a numbered slit, a longitudinal channel the length of an axon — one of maybe 300 such longitudinal slits.

How are the slits assigned a "number"? Either by position in a sequence or by a biochemical marker, and ideally by both. We have to belabor this because, after all, the nerve has no real number sense. It computes by analogy, for example, by comparing analog levels. An analogy must be made to something, some physical property. In other words, there must be a physical basis for a numberline, and for marking and manipulating "numbers," something like a child stacking cookies to learn how to count.

If a number is assigned to a channel by its relative position, then this means the number follows from sequence. You can arrive at such a number by performing a count. Sequence is excellent, a natural way to create linear order — an arrangement. In biology, and especially in proteins and nucleic acids, sequence is crucial. A sequence (and the shape that follows from it) is the way in which biological information is usually stored. One channel follows another counting around the cylinder of the axon — and is therefore higher or lower in number.

We have used sequencing to associate a certain input stimulus magnitude (say, 5 mV) with a particular channel, using a model mechanism analogous to a rotating commutator arm. The sequence is directional, ascending or descending, depending on the direction of the arm’s movement, clockwise or counterclockwise.
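One way to picture the commutator as a counting device is sketched below. It assumes a linear scale in which each channel the arm sweeps past adds a fixed increment. The increment here is 100 µV per channel, an arbitrary choice, and integer microvolts keep the count exact. The function name and its parameters are hypothetical.

```python
def commutate(stimulus_uv: int, n_channels: int = 300,
              uv_per_channel: int = 100, clockwise: bool = True) -> int:
    """Sweep a hypothetical commutator arm channel by channel, adding a fixed increment
    per step, and return the channel at which the sweep reaches the stimulus.
    Clockwise counts up from channel 1; counterclockwise counts down from the top."""
    order = range(1, n_channels + 1) if clockwise else range(n_channels, 0, -1)
    swept = 0
    for channel in order:
        swept += uv_per_channel
        if swept >= stimulus_uv:
            return channel
    return order[-1]  # stimulus beyond full scale: saturate on the last channel

print(commutate(5000))                   # 50
print(commutate(5000, clockwise=False))  # 251
```

The same 5 mV stimulus lands on channel 50 or channel 251 depending only on the direction of sweep. The number is a product of counting in sequence, not of any intrinsic property of the channel.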

If a channel number is associated with a biochemical marker, then on what basis? Angular position on the axon, as indicated by a commutator arm? That’s one possibility.

Another possibility is physical length – a linear distance around the axon cylinder from channel 0 to channel 21, for example. Or, if the channels are helical, the distance to a particular channel can be captured with a measurement taken linearly along the axon’s longitudinal axis.

How to create biochemical channel markers

If the ascending scale of channel numbers is linear, then there is no clear difference in channel spacing — only a sequence — so the distance from one channel to another is best read as a distance from zero in a particular direction. The distance from channel to channel tells us nothing.

However, suppose the channels are arrayed logarithmically, as in a shell. Visualize this section through a conch as a section through the neuron at the commutator. Note that each ascending channel is spaced further apart than the one before, and the sequence has a built-in direction. In this case the physical interval between channels increases with channel number, so each channel can be uniquely identified by its distance to an adjacent channel.

The first two channels, 1 and 2, are very close together. The last two channels, 299 and 300, are spaced wide apart. The interchannel distance makes each channel physically unique.

In modeling these systems you could probably create or cleave a peptide to length, in order to precisely match each interchannel distance with a biochemical marker molecule. In other words, the biochemical channel markers could be derived from, or replicates of, strutlike and cytoskeletal proteins bridging the channels at the axon terminal. This would be a way to produce 299 unique chemical identifiers, each corresponding to and in some way associated with a specific channel.
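A numeric sketch of why a logarithmic layout yields 299 distinct identifiers. Successive gaps grow by a constant ratio, as arc lengths do between equally spaced angles on a logarithmic spiral. Because of that, a gap measured on its own is enough to say which pair of channels it separates. The first gap and the growth ratio are arbitrary assumptions.

```python
import math

# Hypothetical shell-like layout: the gap between channel k and k+1 grows by a constant
# ratio, as arc lengths do between equally spaced angles on a logarithmic spiral.
FIRST_GAP = 1.0   # arbitrary length unit (assumption)
RATIO = 1.01      # growth per channel (assumption)

def interchannel_gaps(n_channels: int = 300) -> list[float]:
    """Gaps between channels 1-2, 2-3, ..., (n-1)-n: n - 1 values, all different."""
    return [FIRST_GAP * RATIO ** (k - 1) for k in range(1, n_channels)]

def channel_from_gap(gap: float) -> int:
    """Identify the lower channel of a pair from its gap alone, inverting the layout."""
    return round(math.log(gap / FIRST_GAP) / math.log(RATIO)) + 1

gaps = interchannel_gaps()
print(len(gaps), len(set(gaps)))   # 299 299
print(channel_from_gap(gaps[16]))  # 17
```

If a marker molecule were cut to match each gap, the marker alone would name its channel. In a linear layout, every gap would be equal and no such lookup would be possible.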

When an action potential arrives at the terminus of a particular numbered channel, let its unique, numbered peptide curl up, snap small — and package it in or on a synaptic vesicle.

Peptides are the handiest and perhaps least obnoxious candidate markers, but there are other possibilities, including, I should imagine, coding and non-coding RNAs. One thinks of RNA because the passage of the contents of synaptic vesicles from cell to cell is oddly reminiscent of viral infection.

In any event, in this model, pools of synaptic vesicles (like nerve impulses) appear to be identical from one synapse to another, but they might not be. If markers are being used, then the vesicles in a given pool are unique to a specific channel — marked and numbered. It is the number, not the vesicles, that matters. The intercellular transmission medium, which is a neurotransmitter, is not the message. It is just a carrier. The message is a channel number.

What is the value to the model of unique biochemical markers arising at and tagging each channel with a number? If each synapse represents a numbered channel connection, then the channels are already kept physically distinct, at least at the nerve's terminals. So why have markers?

The possibility that biochemical channel markers exist is basically a modeling convenience. If they do exist, then they are a chemical form of memory. If the biochemical markers are embodied as short RNAs, then their promise grows larger.

Instant memory

Note that in this model, there is no conversion step involved in making a memory. In other words, streams of nerve impulses are not somehow decoded or processed in order to extract a useful fact to be converted into a memory. The channel number exists from the instant a stimulus is first sensed. Sensing and memorizing are the same thing. Every action potential in motion is, in essence, a memory in passage.

The smallest element of a memory in storage is a single numbered channel. In effect a memory is a number, 17 for example, that is just sitting there, waiting to be fired back into the nervous system. But where is “there”?

Let’s quickly preview a visual memory model based on the multichannel neuron. In the brain, the memory store for a single photoreceptor can be drawn as a treelike structure. Each memorized channel number has an address, and therefore “a place.” In this metaphor, the place is a twig in a treetop.

Twig memory

A single number in storage is just one element of one 3D pixel. We have estimated that a 3D pixel, that is, a bleached disk pattern from a photoreceptor, could be fully characterized with as few as 3 datapoints.


Three datapoints, which are bleached disks, should be enough to capture the color, intensity and spatial phase of incoming light at a point on the retina — at a moment in time.

This photoreceptor disk pattern is represented in the brain by channel numbers and stored as twigs of memory. The twigs comprise 3 associated channels, or 3 numbers in storage, unfired. In this example the channels are 2, 8 and 51.

A nearby twig in the same treetop stores another, different 3D pixel pattern recorded by the same photoreceptor. But that pixel (e.g. 2, 14, 75) will have been captured at a different time, perhaps months earlier or days later.

Memory is not a process in a population. It is a thing in a place – an analog number (or in this case, a trio of numbers) parked at a unique address.
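The forest/tree/twig metaphor can be written down as a small data structure. Each photoreceptor id maps to a tree. Each tree parks twigs at fixed addresses, and each twig holds a trio of channel numbers plus a capture time. The addressing scheme (the next free integer) and the time field are assumptions for illustration, since the model does not yet say how memories are written or positioned in time.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Twig:
    time: int                       # capture time, arbitrary units (assumption)
    channels: tuple[int, int, int]  # e.g. (2, 8, 51): three numbered channels, unfired

@dataclass
class Tree:
    """Hypothetical memory store for one photoreceptor: twigs parked at fixed addresses."""
    twigs: dict[int, Twig] = field(default_factory=dict)

    def store(self, time: int, channels: tuple[int, int, int]) -> int:
        address = len(self.twigs)   # next free address; a twig never moves once parked
        self.twigs[address] = Twig(time, channels)
        return address

def snapshot(forest: dict[int, Tree], time: int) -> dict[int, tuple[int, int, int]]:
    """Pull one stored frame out of the forest: the twig each tree recorded at `time`."""
    return {receptor: twig.channels
            for receptor, tree in forest.items()
            for twig in tree.twigs.values()
            if twig.time == time}

forest: dict[int, Tree] = {}                        # photoreceptor id -> tree
forest.setdefault(0, Tree()).store(10, (2, 8, 51))
forest.setdefault(0, Tree()).store(3, (2, 14, 75))  # same receptor, earlier moment
print(snapshot(forest, 10))  # {0: (2, 8, 51)}
```

Each stored trio sits at one address in one tree. That makes it a "thing in a place," while a whole snapshot is assembled only when many trees are read together.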

The retina of memory

If the memory warehouse for a single photoreceptor is a treeful of twigs, then the brain’s retina of memory is a forest. The retina of the eye maps to the retina of memory. In other words, each photoreceptor of the eye’s retina maps to a tree in the forest. This forest is the brain’s recording medium, its film.


A stored snapshot selectively extracted from the forest comprises as many as 125 million twigs, or 3D pixels. It depicts both a literal snapshot image (from the central fovea, in the heart of the forest) and a back focal plane interference pattern (from the surround).

Here is a literal image:

And here is the interference pattern from the surround — a different way to encode the same image.

Refer to Chapter 6, What Does a Memory Look Like. Any tiny patch of the interference pattern can be used to recreate the whole of the literal image (a duck in this example) by performing a Fourier transform of the patch. In this sense, the visual memory of an image is stored “everywhere,” per Karl Lashley, even though the individual 3D pixels that comprise the interference pattern are stored as discrete numbers with clearly defined addresses.
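A one-dimensional sketch of why a Fourier-plane record is "everywhere." Every Fourier coefficient is a sum over every pixel, so keeping only a small patch of the spectrum around the DC term still reconstructs the whole signal, blurred rather than cropped. Strictly, what a small patch recovers is a lower-resolution version of the whole image. The 32-sample "image" and the patch width below are arbitrary choices.

```python
import cmath

def dft(x):
    """Plain discrete Fourier transform: each coefficient sums over every sample."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Inverse transform, keeping the real part (the input signal is real)."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def keep_patch(X, half_width):
    """Zero every coefficient farther than half_width from DC (frequencies wrap around)."""
    n = len(X)
    return [c if min(k, n - k) <= half_width else 0 for k, c in enumerate(X)]

image = [0.0] * 8 + [1.0] * 16 + [0.0] * 8   # a 32-sample 'literal image'
spectrum = dft(image)
blurred = idft(keep_patch(spectrum, 4))      # only 9 of 32 coefficients kept
print([round(v, 2) for v in blurred])
```

The printed values still rise in the middle and fall at both edges across all 32 positions. The patch has lost sharpness, not a region of the image.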

Stored memory is static, a thing in a place, and a "labeled line" in an entirely new sense: The line is not a neuron or pathway, but a numbered channel. The number is denoted by a position in a sequence and/or by a biochemical marker.

We have suggested how visual memories might be stored, but we have not yet addressed the problems of how these memories are written and positioned in time. However, it can be anticipated at this point that storage and retrieval — especially retrieval — will put memories into motion. If you were to watch storage or retrieval with an instrument, you would probably characterize them as “processes in a population.” But the memory itself is a thing in a place and that thing is, amazingly, a number.

Content addressable memory

At this point we have chosen a “thing to be remembered,” which is a pattern of bleached disks; and we have a means of storing a numerical representation of this pattern of disks, as a trio of channel numbers associated with three isolated channels in a “twig” of memory.

To help determine what sort of read/write machinery is required for this visual memory model, we should establish here that what I hope to emulate is a content addressable memory in the manner of Pieter van Heerden’s optical memory invention.

The “content” is an incoming image from the retina. The job of the memory is to match it with a remembered image or images. For example, suppose the incoming image is a face. The memory will be ransacked for scenes – images – in which that particular face has appeared in the past – the class picture, for example. The effect is to place the incoming image into a physical context supplied by memory.

Van Heerden’s approach calls for a comparator/detector. Think of the comparator as a frame in which the incoming image from the eye is mounted. Against this standard image, the memory will pump up and superimpose past images until it achieves a match. In the van Heerden machine, memorized images matching the input image would be displayed on a screen. In an analogous brain, the matching images or “hits” would be surfaced into conscious thought.

All these cut and try operations are carried out in the frequency domain, that is, using images from the Fourier plane of the eye and stored fragments of images pumped out of memory – also in the Fourier plane.

Two Fourier patterns overlaid for comparison. Upper image is a cat, lower image is a duck. Images courtesy of Kevin Cowtan. The two patterns are roughly aligned on their DC spots. In the model, the red spot corresponds anatomically to the position of the fovea. One image, let’s say the duck, arrives from the retina. Each of its pixels is “live”, generated by a photoreceptor cell. The cat image is one of a series of images — a film strip — pumped from the visual memory for comparison.


The Similarity Detector

Van Heerden’s detector was styled as a similarity detector. Two superimposed Fourier patterns that happened to be identical would produce a maximal additive response from this detector – a hit.

Less similar images would generate responses of lesser amplitude. One could set the sensitivity of the detector to select for only a few images of the very highest similarity. Or one could dial down the sensitivity so that it would register many sort-of-similar images.
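A stand-in for the similarity detector. Here the "additive response" is approximated by the normalized overlap (cosine similarity) of two patterns flattened into lists, which peaks at 1.0 for identical patterns, and the sensitivity dial is a threshold. Cosine similarity is a substitution chosen for illustration, not van Heerden's optical detector, and the toy patterns are made up.

```python
import math

def similarity(a, b):
    """Normalized overlap of two patterns: 1.0 when identical in shape, lower otherwise."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def detect(incoming, library, sensitivity):
    """Names of remembered patterns whose similarity meets the sensitivity dial."""
    return [name for name, pattern in library.items()
            if similarity(incoming, pattern) >= sensitivity]

incoming = [4, 1, 0, 2]                  # toy pattern arriving from the retina
library = {"duck": [4, 1, 0, 2],         # toy remembered patterns (made up)
           "goose": [4, 1, 1, 2],
           "cat": [0, 3, 4, 0]}
print(detect(incoming, library, 0.99))   # ['duck']
print(detect(incoming, library, 0.9))    # ['duck', 'goose']
print(detect(incoming, library, 0.1))    # ['duck', 'goose', 'cat']
```

Lowering the threshold widens the set of hits, from an exact match to near relatives to almost anything. This is the dial described for War Admiral, racehorses, and four-legged animals.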

In the initial discussion of the van Heerden memory at the conclusion of Chapter 4 we used the example of a racehorse presented to the comparator/detector. The literal, photographic image of the racehorse is automatically registered in the retina of the eye as a Fourier pattern.

If the similarity detector were maxed, it would only record a hit for the image of a specific horse – War Admiral, for example. Dialed down, the detector might report any racehorse, a stableful of them. Further down, it might report a hit for any image of a horse or horselike animal, including not only racehorses but ploughhorses, donkeys and burros.

At a still lower level of sensitivity, the detector might record hits for many, many images of four-legged animals: zebras, pigs, giraffes and rats. This simple grouping of four legged animals has the germ of abstract reasoning, accomplished without resort to logic circuitry, made possible by filtering and comparing Fourier planes.


There are various other good reasons for feeding Fourier images, rather than literal images, into the comparator/detector from both the eye and the memory. Perhaps the first is that all this activity is “invisible” to the human consciousness, which is only aware of spatial (literal) images. For this reason we are not subjected to a distracting bombardment of past images as the brain seeks a fit between the present image and a library of past images. One is made aware of the results, but the search is carried out behind the scenes.

The structure identified as the “retina of memory” is film to record incoming images from the eye. It could also serve as the picture frame for a comparator/detector. Each pixel of a recalled Fourier pattern – a candidate for a match with the incoming Fourier pattern on the eye’s retina — is generated by a twiggish anatomical structure in the brain antipodal to the photoreceptor. For these remembered individual pixels, we are using the metaphor of twigs in a tree in a forest. The twigs are memorized pixels. The tree trunk is the brain’s photoreceptor analog. The forest is the store of human visual memory.

Film strip memory

Are we really on our way to a content addressable memory? Not in the sense of van Heerden's. In the original holographic memory he invented, the incoming image was projected through a solid block of recording material. The image essentially "found" or detected its own likenesses at z-axis positions within that block. The query and discovery process was almost instantaneous because the system operated at the speed of light. Coherent light at that.

In the multichannel nervous system, given the specific bits of machinery we have accorded ourselves for model building in this speculation — a true van Heerden memory seems out of reach. It may well be biologically possible, but I am not seeing a way to reproduce it from the parts list now in hand.

The type of memory we can most easily create using multichannel neurons is a stack memory. Each incoming image is time stamped – given a position in a sequence of images, a film strip. Retrieval consists of running the film strip of memory backwards and forwards to discover a match, from visual memory, for the incoming image on the retina.

The content of the image on the retina cannot be said to “address” the memory. It can only be said to establish a standard of similarity for images addressed by another means – specifically, counting and commutation.
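In a stack memory, by contrast, frames are addressed by counting. The sketch below steps backward through a time-ordered strip, frame by frame, and uses the incoming image only as a yardstick of similarity. The frames, the cosine measure, and the threshold are illustrative assumptions.

```python
import math

def similarity(a, b):
    """Normalized overlap of two patterns (cosine similarity; a stand-in measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def scan_backwards(strip, incoming, sensitivity):
    """Visit frames newest-first by position (counting) and return positions of hits."""
    hits = []
    for position in range(len(strip) - 1, -1, -1):   # the address is just the count
        if similarity(strip[position], incoming) >= sensitivity:
            hits.append(position)
    return hits

strip = [[1, 0, 0], [0, 1, 0], [1, 0, 0.1], [0, 0, 1]]   # oldest ... newest
print(scan_backwards(strip, [1, 0, 0], 0.95))          # [2, 0]
```

Every frame is visited whether or not it matches, so retrieval time grows with the length of the strip. Content never jumps the search straight to its likeness, which is the difference from van Heerden's memory.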

It seems to me that because of its technical simplicity, a stack memory would be plausible as a primitive animal memory. For a creature chiefly interested in the Now versus the Just-Now, the purpose of memory is to notice changes. This includes changes in the apparent size and direction of movement of a predator or prey. Stack memory, essentially a short film strip, is adequate for these purposes. A slightly deeper stack memory could help the animal mark the all-important distinction between predator and prey.