Chapter fifteen

The brain of the Denisovan girl

Photo by Dmitry Artyukhov. From a tiny finger bone discovered by archaeologists in the Denisova cave dig, it was possible to sequence the genome of a 5-year-old girl who lived here 80,000 years ago. A comparison of her genes with ours triggers some speculations about visual memory, the conflict between visual and verbal thinking, and the mechanics of synesthesia.

The cave of St. Denis

Denisova Cave, in the Altai mountains of southern Siberia, was named by the locals for a hermit who moved into the cave around 1750. For some reason he called himself Saint Denis – hence the name, Denisova.

The original Saint Denis was martyred in Paris in AD 250. After his head was chopped off, Saint Denis is said to have picked it up and walked ten kilometers, descending from the heights of Montmartre with his head held high, preaching a sermon all the way down. That severed “talking head” is such a recurring theme in stories about saints that scholars have given it a name. It is called a cephalophore.

The Denisova Cave in Russia has been excavated systematically by archaeologists since the early 1980s. In summer it is a lovely remote spot. From the mouth of the cave there is a commanding view of the Anui river and its valley, 90 feet below. In the valley scientists have constructed a base camp with housing, working and meeting facilities. The interior of the cave is criss-crossed with taut lines to impose a precise coordinate system on its spaces and volumes. Twenty layers, corresponding to about 300,000 years, have now been excavated. During the past 125,000 years the cave has been inhabited at various times by modern humans, by Neanderthals and, we now know, by yet another type of archaic human – the Denisovans.

Each summer, young people from surrounding villages are hired to trowel and sift in the cave for tools, bits of tools, animal and human bones and teeth and whatever else might be caught in a sieve retaining any and all objects larger than 3 to 5 mm. Objects captured in sieves are labeled to show the exact location of the find, washed, and bagged for subsequent scrutiny by scientists. Sometimes in the afternoons, the kids break their concentration and play volleyball outside the cave.

In 2008 one of the workers bagged a tiny bone. A Russian archaeologist thought the bone might have come from an early modern human. He sent a fragment of the bone for DNA sequencing to Svante Pääbo at the Max Planck Institute for Evolutionary Anthropology in Leipzig, Germany. Svante Pääbo is famous. He pioneered the field of sequencing ancient DNA. In October 2022, he was awarded the Nobel Prize in Physiology or Medicine for his discoveries concerning the genomes of extinct human species and human evolution.

The bone from Denisova turned out to be the fingertip bone from the little finger of a 5-year-old girl who lived 80,000 years ago.

From the girl’s DNA we know she was not a modern human, nor was she a Neanderthal. She is representative of a previously unknown type of archaic human, perhaps a cousin of the Neanderthals, now styled as Denisovans.

Two molars sifted from the same layer in the cave belonged to two other Denisovans. This brought the total haul of Denisovan remains to just three objects. The two teeth are primitive and enormous. In July, 2017 it was reported that another tooth, originally found in a deeper layer of the Denisova cave in 1984, had been identified, by its mitochondrial DNA, as the tooth of one more Denisovan. This fourth Denisovan lived 50,000 to 100,000 years before the Denisovan girl.

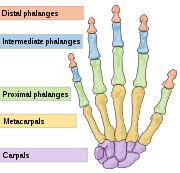

Other than her fingertip (the distal phalange of her little finger) there are no fossil remains of the girl. There is no associated skeleton. The fingertip bone is smaller than a baby aspirin. Her age was deduced from the immaturity of the bone. From her DNA we can say that in life she had brown hair, brown eyes, and brown skin with no freckles. And big back teeth.

Her fingertip is unique among archaic human fossils in that the ratio of her own DNA to encroaching microbial DNA is 70% vs. 30%. Other fossils found in temperate climates (e.g., most Neanderthal bones) are not remotely this rich in endogenous DNA; their values typically range from 1% to 5%. One of the Denisovan teeth found in the same layer as the girl’s fingertip has just 0.17%.

It is speculated that the fingertip bone was somehow quickly desiccated after death, and that this put a stop to the enzymatic degradation of her DNA and to microbial growth. A quick desiccation might thus account for the high concentration of endogenous DNA. I wondered if perhaps the finger dangled from and was dried out by a funeral pyre. In any event, thanks to good luck and a new sequencing technology, this extinct girl’s genetic blueprint has triumphed over time: 80,000 years after her death, her genome was transcribed from her fingertip’s lode of endogenous DNA.

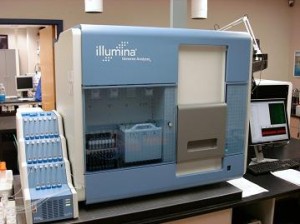

By late 2012, three different sequencing efforts based on DNA from the Denisovan girl’s fingertip had been published. The first paper reported the sequence of her mitochondrial DNA. The second reported a rough draft sequence (1.9-fold coverage) of her nuclear DNA. The third paper, which is a technological tour de force, was published in Science online on August 31, 2012. It reports a novel sequencing technique that was essentially invented for the Denisovan girl.

The first author is Matthias Meyer, who did the work as a post-doc in the lab of Svante Pääbo. Meyer’s new sequencing method, which was patiently and painstakingly developed, is specifically designed to recover sequences from degraded ancient DNA. Automatic sequencing machines are designed to operate on double stranded DNA but in degraded ancient samples, double strands of DNA have unraveled into single strands. Meyer’s technique starts from a library of single stranded DNA. The finished product is truly remarkable. The sequence for the extinct Denisovan girl is comparable in coverage (quality) to sequences we can read from contemporary walking-around modern humans.

Comparative human genomics

For the first time, it became possible to compare in detail the genome of a modern human with that of an archaic human, the Denisovan girl.

It is a comparison that is bound to be full of tricks and traps, since we know a lot about ourselves but almost nothing about the Denisovan phenotype. For example, it is a huge temptation to overlay these two slightly different genomes in an effort to discover the genetic reason why we are so smart and why, by implication, the Denisovans were so dumb. A problem here is that we think we are pretty smart but we don’t actually know the Denisovans were not.

Another thing we don’t know is what happened to the Denisovan girl. She died in childhood. She may have had an inborn error of metabolism or may have been, by Denisovan standards, genetically abnormal in some other way. She is just one individual. One should not generalize — but it is of course impossible to resist generalizing.

As it turned out, her genome and the modern human genome are quite close. There were about 112,000 single nucleotide changes and 9,500 insertions and deletions that have become fixed in modern humans. In a genome of 3 billion base pairs, this is a small number.
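To put "a small number" in proportion, here is a rough back-of-the-envelope sketch in Python. It uses only the rounded figures quoted above and the usual approximation of 3 billion base pairs. Counting each insertion or deletion as a single event, regardless of its length, is a simplifying assumption of mine, and the variable and function names are purely illustrative.

```python
# Rounded figures quoted in the text; the genome size is the usual approximation.
GENOME_SIZE_BP = 3_000_000_000
FIXED_SNCS = 112_000      # single nucleotide changes fixed in modern humans
FIXED_INDELS = 9_500      # insertions and deletions fixed in modern humans


def fraction_of_genome(changes: int, genome_size: int = GENOME_SIZE_BP) -> float:
    """Return the share of genome positions touched, one position per change."""
    return changes / genome_size


total_changes = FIXED_SNCS + FIXED_INDELS
snc_fraction = fraction_of_genome(FIXED_SNCS)
total_fraction = fraction_of_genome(total_changes)

# Average spacing between fixed changes, in base pairs (integer division).
bp_per_change = GENOME_SIZE_BP // total_changes

print(f"Fixed SNCs as a share of the genome: {snc_fraction:.4%}")
print(f"All fixed changes as a share of the genome: {total_fraction:.4%}")
print(f"Roughly one fixed change every {bp_per_change:,} base pairs")
```

On these assumptions the fixed differences touch about four thousandths of one percent of the genome, which is the sense in which the two genomes are "quite close."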

Many single nucleotide changes (SNCs) can be ignored because they do not produce amino acid changes upon translation. Viewed in this way, it is possible to tabulate a list of what seem to be the most important genetic differences that distinguish us from the Denisovans:

• 260 single nucleotide changes (differences) that account for fixed amino acid substitutions in well defined modern human genes.

• 72 fixed single nucleotide changes that affected splice sites

• 35 single nucleotide changes that affected well defined motifs inside regulatory regions

We are past the notion that one gene => one polypeptide, but we don’t know what to expect in the way of post transcriptional manipulation of gene products. Alternative splicing can multiply or change gene products. There is silencing at the level of mRNA and there are probably regulatory systems we don’t yet understand or know how to recognize. But the rhetorical direction we are following here is to pare down the list of 260 genes and narrow our focus, rather than explode it.

One way to arrive at a short list of interesting genes is to concentrate on human genes that are different from the Denisovan genes and are also known to be associated with specific diseases. The genetic change does not necessarily imply there is some new way to parry a disease process in modern humans. The disease association simply points to the organ or system in which the changed gene operates. From the paper:

“Of the 34 genes with clear associations with human diseases that carry fixed substitutions changing the encoded amino acids in present-day humans, four … affect the skin and six … affect the eye. Thus, particular aspects of the physiology of the skin and the eye may have changed recently in human history.”

The shortest list

Following another tack, the researchers surfaced a different, even shorter list. It consists of just 23 genes. These 23 could be regarded as the most decidedly modern genes, in the sense that they are unique to modern human beings. The original sequences for these 23 genes are almost perfectly conserved (intact, unmodified) in non-human primates, including chimps, gorillas, and orangutans. The genes are also conserved in the presumably somewhat apelike Denisovan girl. But in modern humans the old ape sequences have been decisively modified, in each of the 23 genes, to produce a new gene and a new gene product. From the paper:

“We note that among the 23 most conserved positions affected by amino acid changes (primate conservation score ≥ 0.95), eight affect genes that are associated with brain function or nervous system development (NOVA1, SLITRK1, KATNA1, LUZP1, ARHGAP32, ADSL, HTR2B, CNTNAP2). Four of these are involved in axonal and dendritic growth (SLITRK1, KATNA1) and synaptic transmission (ARHGAP32, HTR2B) and two have been implicated in autism (ADSL, CNTNAP2). CNTNAP2 is also associated with susceptibility to language disorders (27) and is particularly noteworthy as it is one of the few genes known to be regulated by FOXP2, a transcription factor involved in language and speech development as well as synaptic plasticity (28). It is thus tempting to speculate that crucial aspects of synaptic transmission may have changed in modern humans.”

Here are some things that are thought to distinguish us from apes: We talk. We learn to watch each other’s eyes for clues about what someone else may be thinking or feeling. We empathize. We think fast. We make art, use symbols, and adorn ourselves with jewelry. (These shell beads, possibly from a necklace, were found by archaeologists in the Blombos cave in South Africa. The shells were perforated with a tool 75,000 to 100,000 years ago.)

Could she talk?

There is a subtext here: Maybe one gene, or an ensemble of genes picked out from among these 23 utterly modern human genes, has made us smarter, more talkative, and more socially adroit and bejeweled than apes and archaic humans. In other words, maybe now we can draw lines between the characteristics that make us modern humans and the genes that make us modern humans.

The key to human behavioral modernity — our giant step up from apehood — was human language. In comparing the Denisovan girl’s genome with that of modern humans, an implicit and persisting goal is the discovery of genetic substitutions in modern humans that somehow started us talking.

A corollary assumption is that Denisovans, Neanderthals and early modern humans could not talk or could only just barely manage it.

FOXP2 and the Upper Paleolithic Revolution

These ideas and assumptions about language and the timeline of human progress have a surprisingly short history. In the late 1990s and early 2000s, it was strongly argued that culturally modern humans emerged, talking, as recently as 50,000 years ago. By that time, Homo sapiens had been around for about 150,000 years but he had not accomplished much. He made and used tools and weapons but did not progress, over the span of 150,000 years, to newer and better tools and weapons. He seemed to have been stuck, repeating himself, as though he were unable to invent.

Behavioral modernity, when it arrived, brought excellent innovations in tools and weapons, the use and recognition of symbols (signaling a facility with language), jewelry and impressive art. According to this narrative the sudden success of modern humans led to their energetic expansion, about 40,000 years ago, out of Africa, into Europe and Asia, the South Pacific and ultimately across the world and into the sky.

The hypothesis of an abrupt human breakthrough to behavioral modernity was styled in the literature as the Upper Paleolithic Revolution. Probably its most vigorous and convincing proponent was the archaeologist Richard Klein at Stanford. For a snapshot impression of Klein and his thinking in 2003, when the concept of the Upper Paleolithic Revolution may have been at its zenith, see this archived article entitled “Suddenly Smarter” from Stanford Magazine.

It seemed to Klein the revolution in human progress could have come about so suddenly because of an underlying biological change. One that archeologists could read in ancient bones was the lowering of the modern human larynx. This occurred about 50,000 years ago. The lowered larynx was thought to have facilitated human speech.

In 2001 a defect in a single gene, FOXP2, was found to be responsible for a serious familial language disorder. At that time it was the first and only gene clearly associated with the human gift for language. In 2001 it was an easy jump to the idea that a changed FOXP2 gene in modern humans triggered the revolution. A mutation in the FOXP2 gene, an abrupt biological shift, might have enabled Homo sapiens to start talking. From language, human success could have followed immediately. Language enabled us to create, share and steadily expand a common store of modern human wisdom, lore and technology.

The pursuit of FOXP2

With the revolution in mind, let’s fast forward to the 2012 Science paper on the genome of the Denisovan girl, and specifically to the discussion of how modern humans are different from this archaic girl and from her apelike cousins and ancestors.

It seems meaningful that eight of the 23 changed genes in modern humans are known to affect the nervous system, including the brain. But it is of course unclear how our modern human nervous system ultimately differs, in operation, from those of the archaic humans and apes.

In the paper there is a rhetorical bridge leading to FOXP2, which is still famously associated with language and vocalization. It orchestrates neurite growth in development. However, at the level of protein, the FOXP2 transcription factor is identical in modern humans, Neanderthals, and Denisovans.

This made it appear that any hoped-for alteration in the FOXP2 protein (a changeover leading to modern human success, perhaps) wasn’t there. But in a subsequent paper, Tomislav Maricic et al. of Pääbo’s group reported that the typical modern human FOXP2 gene does in fact differ from that of archaic humans and apes. There is a cryptic one-base change hidden in an intron, within a binding site for a transcription factor that modulates FOXP2 expression.

However, this modernistic mutation is not found in 10% of contemporary modern humans in Africa. Their FOXP2 intronic binding sequence is identical to that found in archaic humans and apes. They can talk, so this subtle mutation does not, after all, augur for or help explain a modern human breakthrough to language.

FOXP2 comes up again in a March, 2016 Science paper which reports the genome sequencing of 35 living Melanesians. Both Denisovan DNA and Neanderthal DNA survive in Melanesians. The Denisovan component varies between 1.9% and 3.49%. But the paper describes “deserts” of archaic DNA in these individuals. The deserts are long passages in the genome where Denisovan and Neanderthal DNA are not found at all. Deserts are thus places where the differences between the archaic humans and ourselves are most pronounced. One might imagine that the archaic DNA had been depleted — culled and shucked in favor of modern human DNA.

In a stretch of DNA on chromosome 7, for example, no Denisovan or Neanderthal DNA appears. The researchers measured the enrichment of modern human genes in this stretch. “Enrichment” has a special meaning in this context but one would not go too far wrong to take it literally. The list of enriched genes includes FOXP2. It also includes two or three genes associated with autism. The implication is that genes associated with the development of modern human language (and with things that can go wrong with the development of modern human language) are to be found in a stretch of DNA on chromosome 7 that is unique to modern human individuals. So the idea that FOXP2, the “language gene” or “grammar gene,” somehow brought the revolution persists. But did a revolution actually occur?

… a revolution that never was?

Today many and perhaps most archeologists believe the Upper Paleolithic Revolution never happened. What at first seemed to have been a revolution that occurred as recently as 50,000 years ago has been smeared out across the axis of past time by discoveries of artifacts signaling human modernity from sites dated deeper and deeper into antiquity. A nice cultural distinction between Neanderthals and modern humans has also been smeared out: protein analyses show that Neanderthals in France made jewelry. In 2018, it became evident the Neanderthals also made art.

And the lowering of the larynx 50,000 years ago cannot be said, after all, to have enabled or facilitated human speech. Computer modeling of the hyoid bone of Neanderthals suggests they could have spoken just as readily as any modern human. Neanderthals were perfectly able to talk and in the view of some researchers, they probably did talk. If so then their near cousins, the Denisovans, probably talked as well. In this view human language evolved over a long period, perhaps a million years.

Language is complex. It does seem realistic to imagine that it evolved over the span of a million years — not suddenly, in just 50,000 years or less.

The gene encoding FOXP2 is no longer the only gene known to be associated with human language. A few others have been discovered over the years but FOXP2 is still one of a small set.

FOXP2 is a transcription factor. It is well conserved in mammals. In a mouse embryo, the mouse version of FOXP2 orchestrates the transcription of 264 other genes. Sometimes it enhances transcription but often it suppresses transcription.

The 264 targeted embryonic genes build and configure neurons. They affect the length and branching of neurites. It’s a tantalizing process, but it seems doubtful we will soon discover in this complicated type of gene network a simple fork in the road that led to our gift for language.

So where are we? Did human language evolve over the span of a million years? Or did it tumble into place all of a sudden between 100,000 and 50,000 years ago, and thus launch a revolution in modern human progress and prowess?

Which?

Well, we don’t necessarily have to guess which. We can guess both, as follows:

Two conflicting modes of communication. One wins.

Here is a possibility we will explore later in this chapter: Suppose archaic humans, early modern humans and our common ancestor relied upon a mode of communication that was based on vocalizing, visualizing and, above all, synesthesia. It was not a verbal, grammar-based language but it was highly evolved. Imagine that a carefully vocalized sound made by one human was directly transmuted, in a nearby human listener’s brain, into a remembered image or a remembered scent or both.

Without using words like “Here comes a bear” — using only a vocalized sound like a hoot or a yelp — the image and scent of an oncoming bear was made instantly obvious to every human being within earshot.

Further suppose this old, rather animalistic mode of early human communication worked beautifully but it inhibited the development of a newer, slowly emerging mode of communication: a verbal and grammatical language. A conflict between the old and new modes of communication arose because both depended upon vocalization.

Something about the new second language based on words conferred an advantage. It could tell a more complete story. Instead of the warning cry, a yelp that conveyed the image and scent of a bear, verbal language could convey a detailed idea and position it in space and time: “A mother bear and her two cubs were sighted across the river yesterday morning and they were drifting this way.” Maybe verbal language was much quieter — useful on a hunt. Maybe it was sexier. Evolutionary pressure began to strongly favor verbal language over the old, established synesthetic mode of communication.

According to this hypothesis, genetic changes turned off the older mode of vocal communication in modern humans. The changes gradually suppressed or excised the brain machinery that supported the old, synesthetic mode of communication. Once the older, rival mode of vocal communication was silenced, modern verbal language was quickly perfected. A revolution in human progress did in fact ensue for late modern humans. It is an open question when the switchover may have occurred and how long it took to accomplish it. But it was revolutionary.

In short, one mode of vocal communication, which we call language, supplanted another, older mode of vocal communication for which we have no word. The old, synesthetic mode of communication was the template against which the new mode, verbal language, was created.

The creation of verbal language may have taken a long time but the switchover from one mode to the other could have been abrupt. The genetic changes that made the changeover happen constitute a developmental OFF switch.

Per this scheme, FOXP2 might function broadly in modern humans as an OFF switch for an archaic and obsolete brain configuration. Once the old brain was blocked from development the old mode of vocalized, synesthetic communication was deeply suppressed. The new talking brain, with its ability to reason in words and communicate with words, suddenly worked better. Ultimately it worked brilliantly.

Obviously this is speculation.

Where were they?

From fossils, we know in an approximate way where the Neanderthals lived. But until 2019, all confirmed Denisovan fossils had been found in a single cave in Siberia. In 2019, a jawbone found decades before, in a cave high on the Tibetan Plateau in China, was identified as Denisovan. So our sketchy sense of the geographic distribution of Denisovans is necessarily based on genetic fossils, that is, Denisovan genes and sequences that have survived in present day populations of modern humans. These genes were “introgressed” into modern humans in intermarriages between the species. The first concentrations of Denisovan genes identified in modern humans were found, surprisingly, in Aborigines and Melanesians, who live very far from the Siberian cave of the Denisovan girl.

One can use a computer to scan thousands of published modern human genomes for stretches of DNA that may have been introduced by interbreeding with archaic human species. These searches can be conducted without reference to Neanderthal or Denisovan genomes, in effect hunting for DNA that, per several technical criteria, doesn’t seem native to the modern human genome.

A novel technique for a scan of this type was reported by Sharon R. Browning et al. in Cell in 2018. Using it, the authors were able to determine that modern humans interbred with Denisovans at least twice. In addition to the stretches of Denisovan DNA already identified in 2012 in Australian aborigines and Melanesians, it now appears that there was another instance of introgression of Denisovan DNA into the modern human genome. This DNA is detected in populations in East Asia, but not among Melanesians and aborigines.

Here is a schematic map published in the Cell paper — a genetic geography of Denisovan and Neanderthal DNA found in contemporary modern human populations. Pink is Neanderthal DNA. Light blue indicates Denisovan DNA that is most closely related to that of the Denisovan girl. Dark blue indicates Denisovan DNA that is less closely related to that of the Denisovan girl.

The two blues denote two distinct instances of interbreeding between Denisovans and modern humans, apparently involving two different, geographically and/or temporally separated populations of Denisovans. Nothing in the data to this point tells us the order in which these long ago episodes of love-making between modern humans and Denisovans occurred.

One idea is that some Melanesians, having picked up stretches of “dark blue” Denisovan DNA, migrated back north into Asia, and thus introduced the dark blue Denisovan DNA, along with their own, into the far eastern population of modern humans.

In addition to an apparent East-West distribution of Denisovans and Neanderthals (with a lot of overlap) there is a compelling argument that at some point in history, the distribution of these two different human species was vertical. Denisovans were specially adapted for life at extremely high altitudes.

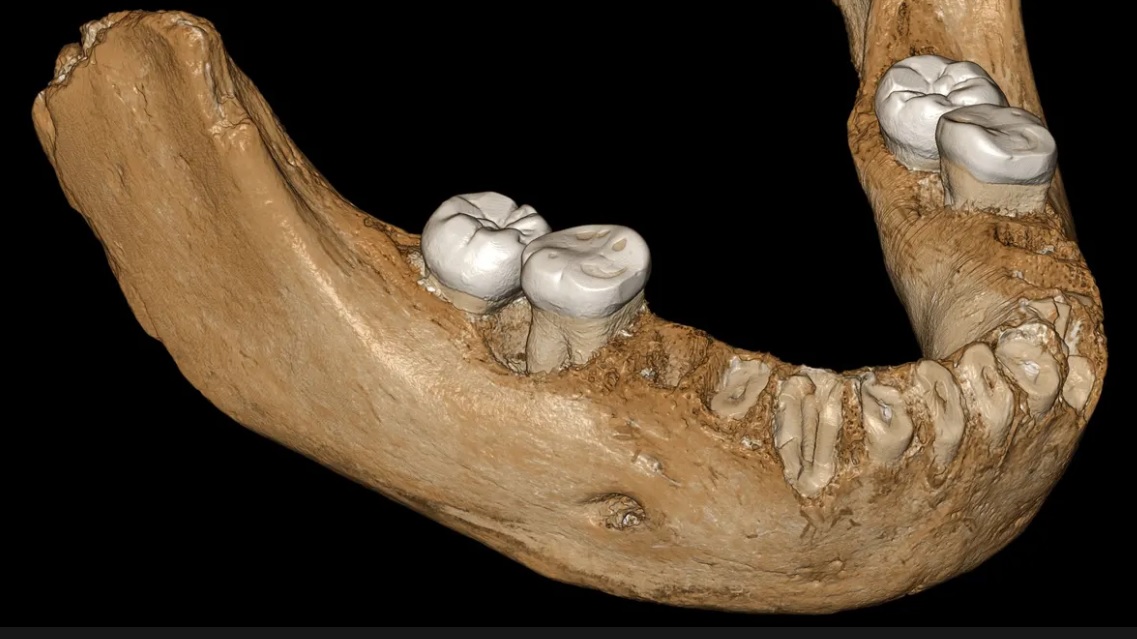

On May 1, 2019, Nature published a letter reporting that a big, powerful looking archaic human jawbone, discovered in a Chinese cave high on the Tibetan Plateau, had been identified (by protein sequencing) as the jaw of a Denisovan. The jaw was dated to ~160,000 years ago.

The cave’s entrance is at 3,200 meters. This is about 10,500 feet above sea level, or two miles high. The Denisovan genome suggests they were specially adapted for life where oxygen is thin. To put the altitude of their chosen cave into perspective, note that pilots of unpressurized planes must resort to supplementary oxygen if they fly above 10,000 feet for 30 minutes or more. Above 12,000 feet they are required to breathe supplementary oxygen continuously.

Modern humans in Tibet have been shown to carry a gene of Denisovan origin that helps them cope with high altitude.

This jawbone was the first Denisovan fossil that was not found in the Denisova cave in Siberia. It is also the first Denisovan fossil that is large enough to give us a sense of the size of an adult Denisovan. The individual was big, perhaps more hulking than a typical Neanderthal, with a bigger brain and a heavy build, and weighed perhaps 200 lbs. One might guess the Denisovans were by some means well insulated against the extreme cold of their lofty, thinly oxygenated environment. The photo (Jean-Jacques Hublin, Max Planck Institute for Evolutionary Anthropology, Leipzig) shows a whole jaw reconstructed using software, essentially by mirroring in 3D the half-jaw found in the cave. A mineral encrustation that obscures the underlying bone of the real fossil was deleted from the 3D model.

The hybrids

In the August 6, 2018 issue of Nature, Viviane Slon of Svante Pääbo’s group reported her analysis of a genome extracted from a bone from a second “Denisovan girl.” She lived to be about 13 years old ~90,000 years ago. Half her DNA was contributed by her Neanderthal mother and half was contributed by her Denisovan father. Her father’s Denisovan DNA showed that he brought to the marriage an ancient trace of Neanderthal ancestry.

In this single find, we can see evidence of two intermarriages between Neanderthal and Denisovan individuals. This suggests the practice was not uncommon. One could speculate, I suppose, that these two archaic human species were ultimately amalgamated into one. This might explain why we have not found more Denisovan fossils. In any event, archaic hybrid individuals could write stretches of both Denisovan and Neanderthal DNA simultaneously into their offspring.

As for modern human and Denisovan hybrids, it is a mystery story. Denisovan DNA sequences are unquestionably found where they are found in modern human populations. Exactly how and when they got there remains a puzzle and there are of course various competing hypotheses.

Autism

At this point, what jumps out from the listing of 23 modernized human genes, and again from the report on modern human gene enrichment on the modern human chromosome 7, is the curious implication of a few genes associated with autism.

Autism is an umbrella word. It is such a broad diagnosis that it can include both people with high IQs and people with intellectual disability. People with autism can be chatty or silent, affectionate or cold, methodical or disorganized. Until 2013 there were five formally recognized forms of autism. This list was reduced to three broader type definitions in 2013. Because autism is a diagnosis it is regarded as an illness, disorder or condition. But it can also be understood as a gift.

Many people who have been diagnosed as autistic have an astonishing gift for visualization and pictorial thinking. It has been suggested and we will urge here that this gift is an atavism — a re-expression or resurgence of an ancient style of thinking. Following is some speculation about this possibility, a gathering of cards that have now landed face up on the table.

The Aboriginal mélange

The UCLA sociologist Warren Tenhouten spent many years studying the culture and uncommon intelligence of Australian aborigines. Evidently the aborigines have very strong visual pattern recognition gifts. One of Tenhouten’s books, Time and Society, also includes a succinct history of the aborigines, which I recommend.

Modern humans in prehistoric Europe are thought to have interbred with the Neanderthals. As much as 5% of the DNA in the genomes of contemporary Europeans is Neanderthal. My own DNA is 2.9% Neanderthal. The people we now characterize as aborigines worked their way east from Europe and carried with them this typical Neanderthal fraction of up to 5%.

Australian aborigines and Melanesians are descended from modern human contemporaries of the Denisovans. Their ancestors perhaps interbred with the Denisovans. Up to 6% of aboriginal DNA is like (highly homologous to) that of the Denisovan girl.

However, it is not at all clear who may have interbred with whom. Another intriguing hypothesis has both Denisovans and the aborigines acquiring their “Denisovan” DNA from Homo erectus. Note as well that the convenient West=Neanderthal and East=Denisovan story is not absolutely solid, since Denisovan-like sequences were identified in an ancient femur from Spain, Europe’s far west. One might explain this by suggesting the femur belonged to an ancient human who lived sometime before the split between the Neanderthals and the Denisovans. But it is a muddle.

We have definitely learned, however, that by whatever pathway, direct or circuitous, up to 6% of aboriginal DNA is like that of the Denisovans.

We have also learned that four or five genes among the hundreds that have been found to be associated with autism in modern humans may have somehow figured into the story of what distinguishes modern humans from the Denisovans. Aborigines have a gift for visual thinking. Such a gift is also common among autistic people. There are no logical or factual bridges here, but there are some intriguing associations.

Aborigines have a quick intelligence but Tenhouten reported that it is not the typical, verbal, “left brain” intelligence that schoolteachers praise, reward and prize. Instead it is “right brain” or visual intelligence. Incidentally, Australian aborigines are frequently born blond. Their blond hair tends to turn brown as they grow up.

Tenhouten was apparently working in an epoch when strict right brain and left brain lateralization was still an accepted idea. It was based on studies of split brain patients conducted in the 1960s. A Nobel was awarded to Roger Wolcott Sperry for this work in 1981.

Today, however, the partitioning of the normal brain into Left/Right lateralized skill sets is regarded as a myth. The skill sets are not mythical. There are indeed visual talents and verbal talents, and they are distinct from each other. But fMRI scans suggest there is no neat left/right anatomical divide between visual and verbal “brains”. As conversational shorthand, however, left brain and right brain are still useful and widely used.

Of the various capabilities that were once attributed to “right brains”, I will pick out and emphasize here visual pattern recognition. Interestingly, it was thought the right brain tended to fix upon patterns as outlines, and to ignore details within. Wherever in the brain this re-imaging process happens, it produces exactly what we would expect of an image that has been Fourier filtered to select for high spatial frequencies.
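
The Fourier filtering idea can be tried on a toy case. The following is a minimal sketch in Python, under assumptions of my own: a single 64-pixel scan line across a bright filled shape stands in for an image, and the cutoff of 8 cycles per line is arbitrary. The helper names (dft, idft, high_pass) are hypothetical, and nothing here is offered as a model of what the brain or the retina actually does. It only shows that discarding the low spatial frequencies leaves the strongest response at the edges of the shape.

```python
import cmath


def dft(signal):
    """Plain discrete Fourier transform of a list of numbers."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse discrete Fourier transform, returning complex samples."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def high_pass(signal, cutoff):
    """Zero every component below `cutoff` cycles per line, keep the rest."""
    n = len(signal)
    spectrum = dft(signal)
    for k in range(n):
        frequency = min(k, n - k)  # fold the negative-frequency half onto the positive
        if frequency < cutoff:
            spectrum[k] = 0
    return [value.real for value in idft(spectrum)]


# Assumed toy input: dark background (0.0) with a bright filled shape (1.0)
# occupying pixels 24 through 39 of a 64-pixel scan line.
N = 64
scan_line = [1.0 if 24 <= x < 40 else 0.0 for x in range(N)]

filtered = high_pass(scan_line, cutoff=8)
strength = [abs(v) for v in filtered]

# Crude text rendering: one '#' per 0.05 of response strength at each pixel.
for x in range(N):
    print(f"{x:2d} {'#' * int(strength[x] / 0.05)}")
```

On this scan line the response is strongest at the two boundaries of the shape and weak across its uniform interior, which is the outline-without-details behaviour described above.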

Run a Google image search on aboriginal art. Notice the strong edge outlining, and imagery that could be interpreted as diffraction patterns.

Also note the rather typical mélange of literal, spatial images (outlines of animals, for example) with what appear to be diffraction patterns. These same characteristics can often be picked out in Picasso’s work. Maybe these are artistic mannerisms. But it is also possible these mixed spatial domain and frequency domain images can tell us something about how the brain reads the retina.

In good light these two different types of images are always accessible at two different depth levels in the retina. The eye’s lens automatically projects toward the retina both a diffraction pattern and a literal picture. The diffraction pattern is shallow, the literal picture is deeper.

See Chapter 7 for a discussion of how images are formed. The diffraction pattern is an integral part of the process of picture formation in the eye. Without the diffraction pattern there can be no picture.

Most of us are conscious of only literal, spatial picture images. The diffraction patterns that produce them are offstage, behind the scenes, outside of our consciousness — we never see them.

But some special people, including aboriginal artists, are apparently aware of and able to draw on canvas the diffraction plane as well as the literal picture plane.

The integration of the two optical planes in art is incredible. It seems to imply an intuitive understanding that one pattern produces the other, that diffraction begets an image. As indeed it does.

Physicists perfected their understanding of how images are formed in the mid-1930s after many decades of work on the problem. The solution is a mathematical description of what happens to light in a process the aboriginal artists seem to be able, somehow, to actually watch or intuit.

Thinking in pictures

The exceptional visual talents of the aborigines have become interesting in a new way. Consider Thinking in Pictures by Dr. Temple Grandin, who is autistic. Here are some remarks quoted from her book:

“I THINK IN PICTURES. Words are like a second language to me. I translate both spoken and written words into full-color movies, complete with sound, which run like a VCR tape in my head. When somebody speaks to me, his words are instantly translated into pictures. Language-based thinkers often find this phenomenon difficult to understand…

“One of the most profound mysteries of autism has been the remarkable ability of most autistic people to excel at visual spatial skills while performing so poorly at verbal skills. When I was a child and a teenager, I thought everybody thought in pictures. I had no idea that my thought processes were different.”

“I create new images all the time by taking many little parts of images I have in the video library in my imagination and piecing them together. I have video memories of every item I’ve ever worked with….”

“My own thought patterns are similar to those described by Alexander Luria in The Mind of a Mnemonist. This book describes a man who worked as a newspaper reporter and could perform amazing feats of memory. Like me, the mnemonist had a visual image for everything he had heard or read.”

In a later book, The Autistic Brain, Grandin remarks that her own subjective experience of autism is not necessarily a depiction of autistic thought processes in general. Specifically, her gift for “thinking in pictures”, and her sense of memory as a movie or a video library, is common to some but not all autistics.

It is interesting, however, that she identifies her ability to think in pictures with that of Alexander Luria’s patient, the mnemonist, Solomon Shereshevsky.

Solomon V. Shereshevsky, 1896-1958. This photo is a frame grab from a 2007 documentary film produced for Russian television, Zagadky pamyati [Memory mysteries]. For the filmmakers, Lyudmila Malkhozova and Dmitry Grachev, the Shereshevsky family made available photos and new biographical details about the celebrated mnemonist. A biographical sketch of Shereshevsky was published in 2013, in English, in a journal article in Cortex by Luciano Mecacci.

Was “the mnemonist” autistic?

Probably so.

Luria, a neuropsychologist, began studying Shereshevsky in the 1920s. The word autism had been coined in 1910 by the Swiss psychiatrist Eugen Bleuler. (Bleuler also coined the term schizophrenia.) In the 1920s autism had a narrowly defined, fairly precise meaning. It meant selfism, i.e. a self-absorbed or self-contained behaviour observed by Bleuler in adult schizophrenic patients.

In the 1920s, Luria could not have had anything like our concept of autism in its broad, mutable and rather cloudy 21st century sense as a spectrum of behaviours. So Shereshevsky might or might not have fallen “on the spectrum” of autism.

The question is taken up in a chapter of a 2013 book, Recent Advances in Autism Spectrum Disorders – Volume I. The author, Miguel Ángel Romero-Munguía, concludes that per the diagnostic criteria of our own epoch, Shereshevsky was most likely autistic. But there is obviously no way, in the 1920s, that Luria could have diagnosed Shereshevsky as autistic in the modern sense of the word. In fact, Luria diagnosed Shereshevsky as a 5-fold synesthete.

It was synesthesia that seemed to hold the secret of his incredible powers of memory. His memory was visual — photographic or filmic — but it was modified, painted with special cues one might say, thanks to his synesthetic gift and to his self-taught techniques as a mnemonist.

In 2013, it was reported by Simon Baron-Cohen, Donielle Johnson et al. that synesthesia is much more common among autistics than among neurotypicals. Synesthesia, which has about a 4% rate of occurrence in the general population, had an incidence almost three times higher in an autistic patient population. The result suggests autism and synesthesia are somehow related or are different aspects of the same biological story.

In both conditions, autism and synesthesia, one can posit a sensory system with its gates left wide open.

Autistic children confront a sensory overload. Often they cannot isolate or quickly focus upon an important input as something distinct from its immensely detailed surround: the total world of a moment received as incoming pixels, sounds, smells, tastes and tactile sensations.

The study raises the hypothesis that savantism may be more likely in individuals who are both autistic and synesthetes. The hypothesis was inspired by Daniel Tammet, a famous contemporary memory savant who has both Asperger syndrome (autism) and synesthesia. He memorized pi to 22,514 decimal places.

Luria’s file on Shereshevsky is still fully preserved in Luria’s archive at his dacha at Svistucha, a village 50 miles north of Moscow. Perhaps a scholarly reading of this material would fill in the evidence that Shereshevsky was both autistic and a synesthete.

We already know, however, that the most important thing to Shereshevsky himself was his visual, filmic, stream of memory.

The gifts of both Shereshevsky and Tammet are strongly questioned by skeptics on the net. The idea is that these two mnemonists’ feats of memory could be explained away as the results of applying well understood mnemonic techniques — and that there is no underlying gift or special talent or unusual brain function. In this view, Luria was naive and Shereshevsky was a trickster.

I am inclined to doubt the doubters. The mnemonists’ brains really do seem to be sculpted in ways that are not typical. One cannot ignore or set aside the fact of synesthesia. In addition there are incredible displays of autistic memory power that are purely visual, and are thus difficult to discount as products of professional memorization techniques.

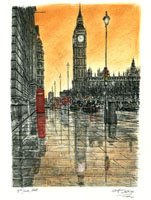

The human camera: Stephen Wiltshire

The most famous autistic artist is Stephen Wiltshire. He specializes in cityscapes. Wiltshire has been characterized in the press as a human camera. He has the ability to draw astonishingly detailed images of scenes he has seen only briefly and only once. He was mute as a child and did not fully gain speech until he was nine.

A facility for thinking in pictures is not uncommonly associated with genius. John von Neumann had this gift. As a child he could scan and then recite from memory pages from the Budapest phone directory. It seemed to observers he was able to simply read aloud from his mental image of those pages.

It has been suggested that eidetic imagery is a gift many and perhaps most children have — and then lose as their verbal skills become seated and then dominant. Maybe it is an instance of ontogeny recapitulating phylogeny. It does happen. Maybe modern humans, like growing children, lost their strong pictorial gifts when they started talking.

Von Neumann’s biographers report that among people who knew him, some people thought he had a photographic memory and some thought he did not. He relished arithmetic (many mathematicians do not) and loved mental calculating. I suspect he probably did retain into adulthood a very literal, visually accessible 2D scratchpad in his head.

Picasso retained his pictorial thinking gift throughout his life. He was able to grid a canvas and then “fill in” picture elements in squares that were remote from each other. When he finished filling in all the squares, the picture was an integrated, coherent whole. Picasso may have been another example of a human camera — but it might be more apt to suggest he was a human projection machine. He could mentally project an image onto a canvas — and then trace over with a pencil this image that only he could see.

Picasso of course thought in pictures but, surprisingly, so did an amateur painter whose professions were writing, politics and global warfare: Winston Churchill.