Chapter fourteen

How does visual memory work?

Photo courtesy of Ann Cantelow. The multichannel neuron model ascribes numbers to channels. The channel numbers store and communicate analog data. They can also be used, in a distinct addressing system, to sequentially query the twigs of visual memory.

Addressing and retrieval

For retrieval, the model requires two types of neurons: 1) an address generating neuron, which drives 2) a data storage neuron. To activate a memory stored as “a thing in a place,” a stored datapoint must be addressed at precisely that place. In the specific case of a stored pattern of three bleached disks imported from a photoreceptor, a trio of associated datapoints, twigs, must be addressed, one right after the other.

The association of a memory with an address is an everyday commonplace of computer technology, but in conventional neuroscience the notion would probably be viewed as too simplistic for a biological brain. Nevertheless, in the multichannel model we have a ready mechanism for generating sequential addresses. The address generator can be the commutator we have postulated at the axon hillock.

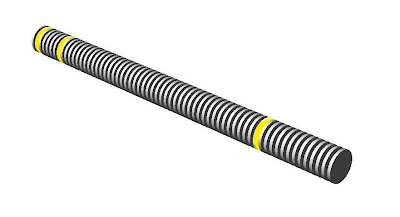

Stimulating the first 9 twigs of memory, #1 through #9, each in turn, requires this sort of circuit. The output lines of the axon driven by the addressing commutator are telodendrions, each corresponding to a channel. In this illustration of the model, telodendrions are numbered in order of their firing. Each individual channel synapses to a dendrite. Each dendrite will be stimulated in its turn, in accordance with the ascending circular order of the addressing commutator.

Each dendrite is a “twig memory”. It stores a channel number that stipulates which channel shall be fired in response to the addressing signal. The effect can be tabulated:

The dendrites, which comprise the twigs of memory in this simple model, are each stimulated in turn. The pattern of bleached disks that each twig has memorized is fired back into the nervous system – precisely replicating the pattern originally dispatched from a single photoreceptor’s outer segment at some time and day in the past. In the table, 9 upticks of the address counter’s commutator correspond to a trio of 3D pixels and 3 frames of a film strip. [A slicker model might use just one address tick to elicit all three datapoints, characterizing intensity, wavelength, phase — but the point is, visual memory is sampled and read out by the ticking of a sequential address counter. It is probably written in the same way.]
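The readout just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a definitive implementation: the names `twigs`, `read_out` and `group_pixels` are hypothetical, and the stored channel numbers are invented for the example.

```python
# Hypothetical twig contents: address (telodendrion number) -> stored channel number.
# The channel values are invented for illustration only.
twigs = {1: 2, 2: 7, 3: 34, 4: 3, 5: 7, 6: 29, 7: 14, 8: 17, 9: 209}


def read_out(twigs, start=1, count=9):
    """Tick the address commutator through `count` addresses, in ascending
    circular order, and collect the channel number each twig fires back.
    Assumes the addresses are numbered 1..len(twigs)."""
    n = len(twigs)
    fired = []
    for tick in range(count):
        address = (start - 1 + tick) % n + 1  # wrap around like a commutator
        fired.append(twigs[address])
    return fired


def group_pixels(fired, size=3):
    """Group the fired channel numbers into 3D pixels of three numbers each."""
    return [tuple(fired[i:i + size]) for i in range(0, len(fired), size)]


pixels = group_pixels(read_out(twigs))
print(pixels)  # three 3D pixels, i.e. three frames of the film strip
```

Nine ticks of the address counter yield three 3D pixels. The "slicker model" mentioned above would correspond to storing each triple under a single address.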

All pixels recorded from the retina at the same time, stored in twigs on other photoreceptor antipodal “trees” will have identically the same time stamp in their address. So simultaneously, synchronously, one pixel from every other “tree” or photoreceptor antipode in the retina of memory is being triggered.

The effect is to pump out of memory a stream of past images — each image made up of millions of 3D pixels. The system is massively parallel and, therefore, moves whole images all at once. It is lightning fast.

Why don’t we see these torrents of images from the past? Why aren’t we drowning in images? Because these are not literal images. They are images of the Fourier plane. Fourier images are invisible to us, except perhaps in the special case of LSD users. Literal images may impinge on the consciousness as, in effect, search products, but the search itself is conducted as a Fourier process and is unconscious — offstage and out of sight.

Numbered synapses — new evidence, old idea

The idea that there might be some sort of detectable ordering or sequencing of synapses on the dendrites is attributed to Wilfrid Rall, who suggested it in 1964 in support of a wholly different and unrelated model of the nervous system. The 24 September 2010 issue of Science carried a featured report that reinforces the notion that some sort of sequentially ordered input pattern exists in the dendrites.

In these experiments, a programmed series of successive stimuli is made to “walk” from synapse to synapse along the dendrite. If the stimulus series progresses toward the cell body, it is more likely to trigger action potentials than a series that walks the other way, away from the soma and toward the tips of the dendrites.

The front half of this experiment consists of the selective stimulation of a row of individual dendritic spines, one after another, using a laser to precisely localize release of glutamate. The basic technology was outlined here. The back half of the experiment is conventional, and consists of electronic monitoring and tabulation of the axon’s response.

In terms of the multichannel model, this electrophysiology is difficult to interpret. However, a significant feature of the model is a staircase of firing thresholds. One might speculate that as the stimulus is made to approach the soma, it is finding or ultimately directing a pointer to lower and lower firing thresholds, which is to say, lower channel numbers. These low numbered channels would be more easily triggered than higher numbered channels.

Unfortunately there is no easy way to directly measure or guess the channel number associated with an action potential in passage, if indeed multiple channels exist. Again in terms of the model, a plot of channel numbers versus synapse position on dendrites (or, using different techniques, on the telodendrions) would produce a fascinating picture. In any event it is interesting that even conventional electrophysiology suggests there may be some kind of sequential ordering, progression, or directional structuring that underlies a map of dendritic spines.

The model

In modeling this visual memory system I think it would be best to use automated rotating or looping machinery, just as you would in many familiar recording and playback devices. The rotating machine is the commutator. At each addressing tree, let the loftiest addressing commutators walk forward through time automatically, incrementing higher channel by channel. Rough synchronization among trees should suffice. Now, instead of hardwiring and broadcasting addresses in detail, the retrieval system can simply be given a start date/time and triggered off. A string of retrieval instructions will ensue. The system will, in effect, read itself out like a disk drive.

As a practical matter, the model of a retina of memory should probably be constructed in software. Each tree of memory can be modeled as a disk drive storing analog numbers representing 3D pixels, stacked in serial order, that is, the order or sequence in which they were originally captured from the eye. Millions of disk drives, then, each of relatively modest capacity, comprise a retina of memory. In a primitive animal one would expect to find a single retina of memory. In a sophisticated animal, many.

Let’s say the memory trees pre-exist in a newborn animal and that their twigs are unwritten. Each branch is a point in a commutator sequence, and identifies time (that is, sequence) ranges.

From the point of view of addressing the visual memory, reading and writing are, as in a disk drive, similar processes. The writing commutator walks forward through the present moments, guiding incoming 3D pixels from the eye to a series of novel addresses. To elicit a visual memory a reading commutator, which could be the self-same machine, walks forward through addresses denoting a film strip of past moments.

In effect, the pointer of the base commutator on the address generator, as it ticks ahead, is the pointer of the second hand of a system clock. Although the images are recorded at a stately and regular rate, such as one per second — the recall can be made to happen as fast as the commutator is made to sweep. And it could scan backwards as well as forwards.
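As a rough software sketch of the disk-drive analogy, one tree of memory might be modeled as follows. Everything here is an assumption made for illustration: the class name `MemoryTree`, its methods, the capacity of 300 twigs, and the choice to wrap around and overwrite the oldest twig when the tree is full.

```python
class MemoryTree:
    """One tree of memory: 3D pixels stored in the order they were captured."""

    def __init__(self, capacity):
        self.twigs = [None] * capacity  # unwritten twigs
        self.write_pointer = 0          # position of the writing commutator

    def write(self, pixel):
        """Store one 3D pixel at the next address and advance the commutator.
        Assumption: when the tree is full, the oldest twig is overwritten."""
        address = self.write_pointer % len(self.twigs)
        self.twigs[address] = pixel
        self.write_pointer += 1
        return address

    def read(self, start, count, step=1):
        """Walk a reading commutator from `start`. Use step=-1 to scan
        backwards, or a larger step to skip frames."""
        n = len(self.twigs)
        return [self.twigs[(start + i * step) % n] for i in range(count)]


tree = MemoryTree(capacity=300)
for t in range(5):
    tree.write((t, t + 1, t + 2))  # invented pixel values

forward = tree.read(start=1, count=3)
backward = tree.read(start=3, count=3, step=-1)
print(forward)
print(backward)
```

Writing is paced by the caller, as if by a system clock, while reading is just a loop and can sweep as fast as the commutator is driven, in either direction.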

How is a pixel memory deployed?

This is an unsolved problem in the model. We have to assume it happens but the answer isn’t easy or obvious.

We have stipulated what a 3D pixel memory is: Three numbers — integers — that represent a pattern of light recorded from three disks in a single photoreceptor at a particular moment in time. The three numbers are sufficient to specify the instantaneous wavelength, intensity and phase of the incoming light, as read out of a standing wave in the outer segment of the photoreceptor.

We are suggesting these three numbers are configured and stored in the brain as an addressable twig of memory — three dendritic launch pads for three action potentials to be fired down three specific, numbered axon channels. It is nicely set up, this memory, but how did it happen?

The commutator figures prominently in the problem.

The operation of an initial readout commutator in the addressing neuron seems clear. It simply counts up or down. Other commutators fan out from the initial or system counter. At the upper tier of the addressing tree, the commutators, once toggled, can tick forward “on automatic.”

But what about the commutator in the proposed memory neuron?

In the most basic model of the multichannel neuron, developed in Chapter 2, the neuron is functioning as a sensory transducer. The commutator pointer rotates up to a specific numbered channel in proportion to an input voltage or graded stimulus.

But in the memory neuron, we want the pointer to go, first, straight to a remembered channel. Then, second, to another remembered channel. Then, third, to another remembered channel. Hop hop hop. From the address neuron the memory neuron receives three signals in a sequence, via telodendrions 1, 2, 3. The data neuron fires channels corresponding to three remembered photoreceptor disk positions: 2, 7, 34.

Instead of responding proportionately to an input voltage, as in a sensory neuron, the commutator in the memory neuron is responding discontinuously to a memorized set of three channel firing instructions. So the needle of this commutator must swing, not in response to an analog voltage input, but in response to a pixel memory.

In the multichannel model synapses connect individual channels, rather than individual neurons. This suggests some other possible solutions.

It could be that the commutator is simply bypassed, so that the appropriate axon channels are hardwired to the dendritic twigs of memory. Synapses at the soma could suggest a short cut past or a way to overrule the inherent commutator.

Maybe there is some rewiring or cross wiring at the level of the dendritic synapses. To borrow a term of art from the conventional playbook of memory biochemistry, maybe the synapses are subject to “tagging.” Maybe biochemical markers delivered into the dendrites when the memory was originally recorded are specifying in some way the channel numbers to be fired.

An expensive but biologically plausible way to write a pixel memory would be to eliminate – bypass or catabolize or cleave or block – almost all of the channels in the memory neuron, leaving intact only the 3 specific numbered channels that constitute a pixel memory. All the intervening, unused channels could be dispensed with. Skipped over. Catabolized. Washed away. The commutator would then simply touch off each of the three surviving channels in turn.

For a 300-channel neuron, this rather destructive memory mechanism implies the elimination or disabling of 297 channels that don’t support a memory. The 297 channels must switch off or die off in order to create a memory neuron that remembers a specific sequence comprising just 3 numbers. Each time this particular neuron is stimulated in order to recall a pixel, it will reliably fire off, in perfect order, a sequence of three memorized channel numbers: Let’s say 14, 17, 209. These three numbers precisely describe a pixel captured by a photoreceptor positioned at a unique site on the retina at a specific moment in the past.
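The destructive write can be sketched numerically. This is a toy illustration, not a claim about mechanism: the function names are hypothetical, and the sketch assumes the commutator sweeps the surviving channels in ascending order, so it can only store triples of distinct channel numbers in ascending order, as in the example 14, 17, 209.

```python
def write_pixel_memory(n_channels, pixel):
    """Disable every channel except those named in `pixel`.
    Returns a list where enabled[ch] is True for surviving channels.
    Index 0 is unused because channels are numbered 1..n_channels."""
    enabled = [False] * (n_channels + 1)
    for ch in pixel:
        enabled[ch] = True
    return enabled


def recall(enabled):
    """Sweep the commutator across all channels in ascending order and
    fire only the survivors."""
    return [ch for ch, alive in enumerate(enabled) if alive]


neuron = write_pixel_memory(300, (14, 17, 209))
disabled = 300 - sum(neuron)
print(recall(neuron), disabled)
```

Every sweep of the commutator reproduces the same three channel numbers, and the count of disabled channels comes out to the 297 mentioned above.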

This destructive approach to writing memory consists of biochemically obliterating or bypassing every channel structure that isn’t part of a pixel memory. The notion of a memory based on the mass destruction of non-memories (superfluous channel numbers) seems biologically plausible. It calls to mind the large scale destruction of excess neurons and synapses that occurs naturally in the embryo and continues through the first 12 years of human life. This massive editing out and elimination of superfluous neurons in the developing brain and nervous system is called “sculpting”. From the chapter linked above:

“…sculpting may achieve quite precise ends as it eliminates excess cells and synapses …the Rakic research group has looked at the rate of destruction specifically in the corpus callosum, the tough bundle of nerve fibers that connects the two hemispheres of the brain. In the adult macaque monkey, this bundle contains about 50 million axons. But the macaque brain at birth contains about 200 million axons in this same area. To reach the level at which it functions in adulthood, the corpus callosum apparently eliminates axons at a very high rate—about 60 per second in infancy, for example. Synapses are lost at a much higher rate, since each branching axon could form several points of contact with a target cell. For the human brain, each of these numbers should probably be multiplied by about 10…”

We are suggesting here the far less Draconian destruction, disabling, or bypassing, within a neuron, of a few hundred numbered channels as a way to create a pixel memory.

Note that if the destruction of channels failed to stop just short of completion, and did not leave in place, say, three surviving channels, we would be looking at a process that resembles the destruction of neurons in Alzheimer’s and other dementias. In other words, maybe dementia, which destroys memory, is an unchecked, runaway form of a normal but destructive type of memory-writing process. Equally, dementia might result from a late life re-triggering of the normal developmental process of brain sculpting.

I have deliberately packaged the idea of a pixel memory so that it comprises exactly three numbers in sequence. (I could have picked a larger or, less readily, a smaller number.) The three-digit formulation is intentional. It rhetorically bridges the idea of a memory neuron to that of another familiar memory medium, the biochemical memory codons of nucleic acids. I am not able to push this parallelism very far, but it doesn’t hurt to leave an avenue open to the use of nucleic acids as memory media.

The underlying idea is that the visual cortex could contain two tiers of visual memory. The active memory is hardwired, and uses dedicated neurons as memory organs. But as John von Neumann pointed out, using a neuron to store memory is energetically costly. A deeper store, in DNA for example, would cost very little to maintain, and could sponge up much more information. This approach would require that one disable, rather than destroy, spurious channels in memory neurons. In this way it would remain possible to re-dedicate the memory neurons, as necessary, to a different set of memories from a different epoch or context, recovered from the second tier DNA memory.

This model suggests a Y-convergence of three neurons, not just two. One delivers addresses. One stores the data. A third neuron delivers original data from the retinal photoreceptor – data to be written in sequential order into the dendrites of the memory neuron.

Whatever specific mechanism one might choose or invent, the model requires that a pixel memory arriving from a photoreceptor in the eye be stored in, and retrieved from, at least one antipodal neuron. The pixel memory would be stored as a trio or linkage of about three distinct channel numbers.

Experiment

One interesting aspect of this memory model is that it suggests an experiment. We are guessing that the individual channels of an addressing axon are, in effect, split out and made accessible as numbered telodendrions. If there is indeed a numerical succession – a sequential firing order – of the telodendrions, then this should be detectable. We were taught that the telodendrions must fire simultaneously. Is this always true? I bet not.

Superimposed networks

Note that we have assumed there exists a double network. Above the information tree there is a second tree, a replica of the first, used to individually address each memory “twig”.

The principle of two superimposed networks, one for content and the other for control, is a technical commonplace. An early application was the superimposition of a telegraph network as a control system for the railway network. The most conspicuous present-day example is the digital computer, with its superimposed but distinct networks for information storage and addressing.

We are long in the habit of dividing the nervous system into afferent and efferent, sensory and motor, but surely there must be other ways to split it, e.g., into an information network and an addressing network. It is typically biological that one network should be a near replica of the other. Evolution proceeds through replication and modification.

Arborization and addressing capacity

The first anatomist who isolated a big nerve, maybe the sciatic, probably thought it was an integral structure: in essence, one wire. Closer scrutiny revealed that the nerve was a bundle of individual neurons. We are proposing here yet another zoom-down in perspective, this time to the sub-microscopic level. We suspect that each neuron within a nerve bundle is itself a bundle of individual channels.

It follows that the functional wiring of the nervous system is at the level of channels. Synapses connect channels, not neurons. This is why one might count 10,000 synaptic boutons on a single neuron’s soma. The boutons were not put there, absurdly, to “make better contact” nor to follow the textbook model of signal integration. They are specific channel connectors, each with a specific channel number.

The neuroanatomical feature that most interests us at this point is axon branching. This is because branching is of paramount importance in familiar digital technologies for addressing – search trees and other data structures. We have proposed a treelike addressing system for the visual memory in the brain. It is reasonable to ask — where are the nodes?

Not at the branch points.

Photo courtesy of Ann Cantelow

Branching in a nerve axon is just a teasing apart and re-routing of the underlying channels. It is not a branching marked by nodes or connections in the sense of a T or Y connected electrical branch, or a logical branch in a binary tree.

For an axon that addresses a dendritic twig of memory, all functional branching occurs at the commutator.

Any anatomical branching downstream of the commutator, such as the sprouting of telodendrions from the axon, simply marks a diverging pathway: an unwinding or unraveling, rather than a distinct node or connection. In other words, the tree is a circular data store. The datapoints are stored at twigs mounted on the periphery of a circle. The twigs are accessible through a circular array of addresses. It is analogous to a disk drive in which the disk holds still and the read-write head rotates.

Photo courtesy of Ann Cantelow

Summary of the technology to this point

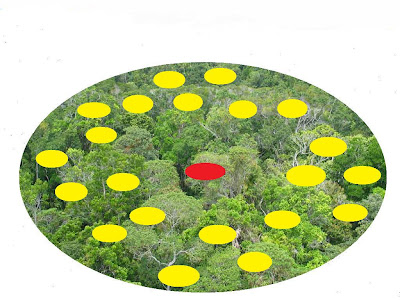

The tree in this photograph is a metaphor for the brain structure which corresponds to, and is antipodal to, a single photoreceptor of the eye. It is one single photoreceptor cell’s remote memory warehouse — a tree of memory.

Each twig is a destination with an address, a neuronal process narrowed down to just two or three channels. For example channels 3, 7 and 29, only, might constitute a given twig. Each twig is a 3D pixel frozen in time. The tree will store as many unique picture elements from the photoreceptor’s past as it has twigs.

As many as 125 million of these trees will constitute a retina of memory. We will look for ways to hack down this number, but for the moment let it stand. The point is, we are talking about millions of trees.

All these trees must be queried simultaneously with a particular numerical address, probably associated with a time of storage, to elicit firing from all the right twigs — just one twig per tree. Properly addressed, a forest of these trees will recreate, almost instantly, a whole-retina image from memory.
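A toy version of this forest query might look like the sketch below. The forest of four trees, the pixel values, and the function names are all hypothetical. In the model the trees respond in parallel; the Python loop here is serial and only stands in for that.

```python
# Hypothetical toy forest: 4 trees (one per photoreceptor), 3 stored moments each.
# Each twig holds a 3D pixel of three channel numbers (values invented here).
forest = [
    [(3, 7, 29), (4, 8, 30), (5, 9, 31)],
    [(2, 7, 34), (2, 6, 33), (1, 5, 32)],
    [(14, 17, 209), (15, 18, 210), (16, 19, 211)],
    [(10, 20, 30), (11, 21, 31), (12, 22, 32)],
]


def recall_image(forest, address):
    """Query every tree with the same address: one twig per tree fires."""
    return [tree[address] for tree in forest]


def recall_film_strip(forest, start, count):
    """Tick the shared address forward to replay consecutive whole images."""
    return [recall_image(forest, a) for a in range(start, start + count)]


image = recall_image(forest, 1)
print(image)
```

A single address pulls one pixel from every tree, so the cost of recalling a whole image does not grow with the length of the stored history.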

In a primitive animal, it would be sufficient to remember 300 images from the recent past. This could be accomplished with a single addressing neuron, a single commutator. But in a modern mammal, it will be necessary to stack the commutators. A bottom commutator can point to any of 300 other commutators. And each of these can, in turn, point to 300 more commutators. With a simple tree of neurons, which is to say, a logical tree built with commutators, one can very quickly generate an astronomical number of unique addresses. We require one unique address for each twig of the data trees.
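The arithmetic of stacked commutators is easy to check. The sketch below assumes 300 channels per commutator, as in the text, and treats a flat address as a number written in base 300, one digit per tier. The function names are hypothetical.

```python
CHANNELS = 300  # channels per commutator, as assumed in the text


def address_capacity(tiers, channels=CHANNELS):
    """Number of unique addresses reachable through `tiers` stacked commutators."""
    return channels ** tiers


def split_address(address, tiers, channels=CHANNELS):
    """Decompose a flat address into one commutator position per tier,
    bottom (system) commutator first."""
    positions = []
    for _ in range(tiers):
        address, position = divmod(address, channels)
        positions.append(position)
    return positions[::-1]


for tiers in (1, 2, 3, 4):
    print(tiers, address_capacity(tiers))

print(split_address(12345678, tiers=3))
```

Three tiers give 27 million addresses and four tiers give 8.1 billion. If one assumes, purely for illustration, a recording rate of one image per second, four tiers would cover roughly 257 years.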

Are there enough addresses available in this system to organize a mammalian lifetime of visual memories? Yes. Easily. Are there enough memory neurons to match the addressing capacity of the addressing neurons? Probably not. The neuronal brain that lights up our scanners is probably running its memory neurons as a scratchpad memory. It seems likely there is a deeper store.

But will it work?

The memory mechanism we have sketched is probably adequate as a place to start. It would work for a directional eye in which changes in wavelength are highly significant cues to the position and movement of a target. It is a visual memory for retaining the “just now,” a film strip comprising a few recent frames.

For an imaging eye, or a human visual memory, this memory system is not yet practical because, in its present form, it is a hog for time and resources.

Bear in mind that this model is extremely fast in comparison with any conventional model of the brain based on single channel all-or-none neurons. Two reasons: 1) It is an analog memory, and 2) it is massively parallel.

But a persistent difficulty with this model is serial recall. It appears this memory has to scroll back through all history to find relevant past images. And each image to be tested for a “hit” is composed from as many as 125 million 3D pixels. This is a huge array to deploy and compare, even though the pixels pop up in parallel. This is why van Heerden’s memory seems to be such a dream system — comparison and recall are instantaneous.

The van Heerden memory has a limitation, however, which a film strip memory does not. If a single face is presented to the van Heerden memory system, it can respond with a class picture in which the face appeared. This supplies context — a surround of useful information associated in the past with this particular face. However, the system does not automatically position the memory in time.

In a film strip memory, in contrast, progression through time is built in. If the input is an image of a shark, the film strip memory will (like the van Heerden memory) turn up an image that puts the shark in a momentary context from the past. Maybe the shark of memory is freeze-framed in the middle of a school of fish.

But in the film strip memory, the film strip can progress forward through past time. The remembered shark turns, sees you, comes toward you, looms large, opens its mouth. In other words the film strip memory provides not only context and associations — but also shows cause and effect. Here is a shark. Fast forward. Here are rows of teeth.

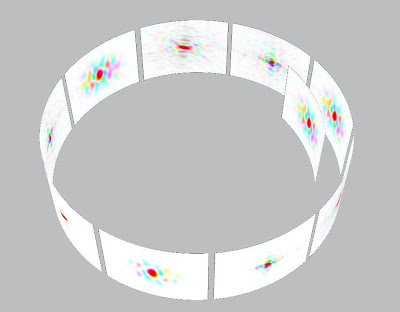

A primitive visual memory can be modeled as a film strip. An incoming Fourier pattern read from the back focal plane of the eye’s lens is tested for a match against a series of Fourier patterns recorded in the past. Fourier patterns courtesy of Kevin Cowtan.

It is reasonable to imagine that the memory we have today evolved from a simple (possibly Cambrian) film strip memory of the type we have described. For a simple animal in fixed surroundings, a film strip memory is fairly easy to model and easy to evolve — to a certain point. But the film strip model soon becomes oppressively slow and heavy with data.

How could this model be speeded up and expanded, that is, modernized? First, by taking full advantage of the Fourier plane. Second, by introducing a metamemory, in effect, a hit parade. Third, by multitasking.

The Fourier Flashlight

Let’s take a moment to orient ourselves, using the central, red DC spot as a point of reference. The red spot marks, in effect, the center of the Fourier plane. It also marks, probably, the position of the fovea.

Fourier transform of a literal image, courtesy of Kevin Cowtan.

We are looking at a structure inside a brain — the retina’s memory — and the red spot marks that part of the memory antipodal to the foveal cones. The fovea is a wonderful thing but we can ignore it in this discussion. It is the hole in the doughnut in terms of Fourier processing and filtering. In terms of natural history, it can’t tell us much. The fovea is a rare and special feature, a splendid particularity of primates, birds, and a few other smart and lucky vertebrates.

But the visual memory evolved in vertebrates that had no fovea. It seems a reasonable guess that the visual memory is grounded on Fourier processing. We have speculated in Chapters 5 and 6 that Fourier processing evolved in vertebrates as a means of clarifying a blurry picture of the world obscured by glia, neurons, and vascular tissue because vertebrate photoreceptors are wired from the front.

One could pump a time-series of addresses into the whole forest of memory, but it makes more sense to address the memory very selectively, so that only part of it responds. This approach is indicated in the photo above with a yellow disk — effectively, a Fourier flashlight. (The red spot marks the position of an antipodal fovea.)

Recall that any part of the Fourier plane can be transformed into the whole of a literal, spatial image from the world. By selecting such a small part of the whole retinal output, illuminated by the flashlight, we have reduced the data storage and processing problem to a tiny fraction of that associated with the original 125 million neuron source. It is because of this hologram-like effect, the whole contained in each of its parts, that Karl Lashley was able to physically demolish so much of the visual cortex with lesions without producing a significant loss in the animals’ visual memory.

Let me emphasize that the Fourier flashlight is a metaphor. It draws a convenient circle around a small population of neurons. In the model this is accomplished by addressing that small population, rather than the whole retina of memory. As the population of neurons and, thus, the flashlight spot gets smaller, the resolution of the literal image that can be recreated by Fourier transformation deteriorates. For rapid scanning and quicker retrieval, one would favor the smallest practical spot. Say a “hit” occurs, that is, a Fourier pattern scanned up from memory is found to match, more or less, a Fourier pattern at play on the retina.

At this point, the spot we are calling the Fourier Flashlight could be expanded in diameter to improve the resolution of the remembered image, broaden the range of spatial frequencies to be included, and perhaps pick up some additional and finer detail.

Where on the retina of memory should we shine the flashlight? Spotlight addressing of the memory map gives us a means to accomplish Fourier filtering. For edge detection, we should select a circle of trees at the outermost rim of the Fourier pattern (and retina), where the highest spatial frequencies are stored. For low spatial frequencies, position a circle near the red DC spot.
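Since the flashlight is really an address map, it can be sketched as a function that returns the addresses of the trees to be queried. The sketch assumes a small square grid of trees with the DC spot at its center. The grid size and the names `flashlight` and `ring` are hypothetical.

```python
import math


def flashlight(size, center, radius):
    """Addresses (row, col) of the trees inside a circular spot."""
    cr, cc = center
    return [(r, c) for r in range(size) for c in range(size)
            if math.hypot(r - cr, c - cc) <= radius]


def ring(size, inner, outer):
    """Addresses in an annulus around the central DC spot.
    A ring near the rim selects high spatial frequencies (edges);
    a ring near the center selects low spatial frequencies."""
    mid = (size - 1) / 2
    return [(r, c) for r in range(size) for c in range(size)
            if inner <= math.hypot(r - mid, c - mid) <= outer]


SIZE = 9  # toy 9 x 9 retina of memory
spot = flashlight(SIZE, center=(4, 4), radius=1)
rim = ring(SIZE, inner=4, outer=4.5)
print(len(spot), len(rim))
```

Only the trees whose addresses appear in the returned list would be queried. Enlarging `radius` after a hit corresponds to widening the flashlight to recover finer detail.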

For most animals most of the time, an enhancement of high spatial frequencies has significant survival value. Here are two images from the Georgia Tech database, the first literal, the second with high spatial frequencies enhanced. Note the sharp definition of the edges of the mirror frame, the clown’s arm, and of the edges of the makeup brushes and pencils. In effect, an image in which edges are soft and ill defined has been turned into a cartoon, with heavy outlines emphasizing the edges of objects. This is the information an animal needs immediately. If the animal were looking at a shark in the shadows, filtering for high spatial frequencies would make the shark’s shape unmistakable.

Edge enhancement in visual image processing and visual memory has an additional advantage, which is that it creates a very spare, parsimonious image consisting of a few crucial outlines. This important data of high spatial frequency needs to be surfaced quickly for survival purposes.

This yellow band indicates an address map for the outer regions of the Fourier plane, where high spatial frequency information crucial to edge detection is concentrated. These are neurons antipodal to rod cells at the outer periphery of the retina. It is interesting that this neglected outer frontier of the retina might have such a critical survival benefit for the animal. The animal’s concept of “an object” arises from edge detection. The uncanny ability to distinguish the integrity of an object even though other objects may intervene is probably rooted in high spatial frequency detection in this part of the retina.

Chopping for speed

A memory that is capable of storing a film strip of images is valuable but slow. We can speed it up by massively cropping the images to be scanned for recall, using the Fourier flashlight technique described above. But one must still scan the images accumulated for “all time” to identify the objects currently in focus on the retina. If the object is, in fact, a shark, one doesn’t have time to scan serially through a lifetime of accumulated memories to recognize it.

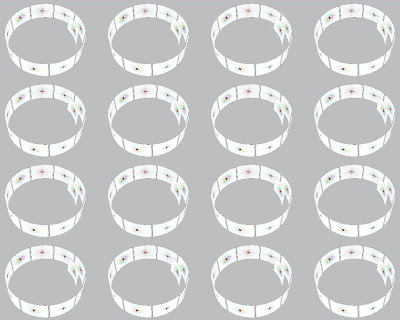

One solution is to chop the serial film strip into, for instance, one hundred short film strips. Or one thousand. Or ten thousand.

In effect we are now playing multiple Fourier flashlights upon the retina of memory. In this way one could make multiple simultaneous scans and comparisons with the incoming retinal image from the eye.

Maybe the image to be matched is an old automobile. Figuratively, one Fourier flashlight can scan for the cars of the 60s, one for the cars of the 70s, one for cars of the 80s, and one for the cars of the 90s.

This works because each flashlight is addressing a spot in the Fourier plane — where any and every spot that might be addressed contains all the information needed to match or reconstruct a whole image.

By multitasking the scans, we are breaking past the cumbersome requirement for serial, linear scanning and recall. It is possible now to see an advantage in importing from the eye a huge retinal Fourier plane. Because of its large area, the incoming Fourier pattern is open and accessible to thousands of simultaneous memory scans. Think of each Fourier “flashlight” as a projector running, from memory, a short, looped film strip. Looping is easy because the addressing mechanism is a commutator. Each projected frame in this little movie is a Fourier pattern that corresponds to (and is transformable into) some remembered object.
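The gain from chopping can be illustrated with a toy scan. In this sketch the frames are plain integers and a "match" is simple equality, which is only a stand-in for a real comparator. The function names are hypothetical, and the film strip is assumed to be non-empty.

```python
def chop(film_strip, n_strips):
    """Split a non-empty list of frames into at most n_strips short,
    contiguous strips. The last strip may be shorter than the others."""
    length = -(-len(film_strip) // n_strips)  # ceiling division
    return [film_strip[i:i + length] for i in range(0, len(film_strip), length)]


def parallel_scan(strips, target):
    """Advance every strip's commutator one frame per tick, in lockstep.
    Returns (tick, strip_index) of the first match, or None if no match."""
    longest = max(len(strip) for strip in strips)
    for tick in range(longest):
        for k, strip in enumerate(strips):
            if tick < len(strip) and strip[tick] == target:
                return tick, k
    return None


film = list(range(1000))  # 1000 frames, labeled 0..999
strips = chop(film, 10)   # ten short film strips of 100 frames each
hit = parallel_scan(strips, 737)
print(hit)
```

Scanned serially, frame 737 would be reached only after more than 700 ticks. With ten strips running in lockstep it turns up at tick 37 of strip 7.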

All these projectors run constantly. In this metaphor, the essential comparator, which is derived from the idea originally conceived by Pieter van Heerden, is a screen. The Fourier flashlights play constantly at spots on one side of the comparator screen. The Fourier plane imported from the eye plays on the other side of the comparator screen. Say the comparator has sensitivity to the sum of the juxtaposed signals on either side of the thin screen. Thus, the comparator will develop a high amplitude signal — the “Voila!” — wherever and whenever there is good agreement between a projected pattern from a memory flashlight and an imported pattern from the eye.
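A numerical reading of the comparator screen can be sketched as follows. The assumptions here are ours, not part of the model as stated: each point of a pattern is represented as a unit-amplitude complex number whose angle stands for the conserved phase, the response is the summed energy of the point-by-point sum, and the phase values and labels are invented for illustration.

```python
import cmath


def comparator_response(memory, incoming):
    """Energy of the point-by-point sum of two complex patterns.

    For each point, |m + e|^2 = |m|^2 + |e|^2 + 2*Re(m * conj(e)),
    so the response rises when the two patterns agree in phase.
    """
    return sum(abs(m + e) ** 2 for m, e in zip(memory, incoming))


def pattern(phases):
    """Unit-amplitude pattern; each point carries only a phase (hypothetical encoding)."""
    return [cmath.exp(1j * p) for p in phases]


# Hypothetical incoming pattern from the eye.
eye_phases = [0.0, 1.0, 2.0, 3.0]
incoming = pattern(eye_phases)

# Three hypothetical patterns projected from memory.
memories = {
    "same object": pattern(eye_phases),
    "unrelated object": pattern([2.0, 0.5, 3.0, 1.0]),
    "phase-reversed": pattern([p + cmath.pi for p in eye_phases]),
}

responses = {name: comparator_response(m, incoming) for name, m in memories.items()}
best_match = max(responses, key=responses.get)

for name, value in responses.items():
    print(f"{name}: {value:.2f}")
print("Voila:", best_match)
```

In this sketch the matching pattern produces the largest response, the phase-reversed pattern cancels almost completely, and the unrelated pattern falls in between. The comparator does not need an exact match; it simply reports the strongest agreement.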

There are of course no literal flashlights projecting Fourier patterns onto comparator screens in the brain. The lights and projections are metaphors for processes that are carried out numerically in the model, using channel numbers for addressing, for pixel memory including phase conservation, and for summation.

If this model is viable, then the photo above, polka-dotted with an array of Fourier flashlights, is a significant illustration. It explains how we can glance at an object from a bygone époque and immediately identify it. It also explains how that same object can be pictured in different visual contexts captured at several different past moments.

The visual memory is not an image retrieved by combing through a serial archive of old, static, stored images. The memory is “live,” fully deployed in an enormous array, waiting in anticipation for reality to arrive from the retina of the eye.

What does this suggest about the performance of the system? Neuroanatomy has identified, so far, about 30 representations of the retina in the cerebral cortex. By replicating the incoming Fourier plane, one can multiply the area available for the deployment of Fourier flashlights, increasing the number and variety of the arrayed memories that wait in anticipation of an incoming image.

So many flashlights. It has a Darwinian quality. Thousands of memories are on offer all the time. Upon the arrival of a new image on the retina, one or more memorized images will be selected. It is never necessary to discover an exact fit to the incoming image. The signal from a van Heerden detector rises with similarity, so it naturally finds a “best fit.”

The memory of memories

The chopped, short, looped film strip memories for images can be further edited or recombined in such a way as to store, in a serial format, only useful images. By this we mean images that have been very frequently matched to incoming images from the retina. Such images are, in effect, played back again and again. This requires a memory for memories. A hit parade of useful associations. We can call such a pared down memory projector a metamemory.
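One way to picture the bookkeeping behind such a hit parade is a simple tally of matches. The sketch below is an assumption-laden illustration: the class name `Metamemory`, its `capacity` parameter, and the frame labels are hypothetical, and a "hit" is taken as given rather than computed by a comparator.

```python
from collections import Counter


class Metamemory:
    """Keeps a tally of comparator hits and exposes the most-matched frames."""

    def __init__(self, capacity):
        self.capacity = capacity   # how many frames the short strip can hold
        self.hits = Counter()      # frame label -> number of matches so far

    def record_hit(self, frame_id):
        """Call whenever a stored frame has matched an incoming image."""
        self.hits[frame_id] += 1

    def strip(self):
        """The pared-down strip: most frequently matched frames first."""
        return [frame for frame, _ in self.hits.most_common(self.capacity)]


meta = Metamemory(capacity=3)

# Hypothetical sequence of matches reported over time.
for frame in ["rock", "grouper", "rock", "coral", "rock",
              "grouper", "worm", "coral", "grouper", "rock"]:
    meta.record_hit(frame)

hit_parade = meta.strip()
print(hit_parade)  # ['rock', 'grouper', 'coral']
```

Frames that are matched again and again rise to the top of the tally and earn a place on the short strip; a frame matched only once does not.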

The comparator’s criterion for a “hit” is likeness. Similarity. Over time, objects that happen to be alike would steadily accumulate in a metamemory. The notion is roughly analogous to the conventional concept of priming.

A metamemory based on like-kind associations will outscore the serial memories and produce the quickest recalls. In other words, it will succeed and grow. With “likeness” as the selection criterion, one would expect to see the whole system evolve as the animal matures — growing steadily away from serial recall, which is simply based on recording order, and toward recall based on association and analogy.

For example, a sea animal’s immediate surroundings (a rock, a coral head, a neighboring grouper, a sprinkled pattern of featherduster worms) could all be stored as a readily accessible library of constantly recurring rims, edges, patterns and colors. These valuable memory strips could be allocated extra space on the screen, for more detail and higher resolution.

One purpose of this more compact and efficient strip of memory would be to help the animal notice novelty in its immediate environment: anything unfamiliar gliding into the quotidian scene. A second advantage is a quick read of edible prey and dangerous predators, and of the visual elements associated with them: lines, curves, colors, patterns and textures.

It would be helpful to have a metamemory that excerpts the recent past, the “just now.” A metamemory could be written to exclude all the trivial steps that intervene between cause and effect.

A modern creature – a Grand Prix racing driver for example – will have developed a metamemory that strings together the downshift points and apexes of every successive corner and straightaway in Monaco or the Nürburgring.

But note that it is no longer necessary to imagine a stream of memories that arrive and are stored in a linear time sequence like a film strip of a racetrack. An anthropologist might have a metamemory for the totems and icons of every culture she has studied. A biochemistry student will have a metamemory for amino acids, for sugars, for the ox-phos pathway, and branching, non-linear alternative pathways like the phosphogluconate shunt.

One can probably regress this principle, so that there come to be metamemories of metamemories, using key images as tabs, or points of entry. The comparators all operate in the Fourier plane, which is offstage and invisible to the conscious intelligence.

Replacing the retinal image

Finally, instead of simply scanning against the incoming image on the retina of the eye, seeking something similar from past experience, the metamemories might scan against each other’s products, which are images extracted from the past.

An image from a memory associated with a former boyfriend from the 1980s might include a Chinese restaurant from the 1980s. A bright red menu from that restaurant might be matched by a bright red pair of boots that seemed fashionable in 2005.

The requirement for serial recall is now exploded. We can jump from visual fragment to visual fragment, and match memories not just with the eye’s reality of the moment but also with past realities glimpsed and recorded in past moments. The fragments are serial recordings, but they are short, selected, and looped.
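The jump from fragment to fragment can be caricatured in code. Everything specific in this sketch is hypothetical: the fragments are reduced to small sets of feature labels, likeness is measured by set overlap (the Jaccard index) rather than by a comparator in the Fourier plane, and the chain simply stops when nothing remaining resembles the current fragment.

```python
def jaccard(a, b):
    """Overlap between two feature sets: 0.0 (nothing shared) to 1.0 (identical)."""
    return len(a & b) / len(a | b)


def association_chain(fragments, start):
    """Hop from fragment to fragment, always to the most similar unvisited one."""
    chain = [start]
    current = start
    while True:
        candidates = [name for name in fragments if name not in chain]
        if not candidates:
            break
        best = max(candidates,
                   key=lambda name: jaccard(fragments[current], fragments[name]))
        if jaccard(fragments[current], fragments[best]) == 0:
            break  # nothing left resembles the current fragment
        chain.append(best)
        current = best
    return chain


# Hypothetical fragments, each reduced to a handful of visual features.
fragments = {
    "boyfriend": {"face", "1980s"},
    "restaurant menu": {"1980s", "red", "menu"},
    "boots": {"red", "boots", "2005"},
    "shark": {"fin", "grey"},
}

chain = association_chain(fragments, "boyfriend")
print(chain)  # ['boyfriend', 'restaurant menu', 'boots']
```

The chain runs from the boyfriend to the menu by way of the shared decade, and from the menu to the boots by way of the shared color. No step depends on the order in which the fragments were recorded.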

Conclusions

The past and present coexist in the visual memory of the brain.

It seems that a visual memory model structured in this way could mix present and past imagery into something new. In the frequency domain images can be combined and recombined and subsequently Fourier transformed into literal images never before seen. In this way the system can do more than remember. It can invent. It can create.

By arraying thousands of Fourier flashlights simultaneously and in parallel, memory recall can be made sudden.

This is a brain model that can actually function using our absurdly slow-moving nerve impulses, which have typical speeds ranging from just 60 mph to 265 mph.

The model is able to do so much work in parallel and simultaneously because of a peculiar property of recordings made in the frequency domain that conserve spatial phase information: Each tiny part one might isolate encodes and can be used to reproduce the whole of a spatial image.

For this reason, thousands of elements of an incoming Fourier plane from the retina can be separately and simultaneously compared with past Fourier plane images pumped out of memory. The model is massively parallel, incremental-analog, and massively, simultaneously multitasking.

It requires a multichannel neuron and the conservation of spatial phase. Its playground and operating system is the Fourier plane of the brain’s retina of memory. This crucial Fourier plane of the brain is antipodal to, and is a representation of, the back focal plane of the lens of the human eye.